Intel’s new graphics cards have a lot of people talking, for reasons both good and bad. First of all, having such a large company dip its toe into this particular part of the market is great news for consumers, as it inherently disrupts the status quo: the duopoly held by NVIDIA and AMD.

More competition benefits consumers most of all, even if that competition isn’t quite ready for mass adoption yet.

Here’s the thing: Intel GPUs still aren’t as competitive as we’d like them to be, but they’re a lot better than one would expect and, perhaps most importantly, they’re getting better and better with each passing month.

It all depends on your workflow, so it’s a bit of a double-edged sword. For some tasks and projects, Intel GPUs are not only sufficient but genuinely stellar. For others, they’re absolutely abysmal.

Image Credit: Intel

That was to be expected given how new they are. Still, we’re truly impressed by what Intel has been able to accomplish in such a short period of time; that doesn’t, however, warrant a glowing recommendation. Far from it.

Anyone who happens to buy an Intel graphics card at this point in time is basically accepting the role of a beta tester.

You should never rely on vague promises of future updates, as the company itself isn’t in a particularly good position at the moment: it’s hemorrhaging money on all fronts and has been losing ground to its biggest competitors for years.

A change in trajectory and fortune is possible, but it’s nonetheless fairly unlikely.

Still, that’s a topic for a different time and place, so let’s focus on the GPUs themselves and whether or not they’re worth the investment.

But first, a word of caution:

Intel ARC — Be Careful Which Content You Consume

It’s hard to fully and accurately gauge the performance of Intel’s latest graphics cards, simply because they keep improving with each passing month.

In some scenarios, like gaming, we’re talking about quantum leaps (depending on the title, of course). In more production-focused workflows, the situation is quite a bit different.

That’s the thing: Intel keeps updating its graphics drivers at an incredibly fast rate — in no small part because it has to. It needs to keep up with NVIDIA and AMD in order to stand even the slightest chance of being competitive.

As a result, the vast majority of reviews, opinion pieces, and videos on the matter that were written, filmed, and released mere months ago should be ignored. They’re outdated and do not paint a realistic picture.

And, by the looks of it, even the content and data we have right now will be “obsolete” in the very near future.

So, if you’re interested in an Intel GPU and want to be on the safe side, make sure to read or watch the most recent coverage available, lest you end up with a skewed view of just how powerful these cards really are.

Intel ARC Naming Scheme and Product Breakdown

Intel’s ARC line-up, while by no means confusing, is incomplete. The company had many grandiose plans for its dedicated GPUs and yet, for one reason or another, only a handful of them have actually seen the light of day.

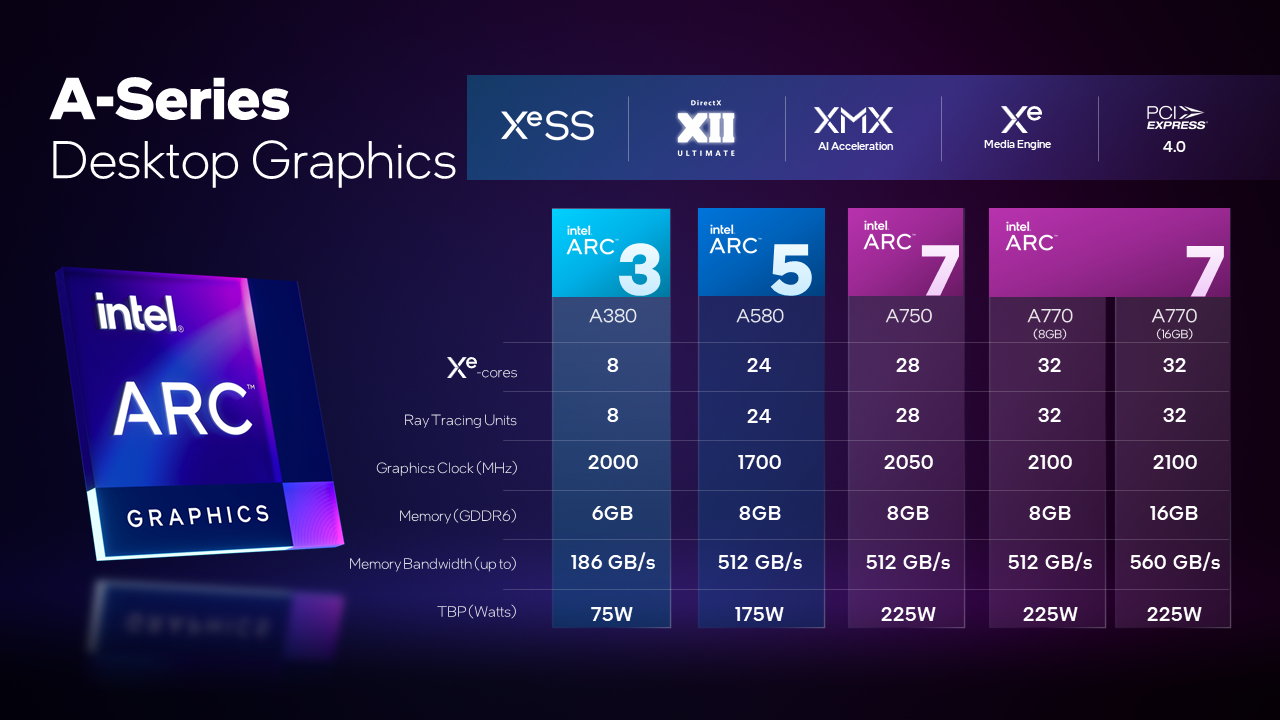

At the time of this writing, only the A380 (6GB), A750 (8GB), and A770 (8GB/16GB) have hit the market.

The first one is the quintessential budget graphics card — and is, therefore, about as underpowered as it gets. The A750, on the other hand, is more of a mid-ranger.

It’s a good option for 1080p and 1440p gaming and can pack a surprising punch, especially when it comes to ray tracing.

It’s also okay for production workloads but nonetheless has the exact same limitations as the rest of the ARC line-up. Iffy drivers and unimpressive software/hardware support are the bane of its existence.

The A770 is basically a souped-up version of the A750 and is the very best dedicated GPU that Intel has to offer. If you’re after the absolute best performance — from an Intel graphics card, at least — the 16GB variant is the one to go for.

Source: Intel

Intel ARC Pro GPUs Designed for Workstations

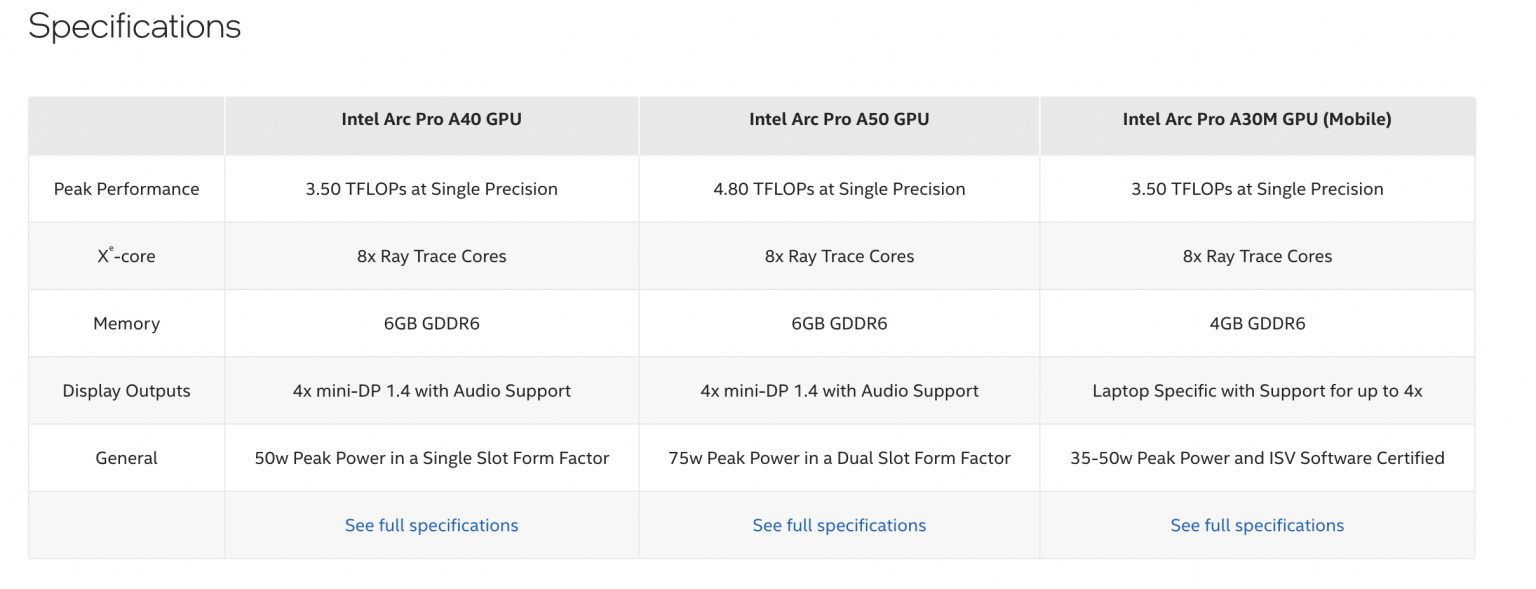

Intel also has a dedicated line of ARC GPUs that are primarily designed for workstations and production-heavy workloads.

There are only three (“entry-level”) options at the time of this writing: the ARC Pro A40, A50, and A30M.

Image Credit: Intel

That last one’s a mobile variant and could, in time, find its way into pro-oriented workstation laptops. You can already find a model or two on the market, but they’re few and far between — to say the least.

All three models have support for hardware-based ray tracing and AV1 acceleration, alongside machine learning capabilities. The A40 is a single-slot design, with the A50 taking up two slots.

Source: Intel

Intel, however, seems to be facing the exact same problem as with its gaming-oriented line-up: availability. The company has supposedly started shipping these workstation-focused GPUs to OEMs, but they’re still not readily available.

And, frankly, even when they do become somewhat ubiquitous, they’ll probably still trail behind NVIDIA and AMD’s latest offerings.

At best, they’ll be competitive, which, while certainly a win for Intel, isn’t going to make any tangible difference to its position in the market.

These pro-grade GPUs have a lot going for them, but until they’re out in the wild, we can only theorize as to how well they’ll perform and whether they’re as good as Intel claims they are. Intel supposedly plans on pricing them aggressively, but that, too, remains to be seen.

ARC Pro GPUs are already certified for numerous different Autodesk products, including AutoCAD, 3ds Max, Fusion 360, Revit, Maya, and so on.

Other notable ISVs (Independent Software Vendors) are expected to give their stamp of approval in the very near future, which would make these GPUs a far more viable option.

The potential is there, but it’s still way too early to give Intel the benefit of the doubt.

Intel ARC Content Creation — Performance Breakdown

Intel’s first (true) foray into the discrete GPU market targets just a single category of users: gamers.

Content creators and productivity workers, in that sense, are more of an afterthought. Intel wants to cater to their needs as well, but it can only do so much at once, especially given NVIDIA’s current position in the market (and CUDA’s relevance).

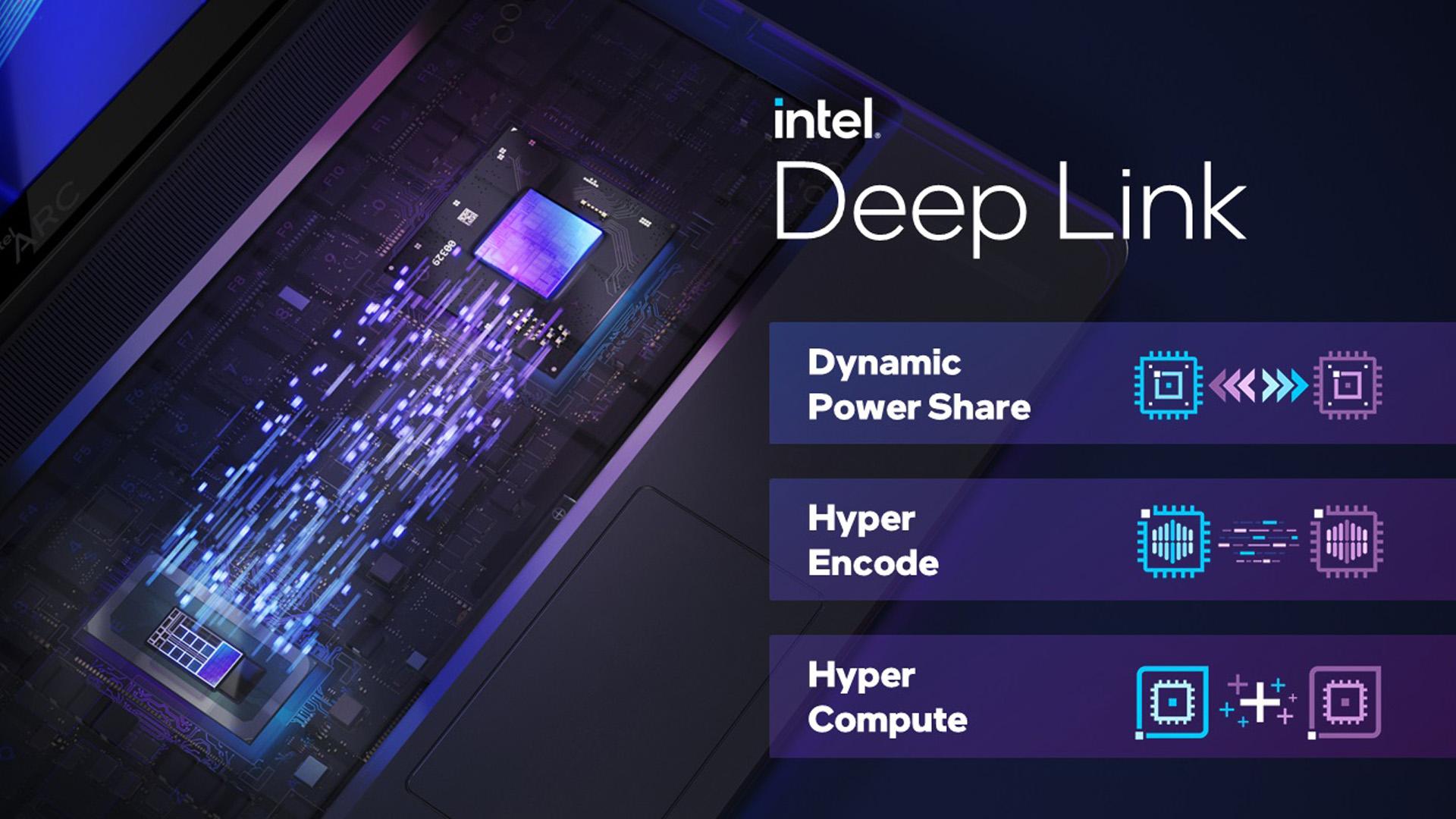

Intel does employ its Deep Link technology to make the dedicated GPU and the iGPU work together (assuming you have a non-F Intel processor, since F-series chips lack integrated graphics). While that should result in a noticeable performance benefit for video editing (faster HEVC/H.265 and VP9 encoding), it is only supported by a handful of applications at the time of this writing.

Image Credit: Intel

Intel ARC and DaVinci Resolve

Source: Puget Systems

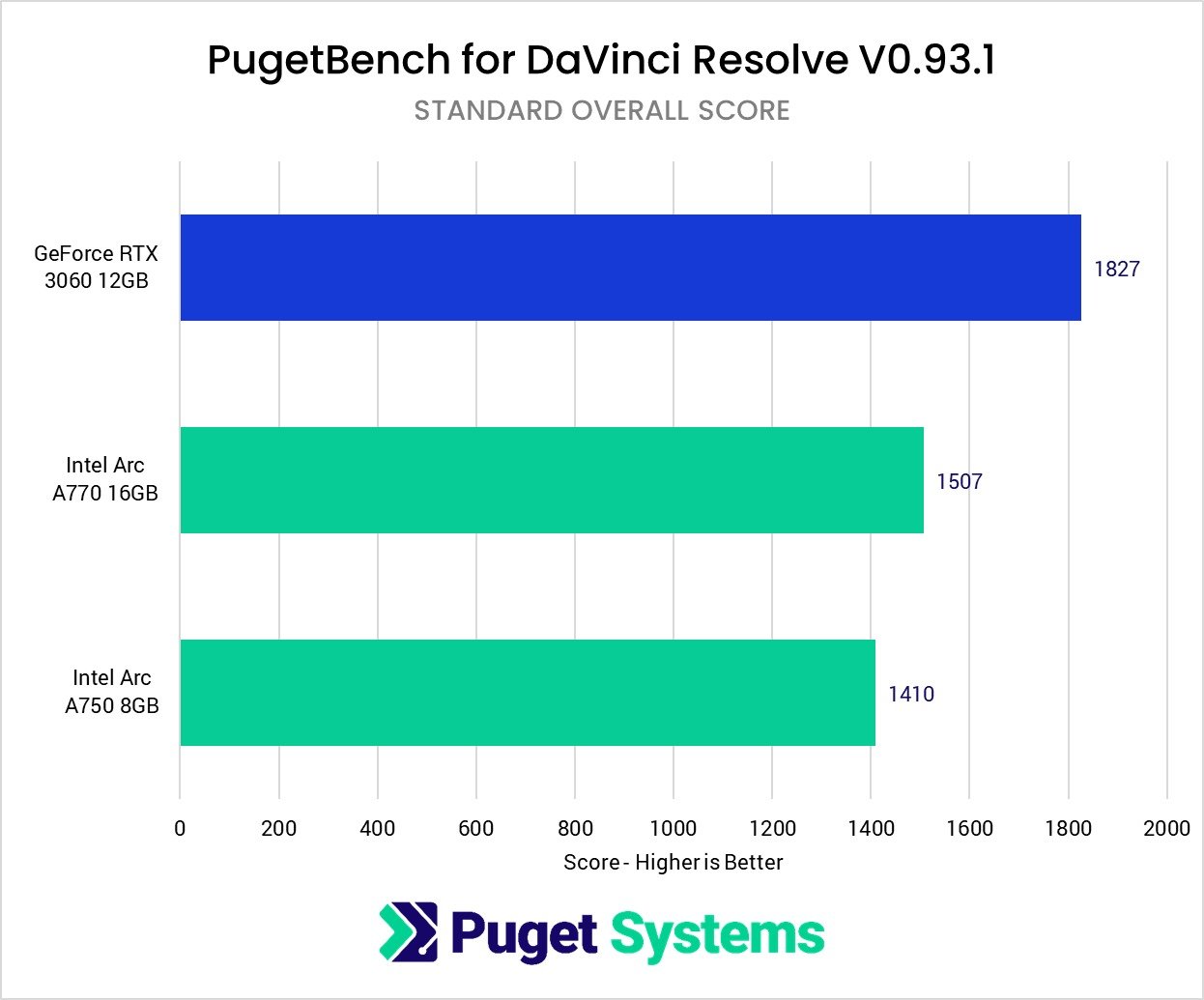

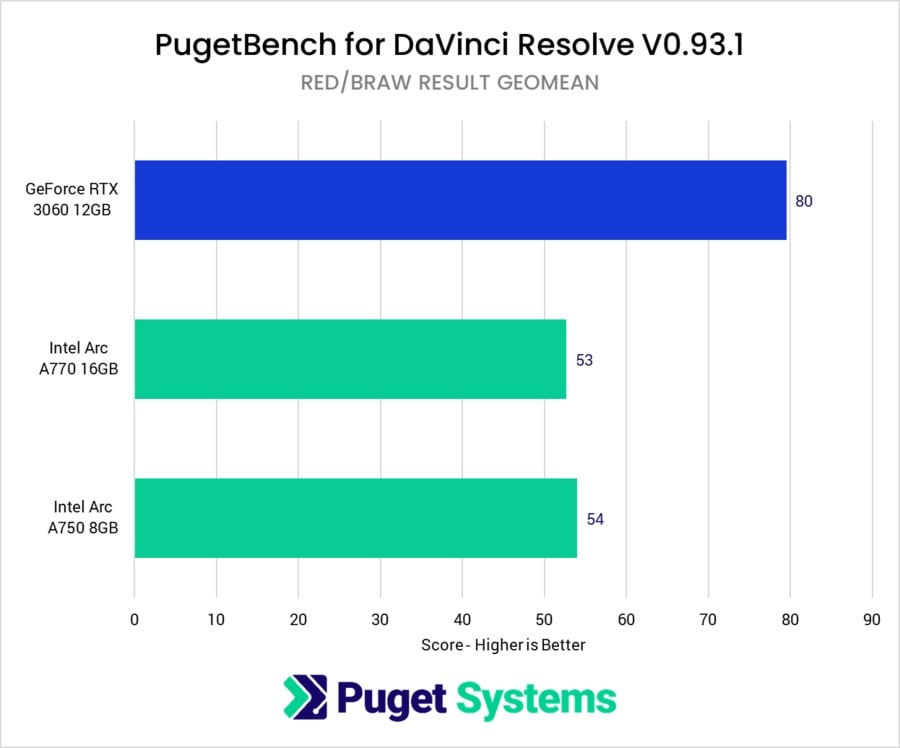

On the surface, Intel GPUs can keep up with the more popular RTX 3060 (12GB), although there’s still a noticeable difference when it comes to real-world performance.

Do note that, at the time of this writing, DaVinci Resolve is the only one of the video editors covered here that has been updated to support ARC graphics cards.

The iGPU on all newer Intel CPUs actually handles the decoding and encoding of H.264 and HEVC footage with surprising ease, so it’s not like you need a dedicated graphics card (let alone one made by Intel) to have smoother playback and timeline performance.

Intel’s Quick Sync is remarkably good at what it does and is one of the main reasons why so many content creators still go with Intel, despite AMD’s impressive advances and overall value proposition.

Image Credit: Intel

It’s not a matter of sheer performance or, say, core count, but rather optimization and specific hardware-related benefits that end up making a world of difference.

For RED and BRAW footage, Intel’s top graphics cards trail behind the RTX 3060.

Source: Puget Systems

This could change a bit through software optimization, but you really shouldn’t hold your breath for it to happen. Intel has been making great strides optimization-wise, but for all we know this may well end up being the best these GPUs can perform.

Then again, working with RED and BRAW footage probably isn’t the primary goal of someone buying a mid-tier Intel graphics card, but still — it leaves a bit to be desired.

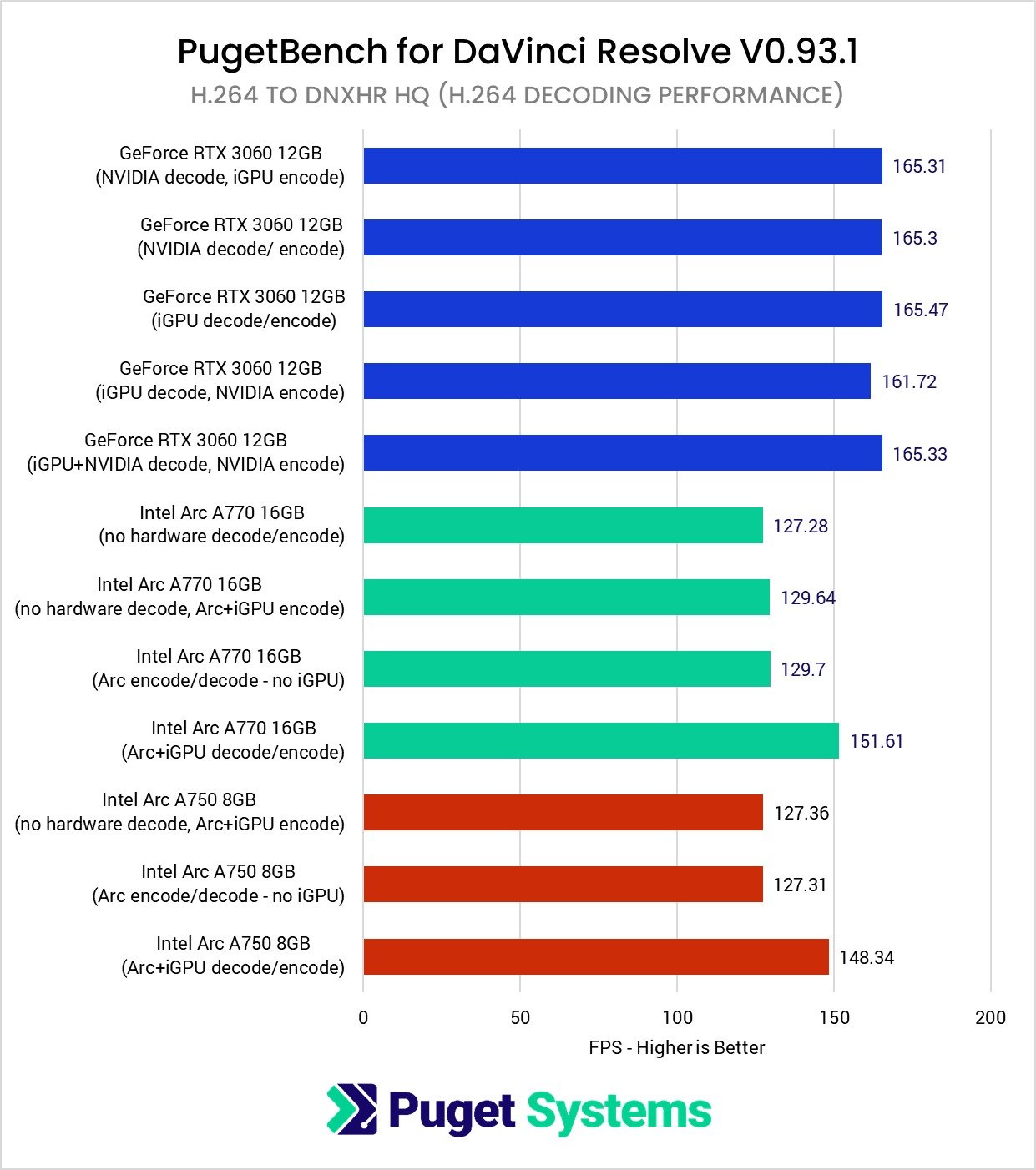

Let’s take a look at H.264 footage next: a much more reasonable test case for this class of GPU.

Source: Puget Systems

Source: Puget Systems

For H.264 decoding, ARC GPUs do not provide any kind of performance benefit whatsoever.

In fact, in some cases and scenarios, they’re actually worse than Intel’s integrated graphics. Can this be tackled and improved with a software update? Most likely, but whether such an update will ever be shipped still remains to be seen.

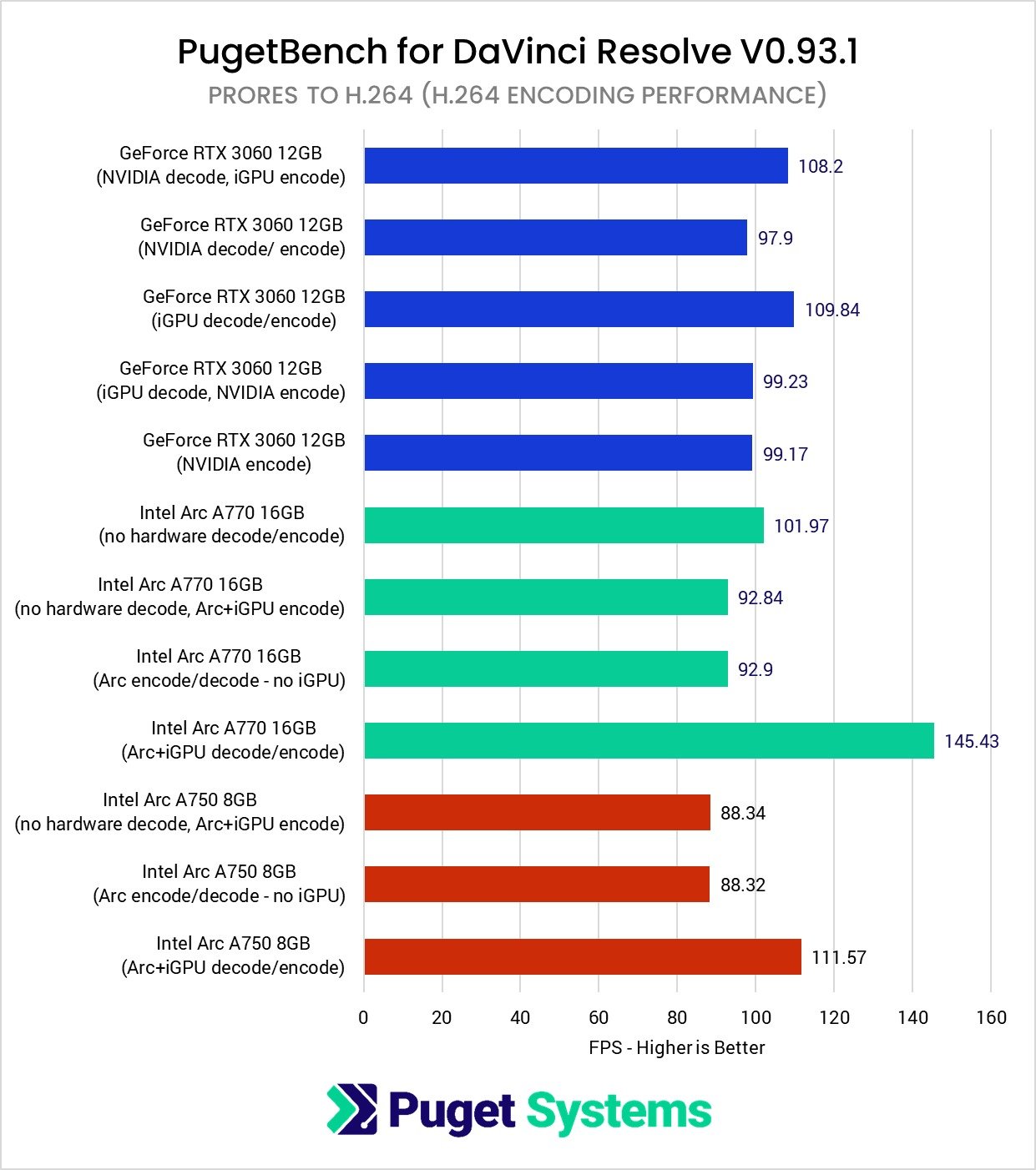

H.264 encoding is a different story. Here, pairing an ARC GPU with Intel’s Quick Sync offers the absolute best performance in this particular comparison, which shows the true potential of the combination.

There are still a few performance-related conundrums, but they can, in theory, be patched with a driver update.

Once Intel’s Deep Link is harnessed, the A770 is 32% faster than Intel’s iGPU, 47% faster than NVENC, and 43% faster than the native encoder found on the Intel Core i9-12900K.

These are some truly impressive results and, even though we’re only observing them in isolation and within this one particular use case, they nonetheless show a lot of potential.

Source: Puget Systems

Intel ARC and Adobe Premiere Pro

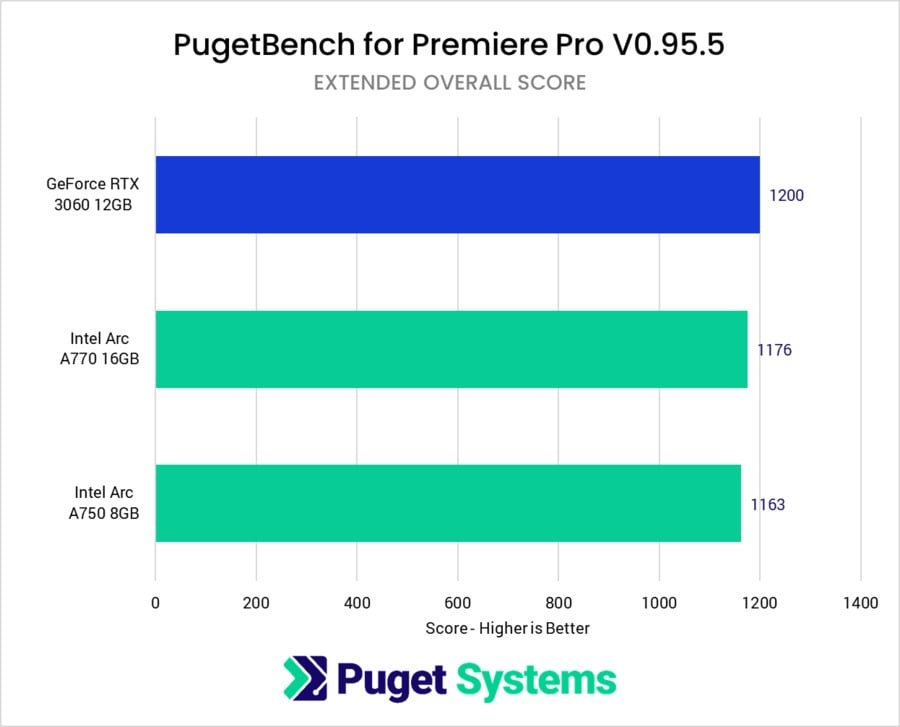

Adobe’s wildly popular NLE still doesn’t have native support for Intel’s ARC GPUs, so you won’t get any ARC-based hardware decoding/encoding. GPU-accelerated effects, on the other hand, do get a nice boost.

Still, the A750 and A770 manage to keep up with NVIDIA’s RTX 3060 from an overall perspective, despite the fact that they’re not being utilized to their full potential.

That being said, if working in Premiere Pro is your main goal and concern, going with an NVIDIA GPU will almost certainly result in a better overall experience, with far fewer bugs and issues.

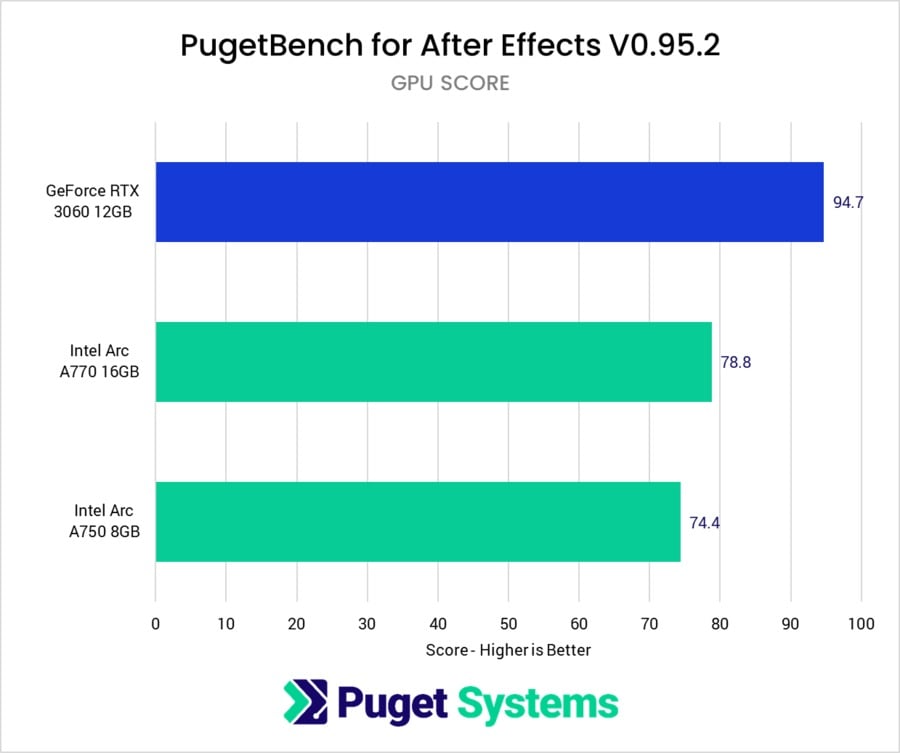

Intel ARC and Adobe After Effects

Source: Puget Systems

Much the same can be said for After Effects. Seeing as AE scales mostly with CPU performance, we’re fairly impressed with how well the A770 and A750 are able to compete with NVIDIA’s mid-tier offerings.

They’re not better by any stretch of the imagination and they might cause certain driver-related issues, but overall, they’re actually quite good — especially for a first-generation product.

Again, much like with Premiere Pro, going with an NVIDIA graphics card will definitely result in a better, “hassle-free” experience.

Still, you’d get quite a lot of “bang for your buck” if you happen to go with the 16GB variant of the A770. After Effects needs as much VRAM as it can get, and having a whopping 16GB of memory (for a very reasonable price) would no doubt serve as a tremendous boon to your creative endeavors.

Then again, Adobe doesn’t have that big of a reason to work on native support for ARC GPUs as they’re nowhere near as ubiquitous as their competitors. This is something you definitely need to keep in mind.

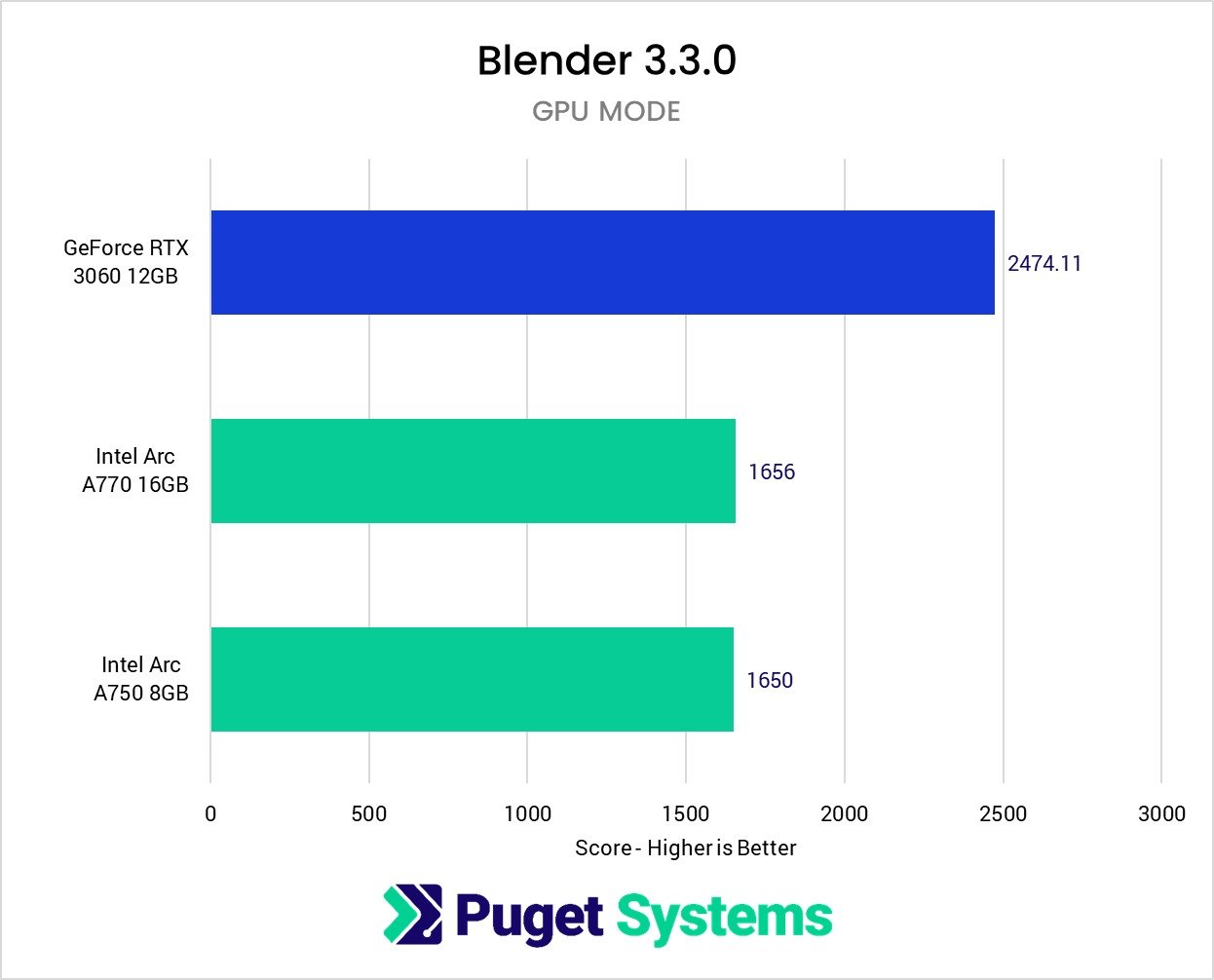

Intel ARC and Blender

Blender is where things fall apart for Intel, despite the fact that Blender actually has native support for ARC GPUs. NVIDIA is still king and, frankly, that’s not going to change any time soon.

Source: Puget Systems

NVIDIA’s graphics cards might be unreasonably priced, but they do offer the best performance for most production-heavy workflows.

Still, if Intel keeps at it, it might be able to upend the status quo in a few years’ time.

It’s a stretch, sure, but by no means is it impossible, especially if it aggressively prices its offerings and starts pushing out all the necessary updates that would turn ARC GPUs into a truly viable option for content creators.

That’s the thing: there’s ample potential, but we’re not quite sure whether Intel wants — or knows how — to capitalize on it.

If we ignore OptiX (NVIDIA’s ray tracing API, which Blender’s Cycles renderer can use on RTX cards) for the moment, Intel ARC GPUs are actually a whole lot better than one would expect, sometimes even beating the likes of AMD’s RX 6800 XT and NVIDIA’s RTX 3070 Ti.

In that scenario, Intel is remarkably competitive. With OptiX in the picture, it’s a complete blowout in NVIDIA’s favor.

RT acceleration will be implemented in the very near future and, while it should make for a slightly more competitive state of affairs, it’s still too early to give Intel the benefit of the doubt and proclaim ARC a viable option for any serious work in Blender.

Image Credit: Intel

NVIDIA simply has too firm a grip on creative workloads and, while Intel does stand a chance of becoming competitive, that’s still a few months (if not years) away, if it happens at all.

Are Intel Iris Xe iGPUs Any Good?

That depends. If all you want to play are undemanding indie games, an Intel iGPU will suffice — but your mileage will vary depending on the title, resolution, and desired frame rate.

They’re great for video editing, primarily because of Intel’s Quick Sync.

Having an Intel iGPU makes a world of difference in basically all of today’s most popular non-linear editors, for both timeline performance/scrubbing and rendering.

Are Intel ARC GPUs Good for Content Creation?

Not really. Intel is primarily focused on gaming with its top-of-the-line graphics cards, with content creation being more of an afterthought.

Depending on your workflow, you’re bound to run into a myriad of problems, such as iffy drivers and limited or non-existent software support. Intel still has a long way to go if it wants to catch up with NVIDIA in heavy production workloads.

Image Credit: Intel

You should, therefore, avoid buying an ARC graphics card if you’re primarily focused on content creation.

Are Intel ARC GPUs Good for 3D Work?

They’re better than expected but are still not as good — or, perhaps most importantly, as stable — as NVIDIA’s offerings. If you just want to work a bit in, say, Blender, then you’ll definitely be able to get by with an ARC graphics card.

If, however, you’re a seasoned professional — or someone whose livelihood depends on 3D work — then you really ought to avoid buying an Intel GPU for the foreseeable future.

This might change over time, but there are never any guarantees, especially considering Intel’s current woes and the fact that it’s losing both relevance and market share.

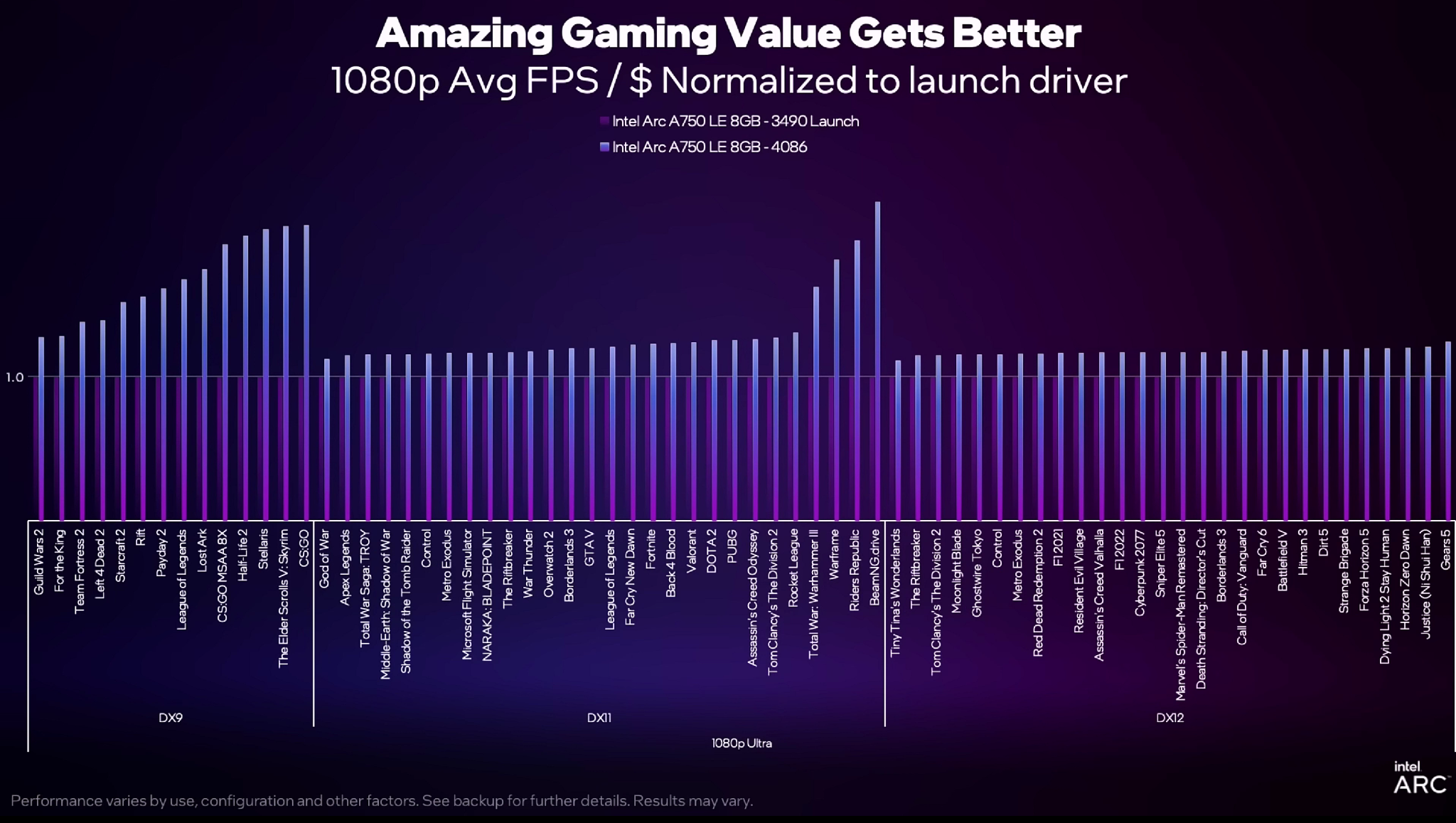

Are Intel ARC GPUs Good For Gaming?

They actually are! It all depends on a slew of factors, though, as not all titles perform as well as you’d expect them to.

Either way, all of this is quite a surprise given that they’re still a first-generation product. The fact that they can rub shoulders with (and, in certain scenarios, outperform) equivalent offerings from NVIDIA and AMD speaks volumes.

Still, there are a number of important asterisks and footnotes you need to keep in mind before pulling out your wallet and making any kind of purchasing decision.

For starters, Intel GPUs only perform at their best with Resizable BAR (ReBAR for short) enabled. Without it, performance is disastrous, to say the least.

Moreover, Intel ARC GPUs perform best in titles that support DirectX 12. DX11 games do run, but how well they perform varies considerably from title to title.

Certain strides have been made on Intel’s part to alleviate many of the launch issues that had plagued its GPUs, but it’s still not a stellar situation overall.

Source: Intel

As for ray tracing performance, ARC GPUs can, in most scenarios, keep up with NVIDIA’s equivalent offerings, which is an astounding result given their budding nature.

Intel’s XeSS is nowhere near as widely supported as DLSS, but you won’t need to rely on upscaling as often as you might expect.

And, well, ARC GPUs are leaps and bounds better at ray tracing than AMD’s equivalent graphics cards, which is yet another unexpected win for Intel: not an important one per se, but a win nonetheless.

If you’re mostly focused on modern titles (as opposed to legacy ones), you’ll get excellent performance, especially for the asking price. There’s a lot of work ahead of Intel and, by the looks of it, it knows that too.

Fortunately, this is a far better start than anyone had expected and, if Intel keeps shipping improved drivers, it will stand a solid chance of upending the status quo.

What’s In Store for Intel GPUs In the Future?

Raja Koduri, Chief Architect behind the entire ARC line-up, has just parted ways with Intel.

Depending on the way you look at it, it was either an “amicable” split or, conversely, a telling move on Intel’s part — one which doesn’t bode well for the future of its dedicated line of GPUs.

Intel isn’t likely to pull a Google (abruptly killing off a product line) and fully exit the dGPU market, but it will probably downsize as much as possible so as to stop hemorrhaging money.

That’s the thing: Intel has been leapfrogged by basically all of its competitors and, frankly, the outlook is about as grim as it gets.

Investing any further in this particular segment of the market, one in which Intel has barely made a splash, would be foolish and about as far from a business-savvy decision as it gets.

To make things clear: Intel doesn’t make bad GPUs. Far from it. It just failed to make them in time.

The A770 (and its less powerful brethren) was supposed to be released many months before it finally hit the shelves and, by that point, it could no longer compete on even footing. The drivers needed ample polishing, too, which certainly didn’t help.

For a deeper dive into Intel’s current predicament, make sure to check out the video on the subject from prolific leaker Moore’s Law Is Dead.

Conclusion

Intel ARC GPUs are truly phenomenal, especially for a first-generation product. They’re not the home run Intel hoped for, but they’re an impressive showing and, perhaps most importantly, a stellar foundation for Intel to keep building on.

The line-up isn’t perfect, though, and it’s only a viable option for gamers and those looking for something different and unique who, by extension, want to support a third “player” in the market instead of further strengthening NVIDIA and AMD’s duopoly.

The A770, for instance, is a better option than the RTX 3060 performance-wise, but it doesn’t come with nearly as many bells and whistles nor can it be used for as many workflows and use-cases.

It’s a tremendous trade-off and, frankly, going with NVIDIA still makes a fair bit more sense.

FAQ

Let’s go over a few potential questions you might have regarding Intel’s ARC GPUs:

Are Intel ARC GPUs Any Good?

They actually are, but they nonetheless come with more asterisks and prerequisites than one would expect.

They don’t offer a “plug and play” experience which, for the impatient or those who aren’t particularly tech-savvy, is as far from ideal as it gets.

All that being said, they offer tremendous value and, if you can live with their flaws and deficiencies — and aren’t too tied to “legacy” titles and production workloads — they’ll definitely get the job done.

Are Intel ARC GPUs Good for Gaming?

Absolutely, especially at 1440p. You will need a CPU and motherboard that support ReBAR, though, so that’s definitely something worth keeping in mind.

Moreover, DirectX 11 titles still don’t perform as well as one would expect, whereas DX12 titles generally run well, often outperforming NVIDIA’s own RTX 3060 (and the 3060 Ti, in some cases).

All in all, Intel ARC GPUs are a stellar option, especially considering their price.

They’re not the most polished performance-wise and you’re bound to run into some issues as far as drivers are concerned, but that, to some, is an acceptable hindrance and peculiarity — one that keeps getting smaller and smaller due to Intel’s phenomenal patching cadence.

Are Intel ARC GPUs Good for Content Creation?

They’re mediocre, if a bit better than expected. They’re just not as good as NVIDIA’s equivalent offerings, nor are they natively supported by the vast majority of programs out there.

So, if content creation is your main concern, look elsewhere.

Are Intel ARC GPUs Worth It?

They are, especially at the time of this writing. The sheer fact that the A770 can compete with (and even beat) the RTX 3060 despite costing noticeably less is a tremendous boon for gamers on a budget, especially if they happen to be targeting 1440p.

They’re not a particularly wise investment for other workloads, though, so just keep that in mind.

Do ARC GPUs Support Ray Tracing?

They do, and they’re a lot better at it than one would expect. They can even give NVIDIA’s mid-tier offerings a run for their money! It’s not necessarily a standout feature, but it sure is a surprise.

Over to You

What are your thoughts on Intel ARC GPUs now that they’ve been out in the wild for a while? Would you consider buying one despite their many driver and compatibility issues?

Let us know in the comment section down below and, in case you need any help, head over to our forum and ask away!
