Trying to find the best GPU for video editing and rendering? Today, I’ll be going over all you need to know about getting a GPU for video editing, rendering, or both, and giving you a set of up-to-date recommendations at the end of this article. You can also use the Table of Contents below to skip ahead to those GPU recommendations if you just want “the sauce”, so to speak.

That said, this article has also been fully revamped compared to its pre-December 2023 version, in hopes of improving the flow and organization of the topics discussed. The revamp also expands the previous limited-but-concise roster of three GPU recommendations to a new total of six (3 mainstream, 3 pro).

Whether you want to follow along for a detailed breakdown of what you need to know or skip ahead to the picks, let’s dive in! I’ll also be taking a moment to discuss used GPUs before wrapping up the article.

TABLE OF CONTENTS

What To Know Before Buying a Video Editing and Rendering GPU

Core GPU Specs and What They Mean

Below, I’ll be listing core GPU specs and what kind of workloads they correspond to. I’ll discuss how to more thoroughly evaluate a given GPU’s performance later in the article, but for now it’s important to familiarize yourself with the basic specifications of a GPU before you consider buying one for this purpose.

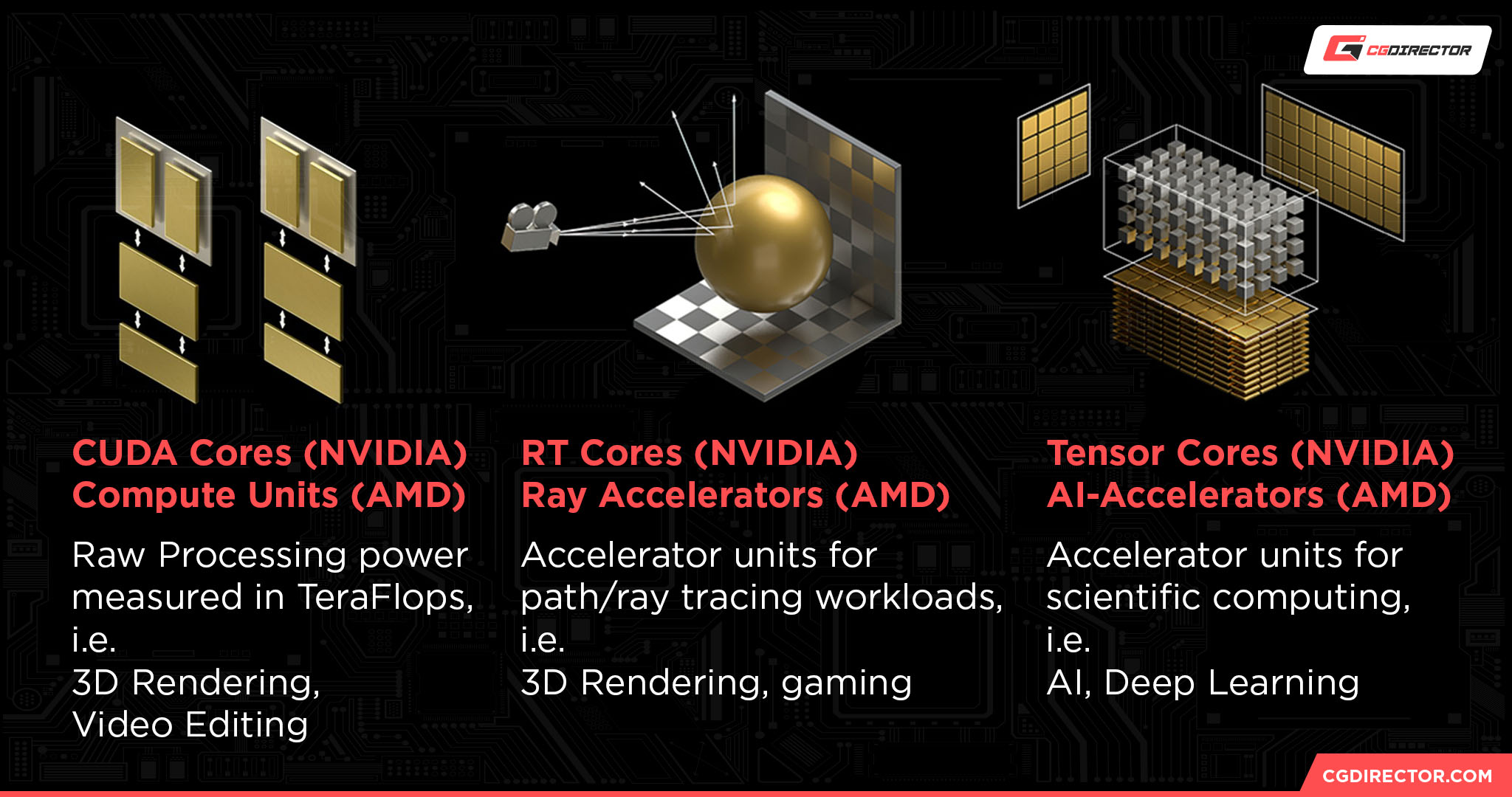

- CUDA Cores (Nvidia) / Compute Units (AMD) — Corresponds to raw processing power, which can be a great metric for GPU-accelerated video rendering and 3D rendering performance.

- Tensor Cores — Corresponds to deep learning/AI capabilities, as well as FP32/16 workloads.

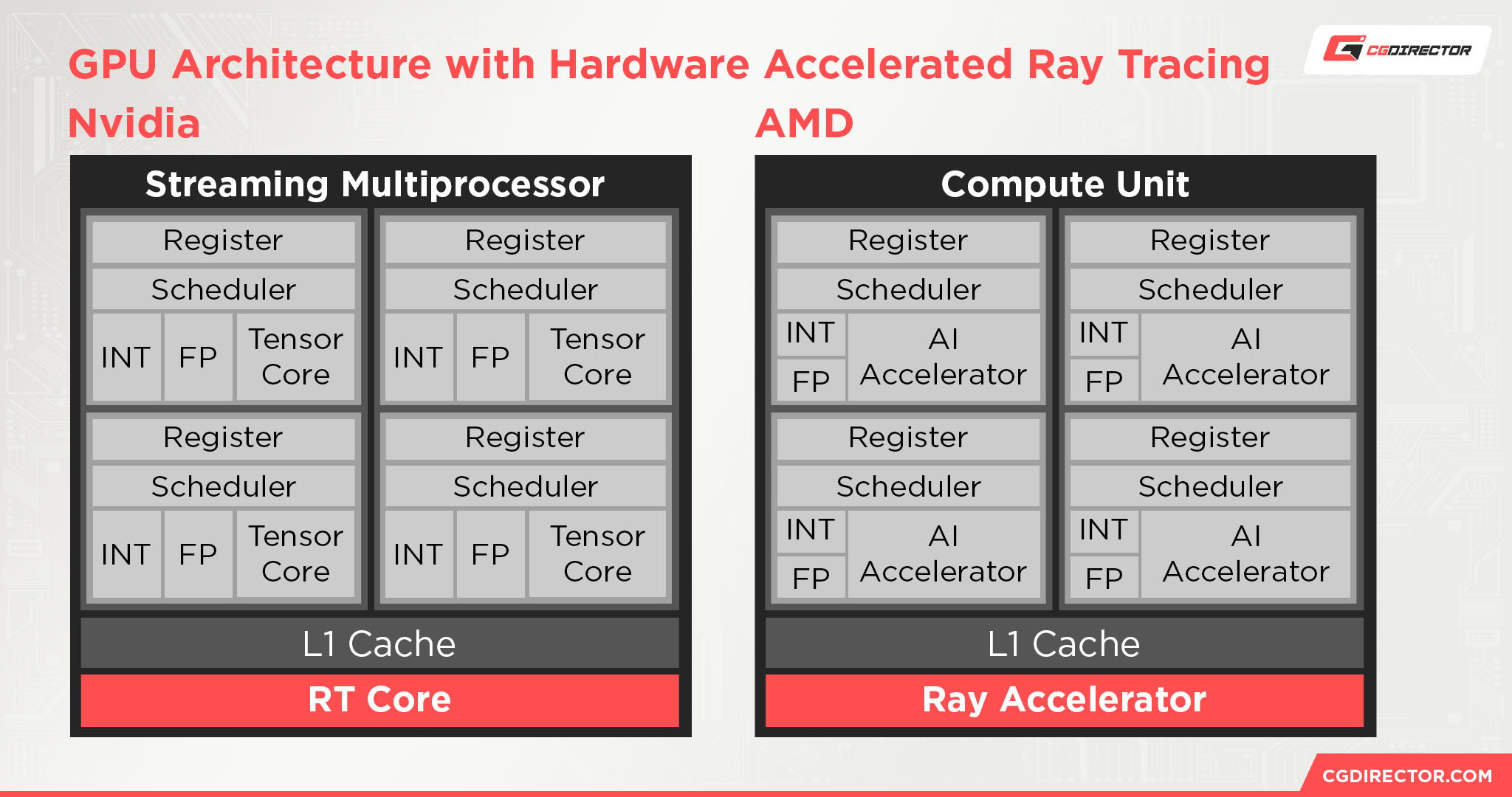

- RT Cores (Nvidia) / Ray Accelerators (AMD) — Corresponds to ray tracing performance, which can be an accelerator for 3D Rendering in supported render engines. We’ll discuss ray tracing in more detail a little later in the article!

- VRAM — For managing larger scenes, edits, etc. without overfilling memory. Higher is almost always better. Consider Alex’s guide to How Much VRAM You Need if you want more info on how different workloads are impacted by VRAM capacity.

- GPU Clock — A measurement of GPU core speed, most useful for comparing GPUs within the same architecture. Higher GPU clocks on the same GPU (via overclocking) may also improve render performance in certain scenarios.
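If you already have an Nvidia card installed, some of the specs above can be read back from the driver. The snippet below is a minimal sketch that assumes the `nvidia-smi` command-line tool is on your PATH; it asks for the `name`, `memory.total`, and `clocks.max.graphics` query fields and parses the CSV it returns. The function names are my own, and note that `nvidia-smi` does not report CUDA, Tensor, or RT core counts, so those still have to come from the spec sheet.

```python
import csv
import io
import subprocess

# Query fields passed to `nvidia-smi --query-gpu`, picked to mirror the specs above.
FIELDS = ["name", "memory.total", "clocks.max.graphics"]


def parse_gpu_csv(text):
    """Turn nvidia-smi CSV output (no header, no units) into a list of dicts."""
    gpus = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not record:
            continue  # skip blank lines
        name, vram_mib, max_clock_mhz = record
        gpus.append({
            "name": name,
            "vram_gib": round(int(vram_mib) / 1024, 1),  # driver reports MiB
            "max_clock_mhz": int(max_clock_mhz),
        })
    return gpus


def query_gpus():
    """Run nvidia-smi and return parsed specs (needs an Nvidia driver install)."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(FIELDS),
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)
```

Calling `query_gpus()` on a machine with an Nvidia driver returns one dictionary per installed card, which makes it easy to compare VRAM and clock speed against a card you are thinking of buying.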

Whether To Use AMD or Nvidia For a Video Editing and 3D Rendering GPU

Curious about whether to opt for AMD or Nvidia for your video editing and rendering needs?

I want to take some time to take a nuanced look at both of your potential paths here.

But before continuing, I also have to emphasize that Nvidia is definitely the industry leader when it comes to pro-facing GPUs, even if you’re buying their mainstream graphics cards. The reason for this mainly boils down to CUDA Cores being the most widely-supported hardware for GPU acceleration that’s available on the market, leading many users to favor Nvidia due to sheer compatibility before anything else.

However, this doesn’t mean AMD GPUs are useless for professional workloads by any stretch of the imagination. In video editing, AMD GPUs are pretty much on-par with Nvidia GPUs in every way that counts, including support for GPU-accelerated renders and timeline performance. AMD’s onboard encoders used for gameplay streaming and recording are also perfectly functional, though Nvidia’s NVENC encoders are known to be a little better than AMD’s in terms of image quality.

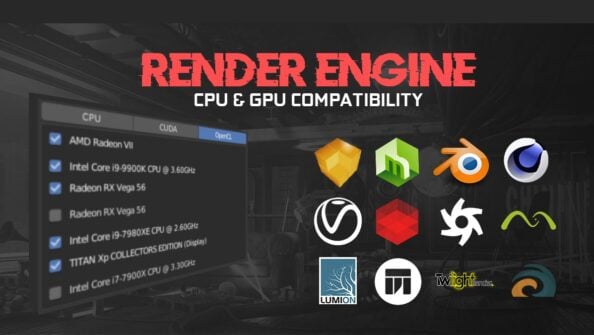

3D rendering is where the industry’s overwhelming support for CUDA more heavily favors Nvidia GPUs, as reflected in the Techgage benchmarking video embedded above. Some 3D rendering software simply doesn’t work without CUDA acceleration at all, making it a non-starter on AMD. Other 3D rendering software may support OpenCL or other standards that work on AMD GPUs, but speed usually isn’t on par with Nvidia’s.

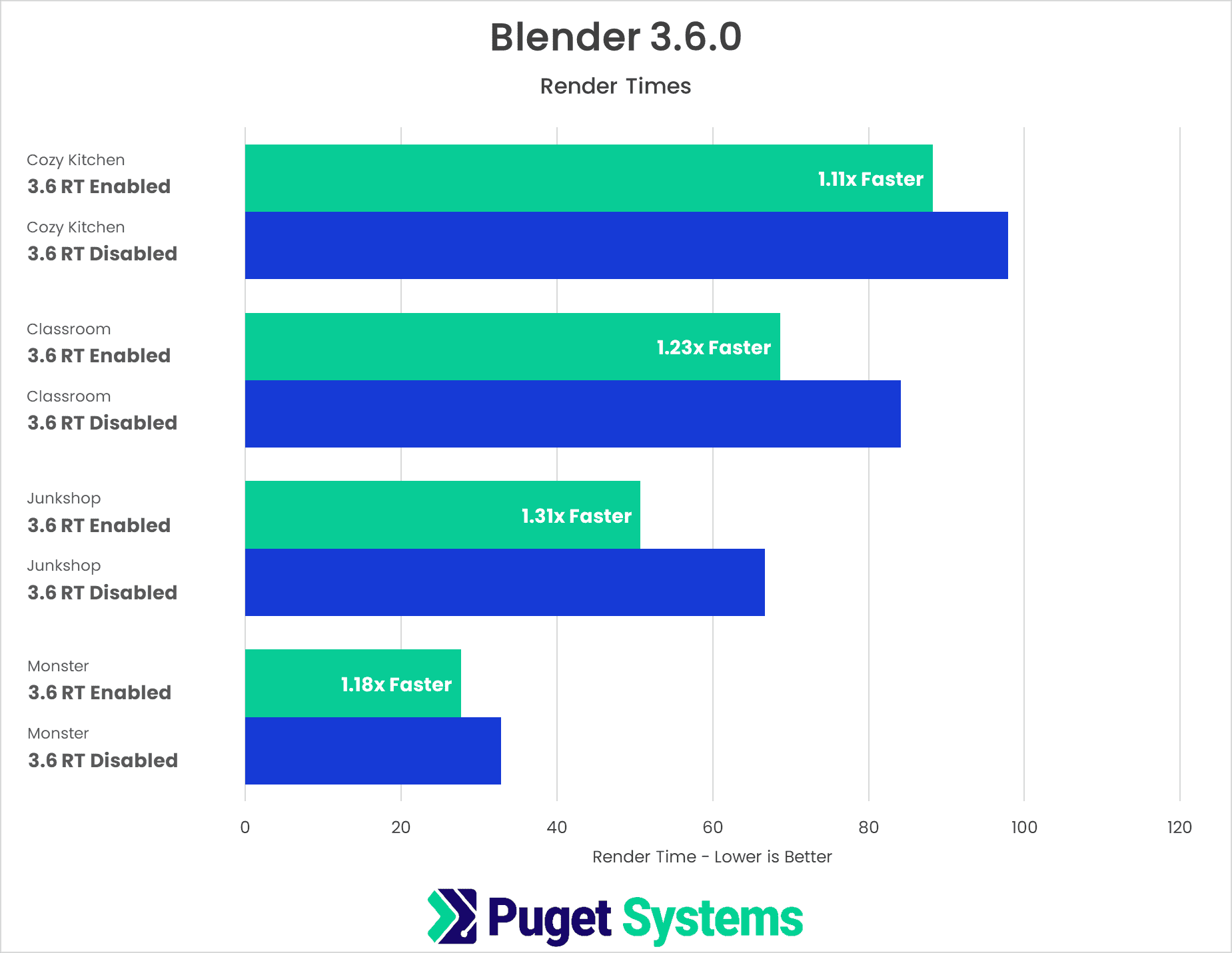

However, that doesn’t mean AMD is necessarily a bad idea for professional rendering, either. In fact, support for AMD and its own RT acceleration has improved recently. Puget Systems benchmarked the addition of AMD’s RT acceleration in Blender 3.6.0 on an RX 7900 XTX in September 2023, and found that it greatly increased the speed of Blender renders on AMD GPUs, much as hardware RT acceleration does on Nvidia GPUs.

Image Credit: Puget Systems

This doesn’t mean that all of AMD’s problems are solved, though: Nvidia is still far and away the market leader in terms of raw 3D render performance, whether that’s ray traced or not. However, support for AMD’s HIP and HIP-RT functionalities being added to Blender and other major 3D applications does bode well for the future of AMD graphics cards in this space.

Overall, you can go with either AMD or Nvidia…but Nvidia is likely to provide the best experience with the least headaches, especially if you do a lot of 3D rendering.

Do You Need RTX In a 3D Rendering GPU?

Since we’ve narrowed down Nvidia as the best overall choice for a 3D rendering GPU ahead of time, it’s also worth speaking about whether or not you need an RTX GPU specifically.

Worth noting: Nvidia’s current-gen production of non-RTX GPUs has vastly diminished over time, even in the mainstream entry-level GPU space. Thus, your options in GPU horsepower will be fairly limited when buying non-RTX GPUs unless you’re buying used top-of-the-line cards from yesteryear, like the Titan V.

That said, do you necessarily need RTX in a 3D rendering GPU? After all, “RTX” is just Nvidia’s term for graphics cards equipped with onboard RT cores, alongside AI-dedicated Tensor cores. If you don’t need these things in your 3D rendering workloads, you might be able to save money by opting for a non-RTX GPU, but it’s worth noting that RT/Tensor cores can be used to boost various tasks, including video rendering. The entry point to RTX is also getting cheaper every day, especially on the used market.

Overall, I can’t really recommend a non-ray tracing GPU to a 3D rendering PC builder today. The price barrier to real-time ray tracing hardware is lower than ever, and the acceleration that hardware gives in mainstream applications is too good to reasonably pass up.

Even AMD can do ray tracing in 3D rendering applications these days, so this isn’t necessarily an Nvidia-exclusive answer. I would still opt for Nvidia until AMD significantly improves its ray tracing performance, though.

What You Need From a Video Editing GPU

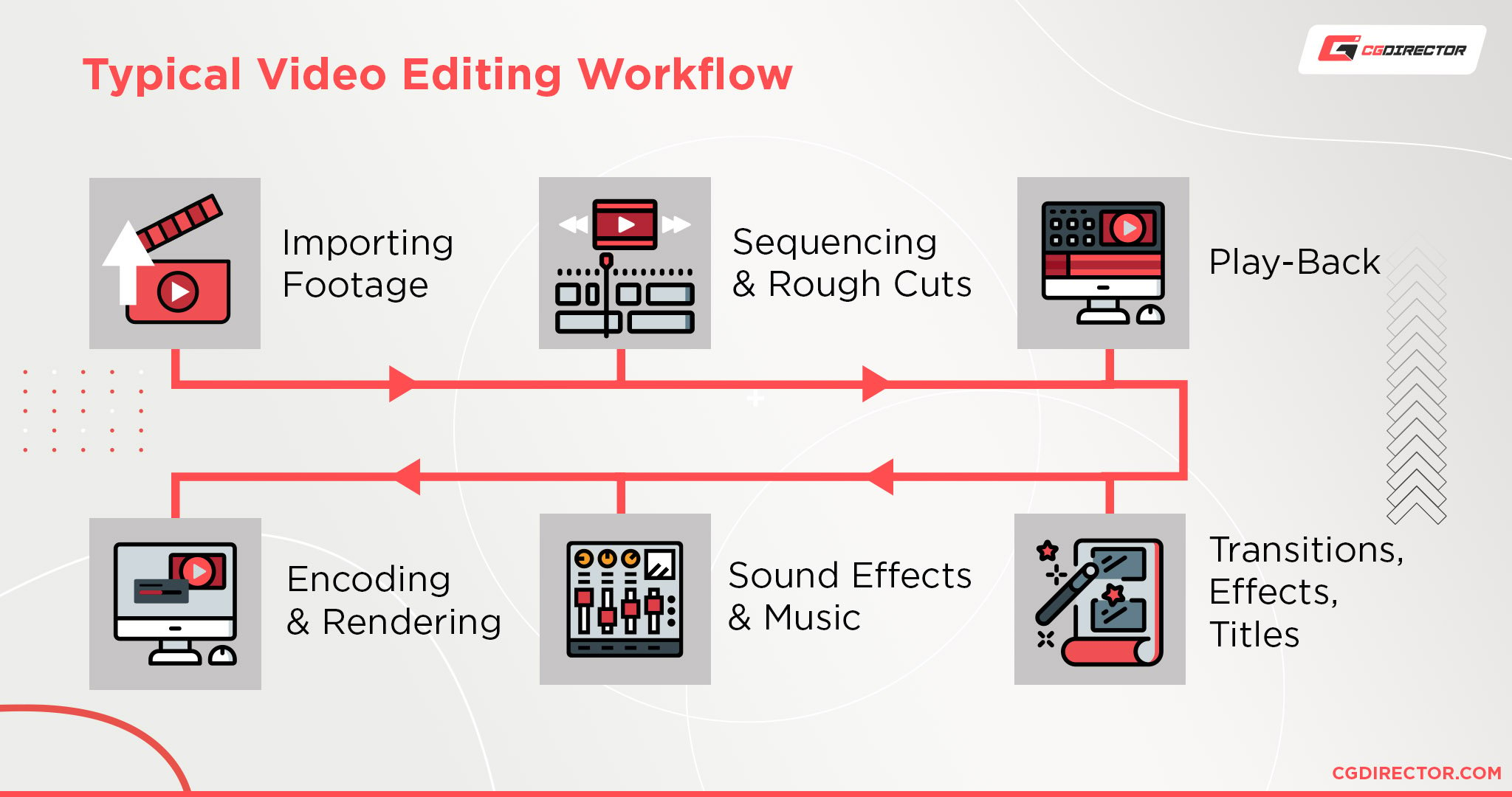

A Video Editor’s typical editing workflow

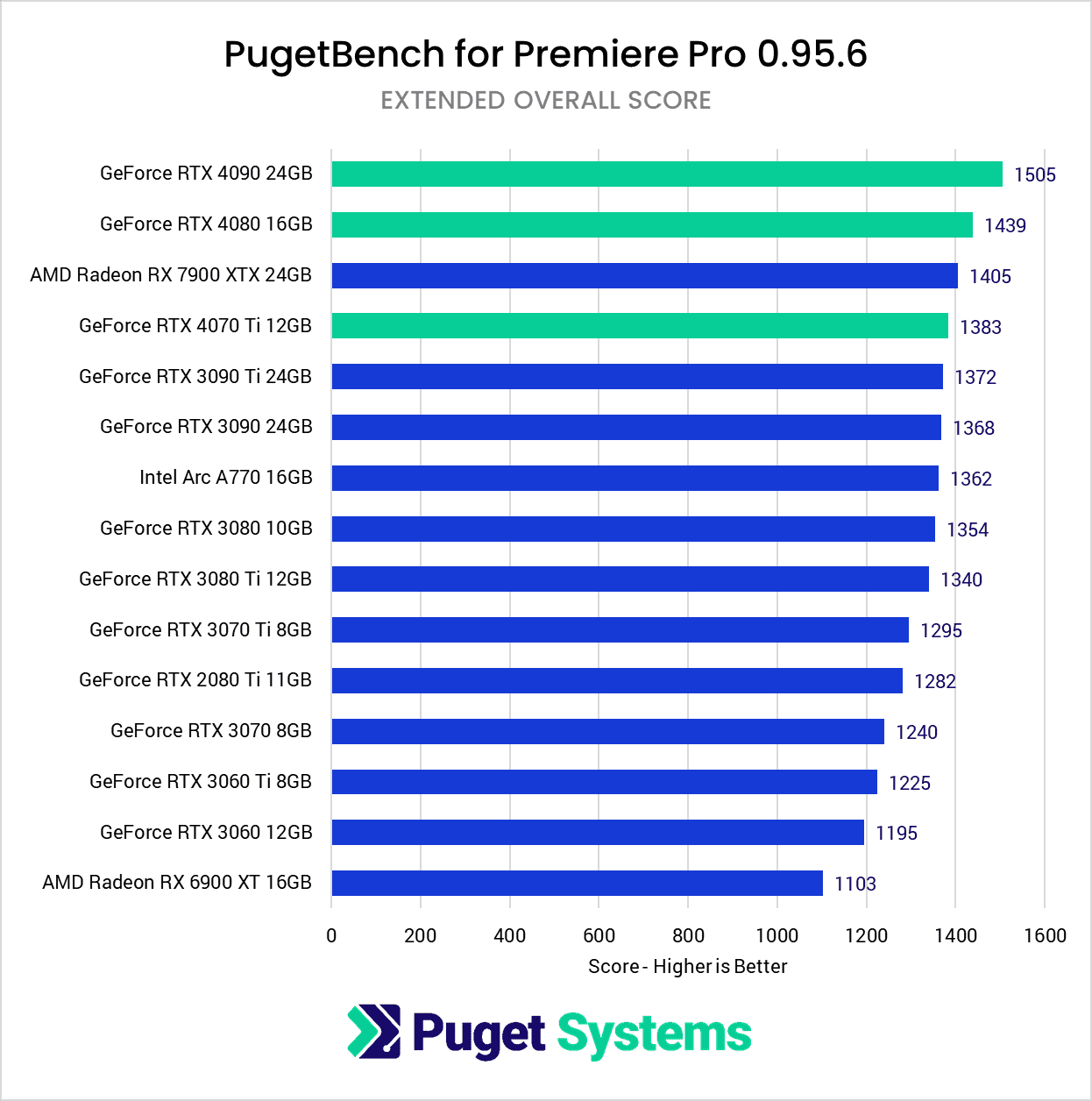

So, good news and bad news regarding GPUs for video editing: they can have an impact, but typically your GPU won’t be your highest-impact spec in a video editing-focused PC. This is because video editing is most demanding on your CPU and RAM before the rest of your system, though of course GPU acceleration works well in leading video editing applications. In particular, graphics cards with a lot of VRAM to spare will do well in video editing workloads, whether they come from AMD or Nvidia. Below, I’ve embedded benchmarking from Puget Systems showing the current top Premiere Pro GPUs, but you can really do most video editing with an entry-level GPU.

The main benefits of a GPU in a video editing workflow are seen in GPU-accelerated video previewing and, of course, GPU-accelerated video rendering. Depending on how you choose to balance your render settings and the rest of your PC (for example, many prefer to do video rendering on the CPU for maximum quality, even if it takes longer), a good GPU can become a boost to your video editing process, rendering process, or both.

Besides GPUs, the other most important components for a video editing PC are your CPU, your RAM, and (ideally) SSD storage.

What You Need From a 3D Rendering GPU

As with video editing, 3D rendering is a task that relies on plenty of storage, RAM, and multi-core CPU power. However, raw GPU power is also good to have in 3D rendering workloads, since GPU hardware acceleration is so widely supported by render engines. A GPU capable of complex and fast 3D renders will generally be more expensive than a GPU capable of just video editing, since entry-level GPUs have been fast enough for video editing for ages.

A good 3D rendering experience will be most reliant on high GPU and CPU power before the usual suspects of RAM, VRAM, and storage speed begin creeping into the mix. CPU and GPU will mostly operate as expected, but once your scene reaches a certain level of scale or complexity, performance will chug.

To avoid performance issues in a 3D GPU, try to keep in mind what specifications matter most for this workload. High VRAM, GPU compute, and CPU compute will all contribute to final render performance and 3D viewport performance. Having lots of RAM, and fast storage for a faster paging file whenever you run out of RAM, will also help your system manage larger projects without slowing down.
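To get a feel for why VRAM capacity matters, it helps to do the arithmetic for raw frame data. The sketch below is a rough estimate only: it assumes uncompressed RGBA frames stored as 32-bit floats and reserves a quarter of VRAM for everything else (both are my assumptions, not figures from any particular application). Real editors and render engines cache, compress, and tile data in their own ways, so treat the result as an order-of-magnitude guide.

```python
def frame_bytes(width, height, channels=4, bytes_per_channel=4):
    """Size of one uncompressed frame (default: RGBA, 32-bit float per channel)."""
    return width * height * channels * bytes_per_channel


def frames_that_fit(vram_gib, width, height, reserve_fraction=0.25, **frame_kwargs):
    """How many uncompressed frames fit in VRAM after reserving a share for other data.

    reserve_fraction is an assumed allowance for the OS, the application,
    and other buffers; adjust it to taste.
    """
    usable_bytes = vram_gib * 1024**3 * (1 - reserve_fraction)
    return int(usable_bytes // frame_bytes(width, height, **frame_kwargs))


# One 4K UHD frame at RGBA float32 is roughly 127 MiB.
uhd_frame_mib = frame_bytes(3840, 2160) / 1024**2

# Compare an 8 GB card with a 24 GB card under the same assumptions.
small_card = frames_that_fit(8, 3840, 2160)
large_card = frames_that_fit(24, 3840, 2160)
```

Under these assumptions an 8 GB card holds a few dozen uncompressed 4K frames while a 24 GB card holds about three times as many, which is one reason larger scenes and higher-resolution timelines favor high-VRAM cards.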

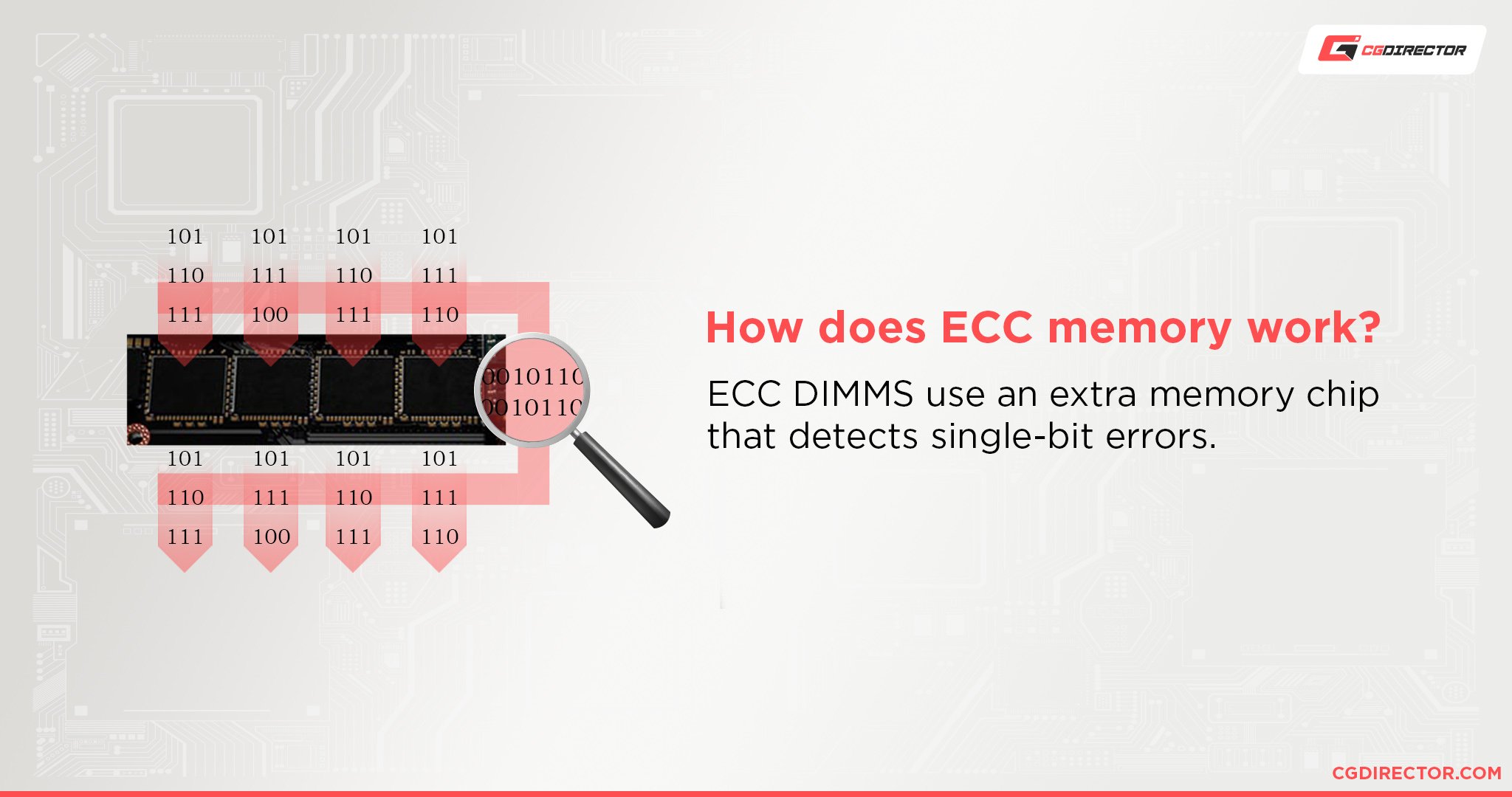

Whether or Not You Need ECC

ECC refers to Error Correcting Code Memory.

ECC memory detects and corrects data errors that naturally occur over the course of long-term, high-intensity workloads.

These errors are what cause seemingly-random events like data corruption or system failure, and must be avoided at all costs when fragile enough data is being dealt with.

That’s why ECC is most commonly used in servers and enterprise PCs: to prevent these errors from occurring when they would cause the worst damage.

In GPUs, ECC is exclusive to professional GPUs from Nvidia and AMD.

Most consumers and creators, however, who are not integrated into an enterprise workflow, can safely ignore ECC.
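To make "detects and corrects" more concrete, here is a toy illustration using the classic Hamming(7,4) code, which protects 4 data bits with 3 parity bits and can repair any single flipped bit. This is a teaching sketch only: ECC memory on real GPUs is implemented in hardware and uses wider codes, so none of the names below correspond to an actual driver or vendor API.

```python
def encode(data_bits):
    """Encode 4 data bits into a 7-bit Hamming codeword (positions 1..7)."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def decode(codeword):
    """Return (data_bits, error_position); error_position is 0 if no error was seen."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_position = s1 + 2 * s2 + 4 * s4  # syndrome points at the bad bit
    if error_position:
        c[error_position - 1] ^= 1  # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]], error_position


# Simulate a single bit flip in "memory" and recover the original data.
original = [1, 0, 1, 1]
stored = encode(original)
stored[4] ^= 1  # corrupt position 5
recovered, where = decode(stored)
```

After the simulated flip, `recovered` matches `original` and `where` identifies the position that was corrupted. Without the parity bits, the same flip would silently change the stored value.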

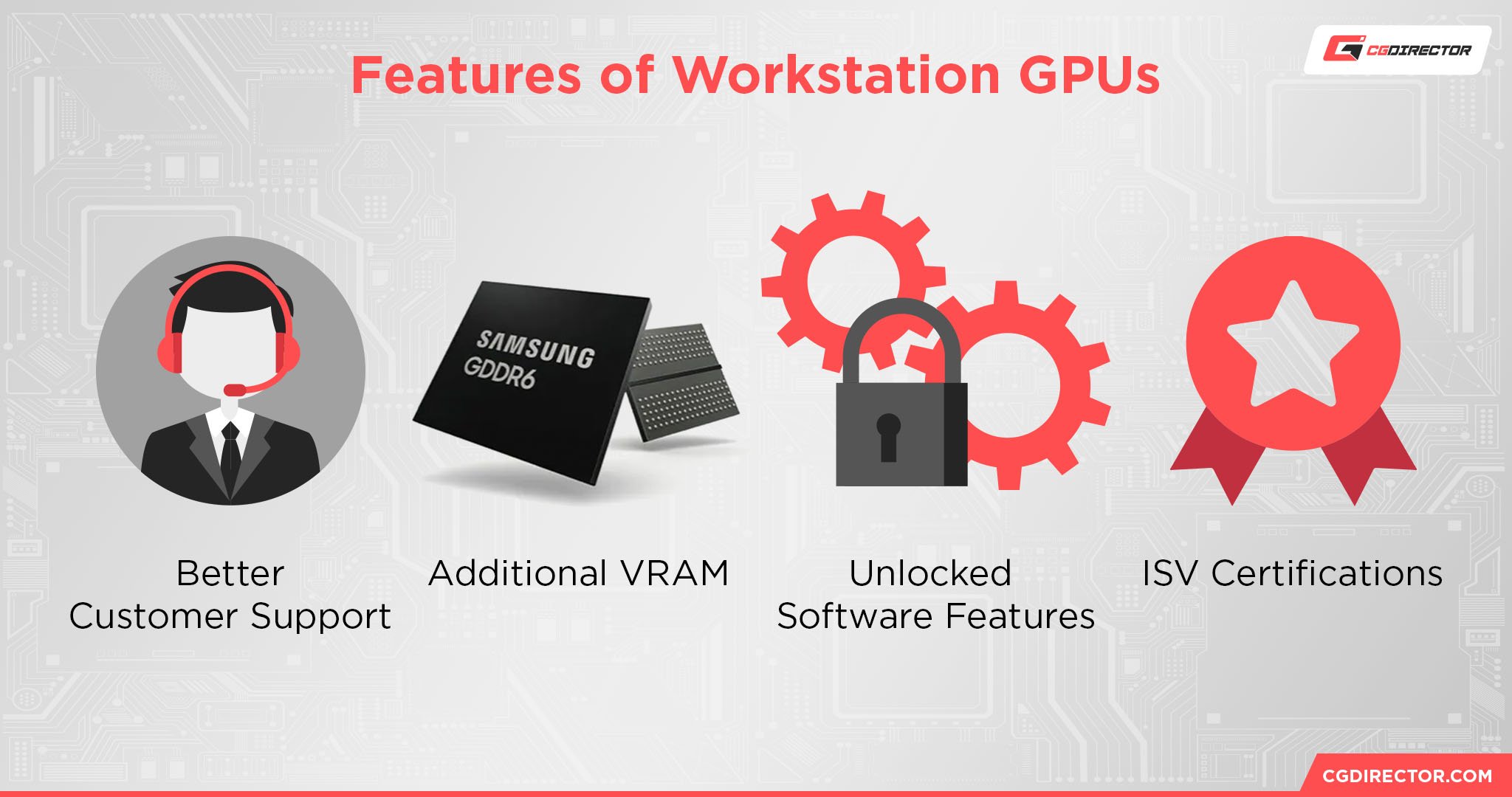

The Difference Between a Workstation GPU and a Mainstream GPU

One of the biggest differences between workstation GPUs and mainstream GPUs boils down to customer support. Many application features and a higher degree of customer support in general are both locked behind ownership of a workstation GPU from AMD or Nvidia (Radeon Pro, formerly FirePro, and Quadro, respectively). Many software vendors will only provide support and maintenance to businesses that are using PCs with proper workstation GPUs, and this is critical info for enterprise customers who need near-24/7 uptime.

This may sound arbitrary, but think of it from the vendors’ perspective! What’s more effective: applying one support guy for every dozen troubled mainstream users, or applying a support staff exclusively to business customers who will most likely communicate through just one IT guy at a time? For a vendor like Solidworks, that choice is obvious (officially): business customers (enterprise GPUs) only.

Unfortunately, in terms of hardware…workstation GPUs aren’t actually that different at all. Some features that are locked behind workstation GPU branding, like RealView in Solidworks, can even be unlocked on mainstream GPUs with the correct tweaks applied, allowing them to flex their raw GPU compute. However, it’s generally recommended to just opt for a workstation GPU anyway, especially since they also tend to come with much more VRAM when you’re buying in the proper workstation GPU price range.

Overall, CGDirector recommends Workstation GPUs to users who:

- Can write off the high prices as a business expense.

- Can make use of ECC, higher amounts of VRAM, higher floating-point precision, higher monitor bit depth, etc.

- Need special software features only supported on Workstation GPUs (in Solidworks, AutoCAD, etc).

- Regularly rely on the software vendor’s maintenance and support.

- Need their hardware to be thoroughly tested for durability and stability in enterprise or server environments, even at 24/7 uptime.

Meanwhile, CGDirector recommends Mainstream GPUs to users who:

- Don’t make use of features only supported on Workstation GPUs

- Want more bang for their buck

- Don’t necessarily need high amounts of VRAM or ECC

- Don’t rely on regular software support from their Application’s Vendor

- Might also want to game every now and then

Whether or Not You Need Dual GPU

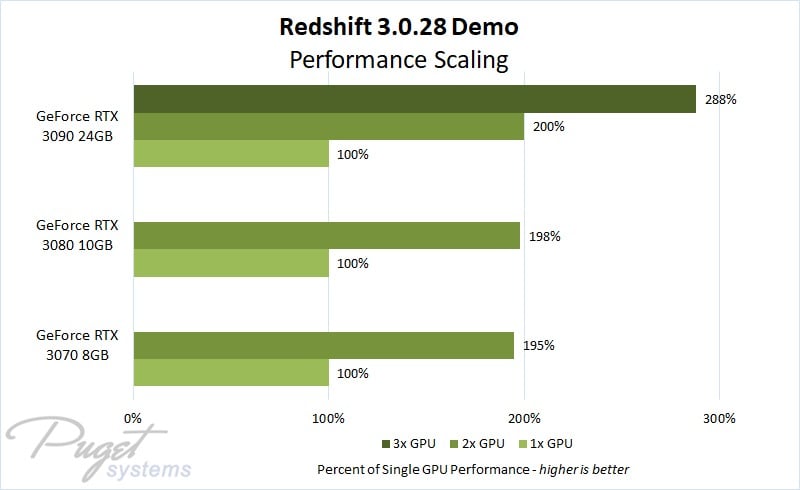

Image Credit: Puget Systems

Generally speaking, using two or more GPUs will provide close to a 2x, 3x, etc. performance increase in 3D rendering workloads. We’ve included some multi-GPU results in our collection of GPU rendering benchmarks, if you want some examples of this. This is because, unlike real-time gaming, 3D renders and similar tasks are easily distributed across multiple processing cores. CPU core count and RAM capacity are also important to scale up for video editing and 3D rendering workloads, of course.
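If you want to check how close to that ideal your own multi-GPU setup gets, the arithmetic is simple. The sketch below uses this article's single-card OctaneBench score for the RTX 4060 Ti (410); the dual-card score of 790 is a hypothetical placeholder rather than a measured result, and the 95% default efficiency is likewise just an assumption for illustration.

```python
def scaling_efficiency(single_score, multi_score, gpu_count):
    """Fraction of ideal linear scaling achieved (1.0 means perfect scaling)."""
    return multi_score / (single_score * gpu_count)


def projected_score(single_score, gpu_count, efficiency=0.95):
    """Estimate a multi-GPU score from one card's score and an assumed efficiency."""
    return single_score * gpu_count * efficiency


single = 410          # RTX 4060 Ti OctaneBench score quoted in this article
dual_measured = 790   # hypothetical dual-card result, for illustration only

efficiency = scaling_efficiency(single, dual_measured, gpu_count=2)
estimate_for_three = projected_score(single, gpu_count=3)
```

An efficiency close to 1.0 means the second card is pulling nearly its full weight. Substitute your own benchmark results for the placeholder values to see how your setup compares.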

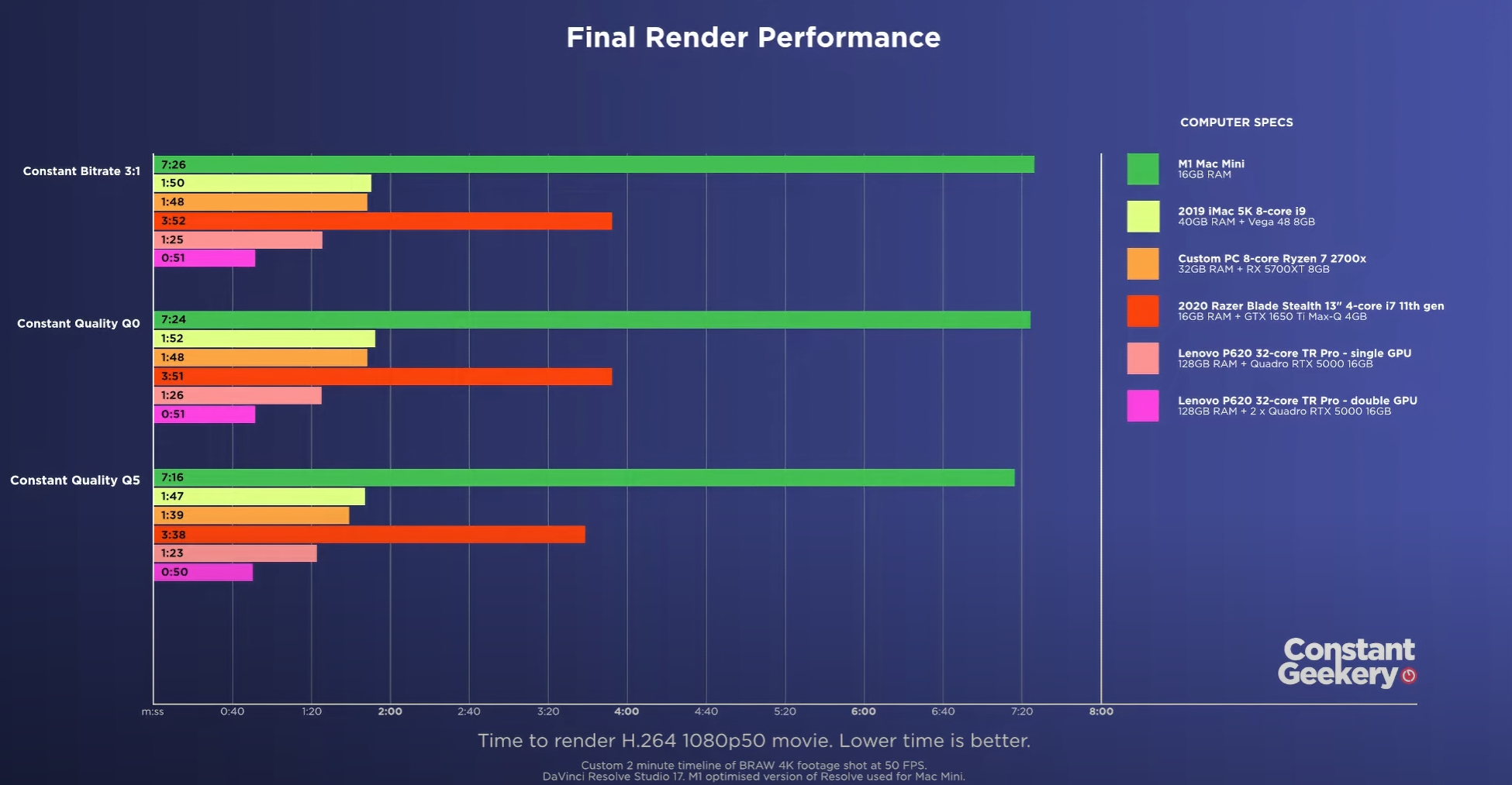

Image Credit: Constant Geekery on YouTube

Unfortunately, video editing doesn’t benefit as much from two or more GPUs, at least not in terms of render times or timeline performance. As highlighted in the render time benchmarking posted above (note the multi-GPU benchmark), some improvements can still be found in software like DaVinci Resolve, but performance won’t necessarily scale with the number of GPUs. Video rendering performance can sometimes improve with multiple GPUs, but editing timeline performance almost never will.

If the benefits of multi-GPU sound appealing to you, good! Be sure to double check that your motherboard actually supports multi-GPU configurations, though, especially if you’re on a mainstream platform instead of using a dedicated workstation or server motherboard.

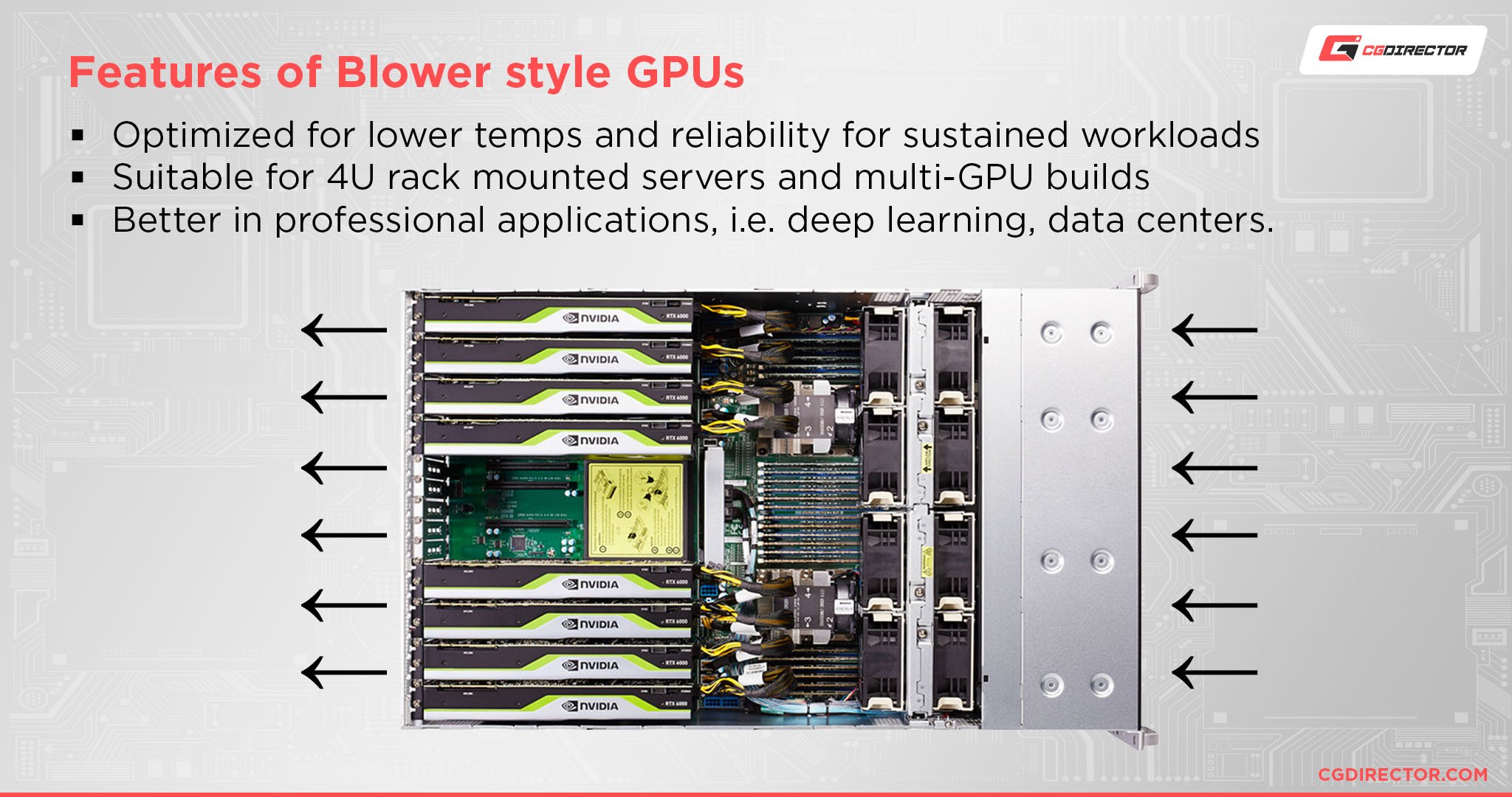

If you happen to need 2 or more GPUs in your setup, the favorability of blower-style GPUs (including many Pro GPUs, if they’re suited to you) increases significantly.

If you want a blower-style GPU without paying the Quadro premium, though, you may need to stick to AMD GPUs or used past-gen Nvidia GPUs, since Nvidia has seemingly banned blower-style cards from their mainstream GPU series as of March 2021, reports Tom’s Hardware.

How Your GPU Performs In 3D Rendering Benchmarks

Another thing you may want to gauge is how your new GPU is expected to perform in 3D rendering benchmarks. This is both so you can verify that you’re getting the intended performance after purchasing and so you can compare the benefits of a new GPU to any current GPU you may already be using for rendering. Benchmarking tools and the database of results that come with them are an invaluable resource to any prospective PC builder, upgrader, or buyer.

Below, I’ve compiled a list of regularly-updated GPU benchmarking articles to help you stay up-to-date with editing & rendering GPU power scaling:

Recommended GPU Benchmarks:

- OctaneBench GPU Benchmark Results — OctaneBench is one of the most popular GPU rendering benchmarks on the market, and has superb support for multi-GPU testing as well. The ultimate test for gauging performance in GPU-scalable applications.

- Redshift GPU Benchmark Results — Redshift is a fully GPU-accelerated render engine from Maxon, and a popular target for GPU benchmarking. Redshift also recently added support for AMD GPUs as well, though it used to be exclusive to Nvidia cards and is still best on them.

- Cinebench GPU Benchmark Results — Cinebench has long stood as one of the most popular sources of CPU and GPU benchmarking on the ‘Net. To get a solid idea of how your GPU should fare in 3D rendering and GPU-accelerated video editing tasks, Cinebench is a great choice. Cinebench is also a Maxon application, so its GPU benching is similar to Redshift.

- V-RAY GPU Benchmark Results — V-Ray is a rendering engine (and plugin) that specializes in realistic global illumination computations. It also doubles as a popular target for CPU and GPU benchmarking, which is kind of funny since it comes from a company called “Chaos Group”.

Best Graphics Card For Editing & Rendering: Our Picks

Below, I’ve narrowed down our best picks for video editing and 3D rendering GPUs to six. I’ve elected to include three picks each for Workstation and Mainstream GPUs (Value, Mid-Range, and High-End), and you’ll note that my picks for Workstation GPUs start with modern Quadro RTX cards, not older non-RTX Quadro cards. This means entry-level Editing & Rendering for Quadro RTX will be at a considerably higher price point than GeForce RTX, which is important to keep in mind.

For workstation and enterprise users most pressed on budget who are sure they don’t need RT hardware, consider the ~$220 Quadro M4000 for its generous 8GB VRAM. Otherwise, I highly recommend continuing to my other Workstation or Mainstream GPU picks below.

If you have a specific budget and needs not addressed by these picks, you can also comment below whenever you read this article for help picking out a GPU!

#1 – Best Value Editing & Rendering GPU: GeForce RTX 4060 Ti Gigabyte Eagle Windforce Edition

Image-Credit: Gigabyte

Specs:

- CUDA Cores — 4352

- Tensor Cores — 136

- RT Cores — 34

- VRAM — 8GB GDDR6 clocked at 18 Gigabits per second.

- GPU Clock — 2535 MHz

- Cooler Type — Slim Dual Slot Triple Fan design. GPU length is 272 mm.

- GPU Warranty Length — Gigabyte GPU Warranty is 4 Years.

- Estimated Price Range — $399 or less

- Other Features — Heatsink grille opening in backplate behind third GPU fan could help cooling performance, especially in a multi-GPU setup. Unfortunately, I couldn’t find a 4060 Ti blower card, but a proper blower-style 4060 Ti may also be preferable for multi-GPU setups…though you’ll still want powerful case fans for the exhaust it creates.

- OctaneBench Score — 410, nearly twice that of the RTX 2060 Super, which was previously the leading value pick in this article. Should be more than enough horsepower for video editing up to 4K, and serves as a great entry-point for 3D rendering with CUDA and OptiX support.

#2 – Best Mid-Range Editing & Rendering GPU: GeForce RTX 4070 Ti ASUS ProArt Edition

Image-Credit: ASUS

Specs:

- CUDA Cores — 7680

- Tensor Cores — 240

- RT Cores — 60

- VRAM — 12GB GDDR6X

- GPU Clock — 2730 MHz up to 2760 MHz Boost

- Cooler Type — 2.5 Slot GPU Cooler, Triple-Fan. GPU length is 300 mm.

- GPU Warranty Length — ASUS GPU Warranty is 3 Years.

- Estimated Price Range — $850 or less

- Other Features — Includes GPU holder and screwdriver.

- OctaneBench Score — 706. This is even higher than our previous High-End pick, the 3090, which has a score of only 661! Overkill for video editing, and more than enough for modern 3D rendering workloads.

#3 – Best High-End Editing & Rendering GPU: GeForce RTX 4090 GALAX Serious Gaming Edition

Image-Credit: GALAX

Specs:

- CUDA Cores — 16,384

- Tensor Cores — 512

- RT Cores — 128

- VRAM — 24 GB GDDR6X

- GPU Clock — Up to 2580 MHz before 1-Click OC

- Cooler Type — 3.5-slot GPU cooler with triple 102mm fans for extra airflow. GPU length is 352 mm with bracket and 336 mm without it.

- GPU Warranty Length — Galax GPU Warranty is 3 Years.

- Estimated Price Range — $1900 or less (MSRP is $1599, but prices/demand are very high for high-end Nvidia cards at initial time of writing)

- Other Features — One-click Overclocking functionality goes up to 2595 MHz.

- OctaneBench Score — 1272, which is better than 4 RTX 2080 Supers and about twice as powerful as the RTX 3090, the previous high-end mainstream pick.

#4 – Best Entry-Level Pro Editing & Rendering GPU: Nvidia Quadro RTX A2000 Stock Edition

Image-Credit: Nvidia

Specs:

- CUDA Cores — 3328

- Tensor Cores — 104

- RT Cores — 26

- VRAM — 6GB GDDR6

- GPU Clock — Unlisted

- Cooler Type — Blower-Style.

- GPU Warranty Length — Nvidia GPU Warranty is 3 Years.

- Estimated Price Range — Under $500

- Other Features — Quadro features and support.

- OctaneBench Score — 234. The lowest on this list by far, but performance is on par with that of the RTX 2060 Super while still getting the benefits of Quadro RTX.

#5 – Best Value Pro Editing & Rendering GPU: Nvidia Quadro RTX A4500 Ada Gen PNY

Image-Credit: Nvidia

Specs:

- CUDA Cores — 6144

- Tensor Cores — 192

- RT Cores — 48

- VRAM — 20 GB GDDR6

- GPU Clock — Unlisted

- Cooler Type — Blower-Style.

- GPU Warranty Length — PNY GPU Warranty is 3 Years

- Estimated Price Range — Under $1600, though MSRP is $1250.

- Other Features — Quadro features and support.

- OctaneBench Score — Untested on OctaneBench, but scores 2X the Quadro RTX A2000 in other benchmarks, including Nvidia’s own benchmarking.

#6 – Best Pro Editing & Rendering GPU: Nvidia Quadro RTX 6000 Ada Gen PNY Edition

Image-Credit: Nvidia

Specs:

- CUDA Cores — 18,176

- Tensor Cores — 568

- RT Cores — 142

- VRAM — 48 GB GDDR6

- GPU Clock — Unlisted

- Cooler Type — Blower-Style.

- GPU Warranty Length — PNY GPU Warranty is 3 Years

- Estimated Price Range — Up to $7700 (MSRP is $6800, severe price inflation in effect for high-VRAM GPUs like this at time of writing).

- Other Features — Quadro features and support.

- OctaneBench Score — 1117. On par with a 4090 in pure render performance, but with much more VRAM to utilize.
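To compare the picks above on value rather than raw speed, you can divide each card's OctaneBench score by its price. The sketch below uses only the scores and upper-bound prices quoted in this article; pick #5 is left out because it has no OctaneBench score listed. Street prices move constantly, so treat the ranking as a snapshot under those assumptions rather than a verdict.

```python
# (name, OctaneBench score, upper-bound price in USD) as quoted in this article
PICKS = [
    ("GeForce RTX 4060 Ti", 410, 399),
    ("GeForce RTX 4070 Ti", 706, 850),
    ("GeForce RTX 4090", 1272, 1900),
    ("Quadro RTX A2000", 234, 500),
    ("Quadro RTX 6000 Ada Gen", 1117, 7700),
]


def rank_by_value(picks):
    """Sort cards by OctaneBench points per dollar, best value first."""
    scored = [(name, score / price) for name, score, price in picks]
    return sorted(scored, key=lambda item: item[1], reverse=True)


for name, points_per_dollar in rank_by_value(PICKS):
    print(f"{name}: {points_per_dollar:.2f} OctaneBench points per dollar")
```

Points per dollar ignores VRAM, ECC, and vendor support, which are the main reasons to pay workstation prices in the first place, so use it alongside the feature lists above instead of in place of them.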

Parting Words and a Note on Used GPUs for Video Editing and Rendering

And that’s it!

I hope this article and the recommendations given to you above have helped you find the best video editing & rendering GPU for your needs.

Before closing things off entirely, though, I do want to take a moment to talk about why you may want to consider the used GPU market for an editing/rendering GPU. The first and most obvious reason is to save money, since mainstream and workstation GPUs alike go for much less money on the used market. But another reason is actually to get cheaper access to blower-style mainstream Nvidia GPUs (which are only available in pre-RTX 30 generations), or cheaper access to workstation GPUs in general.

As long as you’re sure to look out for the warning signs, a used GPU can be a perfectly suitable choice for video editing and rendering.

With all that covered, though, we’re finally done here! If you have any other questions, please feel encouraged to ask them in the comments section below. Alternatively, you can try the CGDirector Forum or Discord server if you want to engage with me and the rest of the CGDirector Team and Community.

Until then or until next time, though, happy computing! And good luck with your editing and rendering projects.

![What is the Best GPU for Video Editing and Rendering? [Updated]](https://www.cgdirector.com/wp-content/uploads/media/2019/12/BestGPUForEditingAndRendering-Twitter_1200x675-1200x675.jpg)

39 Comments

11 April, 2022

Thanks for the article. After months of research and hundreds of experiments, I still haven’t come to any meaningful conclusions. I’m dealing with video conversion with Handbrake or FFMpeg, trying my best to reduce the size of movies of a few hours and maintain the quality at the same time. I tried many cards as it is important in terms of FPS conversion time acquired.

GTX 1070

RTX 3060

RTX 3060TI

RTX 3080

RTX 3060TI

RTX 2070 Super

RTX A5000

But I was a little shocked to see that they all have the same FPS when converting video. I thought the problem was from the hard disk, I used SSD and M2, and I caught the same fps values again. I thought my processor was the bottleneck, I used AMD 5950x and still got the same fps values. No matter what I did, I could never increase the FPS. When I looked at the GPU values, Video Engine was used 100% on all cards.

I don’t have any problems with the budget, I’m just looking for the fastest way to convert my videos. For example, I’m converting a 1920×1080 movie with 400 FPS and it takes about 7 minutes. Yes, that’s a good score, but what’s stopping me from doing it in less time? What criteria should I pay attention to in a GPU? Also, can chip models make a difference in terms of image quality and compression when converting video? I would be grateful if you could give me any advice.

Thanks

12 April, 2022

Hey Bilge,

What codec are you encoding with?

Cheers,

Alex

12 April, 2022

I am using h264. Also, 128GB of RAM