Graphics Card (GPU) based render engines such as Redshift, Octane, or VRAY have matured quite a bit and are overtaking CPU-based Render-Engines – both in popularity and speed.

But what hardware gives the best-bang-for-the-buck, and what do you have to keep in mind when building your GPU-Workstation compared to a CPU Rendering Workstation?

Building an all-round 3D Modeling and CPU Rendering Workstation can be somewhat straightforward, but optimizing GPU Rendering performance is a whole other story.

So, what are the most affordable and best PC-Parts for rendering with Octane, Redshift, VRAY, or other GPU Render Engines?

Let’s take a look:

Best Hardware for GPU Rendering

Processor

Since GPU-Render Engines use the GPU(s) to render, technically, you should go for a max-core-clock CPU like the Intel i9 12900K or the AMD Ryzen 9 5950X, which clocks at 3.4 GHz (4.9 GHz Turbo).

At first glance, this makes sense because the CPU does help speed up some parts of the rendering process, such as scene preparation.

That said, though, there is another factor to consider when choosing a CPU: PCIe-Lanes.

GPUs are connected to the CPU via PCIe-Lanes on the motherboard. Different CPUs support a different number of PCIe-Lanes.

Top-tier GPUs usually need 16x PCIe 3.0 Lanes to run at full performance without bandwidth throttling.

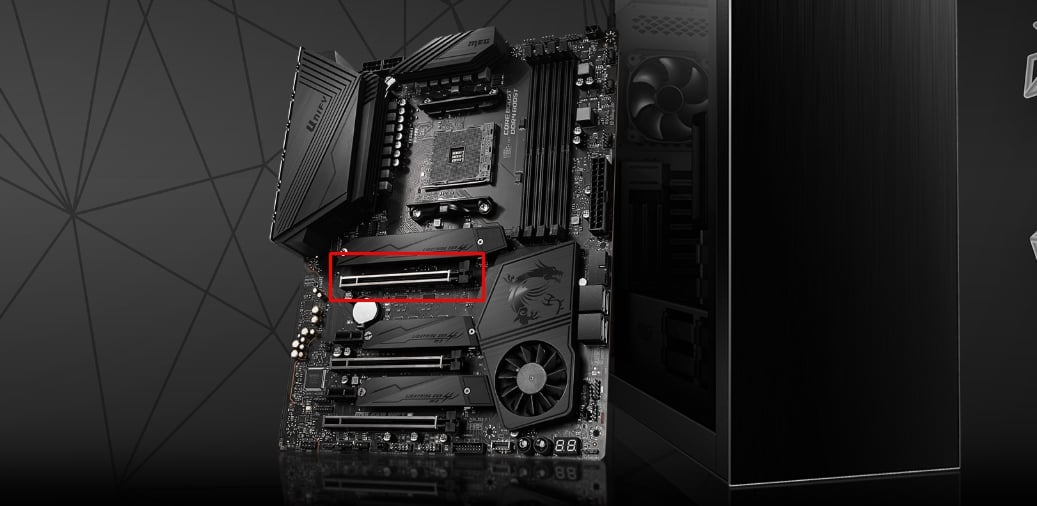

Image-Credit: MSI, Unify x570 Motherboard – A typical PCIe x16 Slot

Mainstream CPUs such as the i9 12900K/5950X have 16 GPU<->CPU PCIe-Lanes, meaning you could use only one GPU at full speed with these kinds of CPUs.

If you want to use more than one GPU at full speed, you would need a different CPU that supports more PCIe-Lanes.

AMD’s Threadripper CPUs, for example, are great for driving lots of GPUs.

They have 64 PCIe-Lanes (e.g., the AMD Threadripper 2950X or Threadripper 3960X).

GPUs, though, can also run in lower bandwidth modes such as 8x PCIe 3.0 (or 4.0) Speeds.

This also means they use up fewer PCIe-Lanes (namely 8x). Usually, there is a negligible difference in Rendering Speed when having current-gen GPUs run in 8x mode instead of 16x mode.
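
To put the x8 versus x16 modes into numbers, here is a rough sketch that computes the theoretical one-way bandwidth of a PCIe link. It assumes the standard per-lane transfer rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) with 128b/130b encoding; the function name `link_bandwidth` is made up for this illustration, and real-world throughput will be lower than these theoretical figures.

```python
# Approximate usable bandwidth per lane in GB/s (one direction).
# Assumption: PCIe 3.0 runs at 8 GT/s and PCIe 4.0 at 16 GT/s per lane,
# both with 128b/130b encoding; dividing by 8 converts bits to bytes.
GB_PER_SECOND_PER_LANE = {
    "3.0": 8 * (128 / 130) / 8,
    "4.0": 16 * (128 / 130) / 8,
}


def link_bandwidth(generation: str, lanes: int) -> float:
    """Theoretical one-way bandwidth of a PCIe link in GB/s."""
    return GB_PER_SECOND_PER_LANE[generation] * lanes


for gen, lanes in [("3.0", 16), ("3.0", 8), ("4.0", 16), ("4.0", 8)]:
    print(f"PCIe {gen} x{lanes}: {link_bandwidth(gen, lanes):.2f} GB/s")
```

Under these assumptions, a PCIe 4.0 x8 link offers the same theoretical bandwidth as a PCIe 3.0 x16 link, which is one reason x8 mode costs so little on current platforms.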

At x8 PCIe Bandwidths, you could run two GPUs on an i9 10900K, or Ryzen 9 5950X. (For a total of 16 PCIe Lanes, given the Motherboard and Chipset supports DUAL GPUs and has sufficient PCIe Slots)

You could theoretically run 4 GPUs in x16 Mode on a Threadripper CPU (= 64 PCIe Lanes). Unfortunately, this is not supported, and the best you can achieve with Threadripper CPUs is a x16, x8, x16, x8 Configuration.
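
The lane arithmetic above can be sketched as a simple budget check. This is only the CPU-side math: the helper names `lanes_required` and `fits` are invented for this example, and whether a given configuration actually works still depends on how the Motherboard wires its slots.

```python
def lanes_required(link_widths):
    """Total CPU PCIe lanes occupied by a set of GPU links (e.g. [16, 8])."""
    return sum(link_widths)


def fits(cpu_lanes, link_widths):
    """True if the CPU has enough PCIe lanes for the given GPU link widths."""
    return lanes_required(link_widths) <= cpu_lanes


# 16-lane mainstream CPU
print(fits(16, [16]))        # one GPU at x16
print(fits(16, [16, 16]))    # two GPUs at x16
print(fits(16, [8, 8]))      # two GPUs at x8

# 64-lane Threadripper with the x16, x8, x16, x8 layout
print(lanes_required([16, 8, 16, 8]), "lanes used of 64")
```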

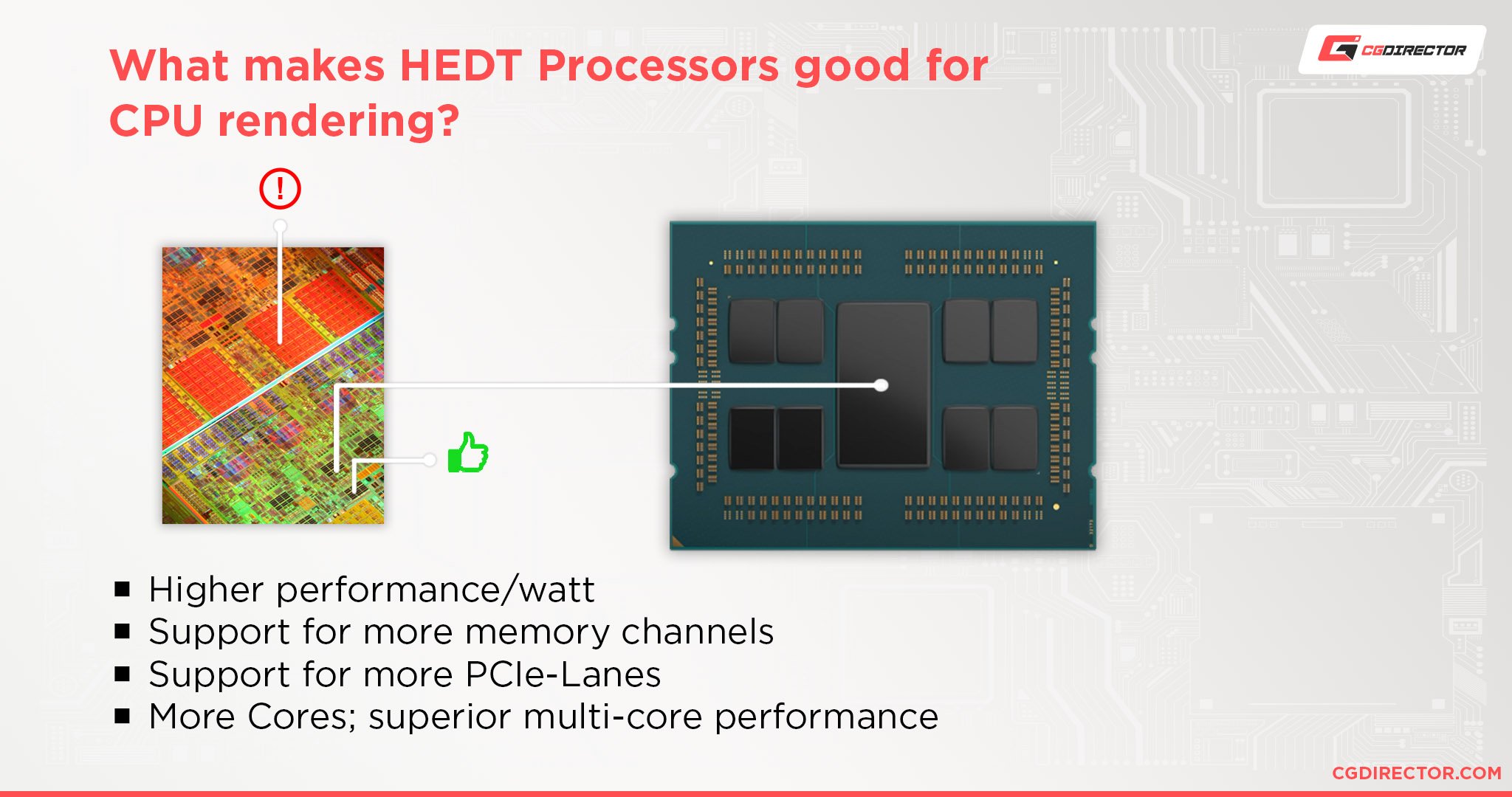

CPUs with a high number of PCIe-Lanes usually fall into the HEDT (= High-End-Desk-Top) Platform range and are often great for CPU Rendering as well, as they tend to have more cores and, therefore, higher multi-core performance.

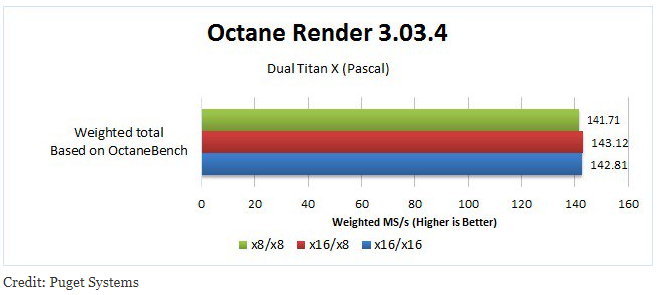

Here’s a quick bandwidth comparison between having two Titan X GPUs run in x8/x8, x16/x8 and x16/x16 mode. The differences are within the margin of error.

Beware, though, that the Titan Xs in this benchmark certainly don’t saturate an x8 PCIe 3.0 bus, and the benchmark scene fits into the GPUs’ VRAM easily, meaning there is not much communication going on over the PCIe-Lanes.

When actively rendering and your scene fits nicely into the GPU’s VRAM (find out how much VRAM you need here), the speed of GPU Render Engines is dependent on GPU performance.

Some processes, though, that happen before and during rendering rely heavily on the performance of the CPU, Storage, and (possibly) your network.

For example, extracting and preparing Mesh Data to be used by the GPU, loading high-quality textures from your Storage, and preparing the scene data.

These processing stages can take considerable time in very complex scenes and will bottleneck the overall rendering performance if a low-end CPU, Disk, or RAM is used.

If your scene is too large to fit into your GPU’s memory, the GPU Render Engine will need to access your System’s RAM or even swap to disk, which will slow down the rendering considerably.

Best Memory (RAM) for GPU Rendering

Different kinds of RAM won’t speed up your GPU Rendering all that much. You do have to make sure that you have enough RAM, though, or else your System will slow to a crawl.

Image-Source: Corsair

Keep the following rules in mind to optimize for performance as much as possible:

- To be safe, your RAM size should be at least 1.5 – 2x your combined VRAM size

- Your CPU can benefit from higher Memory Clocks which can in turn slightly speed up the GPU rendering

- Your CPU can benefit from more Memory Channels on certain Systems which in turn can slightly speed up your GPU rendering

- Look for lower Latency RAM (e.g. CL14 is better than CL16) which can benefit your CPU’s performance and can therefore also speed up your GPU rendering slightly
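
As a quick illustration of the first rule in the list above, the following sketch turns "1.5 – 2x your combined VRAM" into a RAM range. The function name `recommended_ram_gb` is hypothetical, and the result is a rule-of-thumb range rather than a hard requirement.

```python
def recommended_ram_gb(vram_per_gpu_gb, gpu_count):
    """Return (low, high) system RAM in GB: 1.5x to 2x the combined VRAM."""
    combined_vram = vram_per_gpu_gb * gpu_count
    return 1.5 * combined_vram, 2.0 * combined_vram


# Example: two RTX 3090s with 24GB VRAM each
low, high = recommended_ram_gb(24, 2)
print(f"Combined VRAM: {24 * 2}GB, suggested RAM: {low:.0f}GB to {high:.0f}GB")
```

In practice you would round the result up to the next kit size you can actually buy.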

Take a look at our RAM (Memory) Guide here, which should get you up to speed.

If you just need a quick recommendation, look into Corsair Vengeance Memory, as we have tested these Modules in a lot of GPU Rendering systems and can recommend them without hesitation.

Best Graphics Card for GPU Rendering

Finally, the GPU:

To use Octane and Redshift you will need a GPU that has CUDA-Cores, meaning you will need an NVIDIA GPU.

Some versions of VRAY used to additionally support OpenCL, meaning you could use an AMD GPU, but this is no longer the case.

If you are using other Render Engines, be sure to check compatibility here.

The best NVIDIA GPUs for Rendering are:

- RTX 3060 Ti (4864 CUDA Cores, 8GB VRAM)

- RTX 3070 (5888 CUDA Cores, 8GB VRAM)

- RTX 3070 Ti (6144 CUDA Cores, 8GB VRAM)

- RTX 3080 (8704 CUDA Cores, 10GB VRAM)

- RTX 3080 Ti (10240 CUDA Cores, 12GB VRAM)

- RTX 3090 (10496 CUDA Cores, 24GB VRAM)

Image-Source: Nvidia

Although some Quadro GPUs offer even more VRAM, the value of these “Pro”-level GPUs is worse for GPU rendering compared to mainstream or “Gaming” GPUs.

There are some features such as ECC VRAM, higher Floating Point precision, or official Support and Drivers that make them valuable in the eyes of enterprise, Machine-learning, or CAD users, to name a few.

For your GPU Rendering needs, stick to mainstream RTX GPUs for the best value.

GPU Cooling

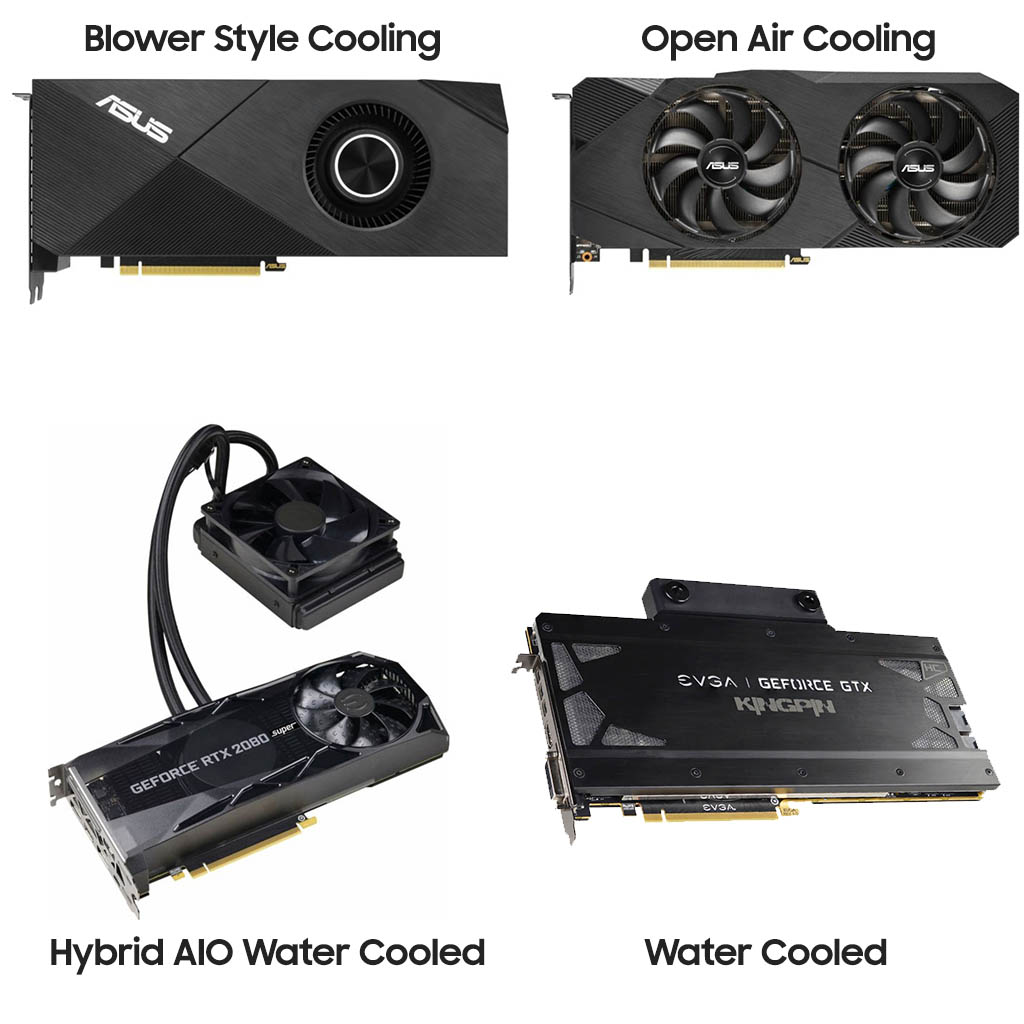

Blower Style Cooler (Recommended for Multi-GPU setups)

- PRO: Better Cooling when closely stacking more than one card (heat is blown out of the case)

- CON: Louder than Open-Air Cooling

Open-Air Cooling (Recommended for single GPU Setups)

- PRO: Quieter than Blower Style, Cheaper, more models available

- CON: Bad Cooling when stacking cards (heat stays in the case)

Hybrid AiO Cooling (All-in-One Watercooling Loop with Fans)

- PRO: Best All-In-One Cooling for stacking cards

- CON: More Expensive, needs room for radiators in Case

Full Custom Watercooling

- PRO: Best temps when stacking cards, Quiet, some cards only use single slot height

- CON: Needs lots of extra room in the case for tank and radiators, Much more expensive

NVIDIA GPUs have a Boosting Technology that automatically overclocks your GPU to a certain degree, as long as it stays within a predefined temperature and power limit.

So making sure your GPUs stay as cool as possible will allow them to boost longer and therefore improve performance.

You can observe this effect, especially in Laptops, where there is little room for cooling, and the GPUs tend to get very hot and loud and throttle very early. So if you are thinking of Rendering on a Laptop, keep this in mind.

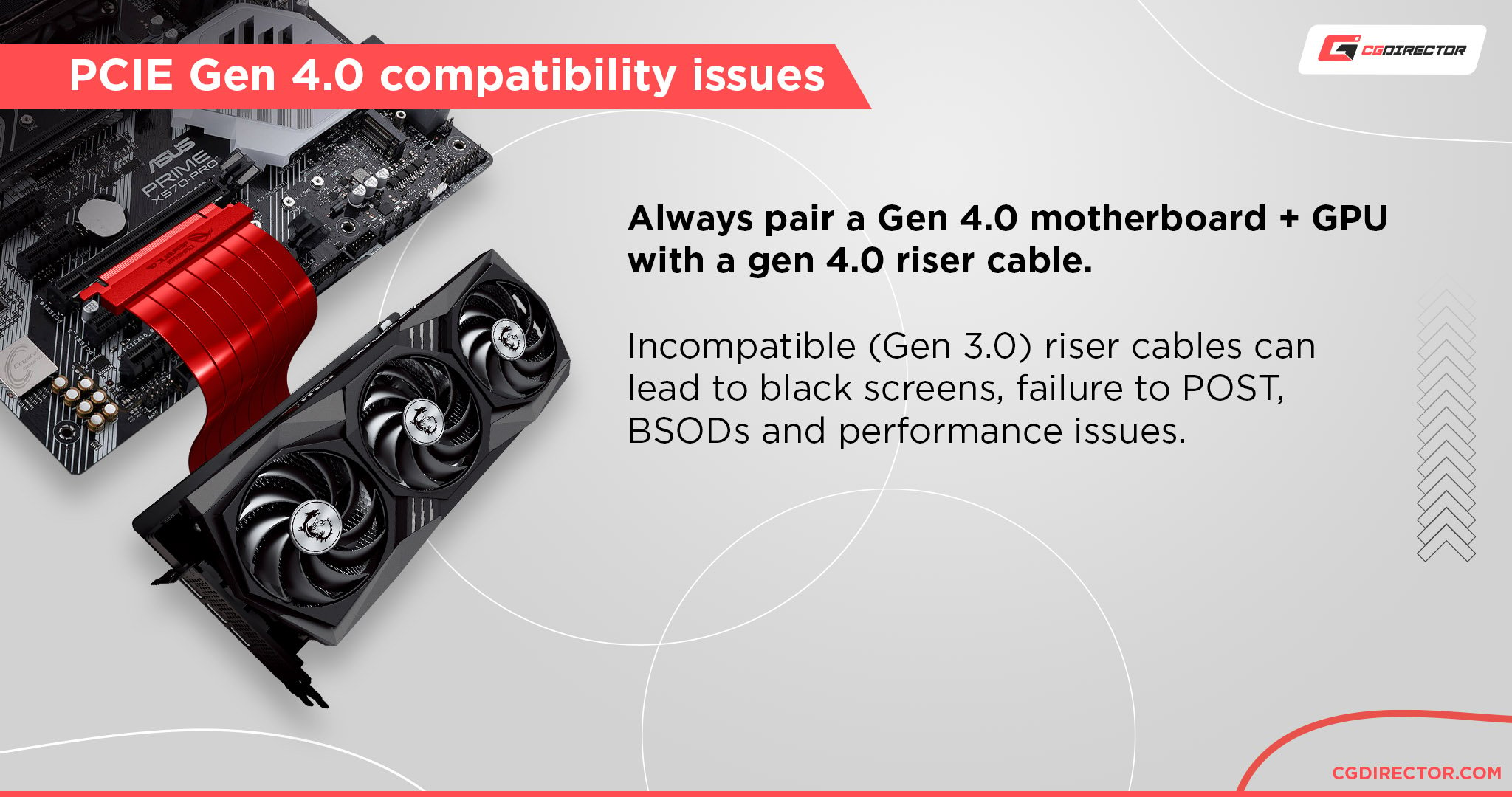

A quick note on Riser Cables: With PCIe- or Riser-Cables, you can place your GPUs further away from the PCIe-Slot of your Motherboard, either to show off your GPU vertically in front of the Case’s tempered glass side panel, or because you have some space constraints that you are trying to solve (e.g. the GPUs don’t fit).

If this is you, take a look at our Guide on finding the right Riser-Cables for your needs.

Power Supply

Be sure to get a strong enough Power supply for your system. Most GPUs have a typical Power Draw of around 180-250W, though the Nvidia RTX 3080 and 3090 GPUs can draw even more.

I recommend at least 650W for a Single-GPU-Build. Add 250W for every additional GPU that you have in your System.
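
The wattage rule of thumb above can be written as a one-line formula. This is a minimal sketch of that rule only; the function name `minimum_psu_watts` is invented for this example, and a proper wattage calculator that accounts for your exact components remains the safer choice.

```python
def minimum_psu_watts(gpu_count, base_watts=650, per_extra_gpu_watts=250):
    """Rule of thumb: 650W for one GPU plus 250W for each additional GPU."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return base_watts + per_extra_gpu_watts * (gpu_count - 1)


for count in (1, 2, 4):
    print(f"{count} GPU(s): at least {minimum_psu_watts(count)}W")
```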

Good PSU manufacturers to look out for are Corsair, be quiet!, Seasonic, and Cooler Master, but you might prefer others.

Image-Credit: Corsair

Use this Wattage-Calculator here to calculate how strong your PSU will have to be by selecting your planned components.

Motherboard & PCIe-Lanes

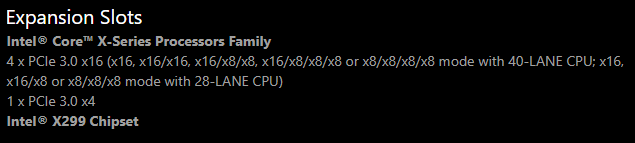

Make sure the Motherboard has the desired amount of PCIe-Lanes and does not share Lanes with SATA or M.2 slots.

Be careful what PCIe Configurations the Motherboard supports. Some have 3 or 4 physical PCIe Slots but only support one x16 PCIe Card (electrical speed).

This can get quite confusing.

Check the Motherboard manufacturer’s Website to be sure the Multi-GPU configuration you are aiming for is supported.

Here is what you should be looking for in the Motherboard specifications:

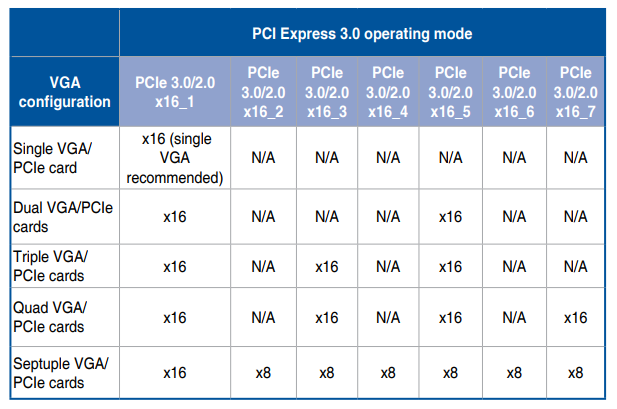

Image-Source: Asus

In the above example, you would be able to use (with a 40 PCIe Lane CPU) 1 GPU in x16 mode, OR 2 GPUs both in x16 mode, OR 3 GPUs, one in x16 mode and two in x8 mode, and so on. Beware that 28-PCIe-Lane CPUs in this example would support different GPU configurations than the 40-Lane CPU.

Currently, the AMD Threadripper CPUs will give you 64 PCIe Lanes to hook your GPUs up to. If you want more, you will have to go the Epyc or Xeon route.

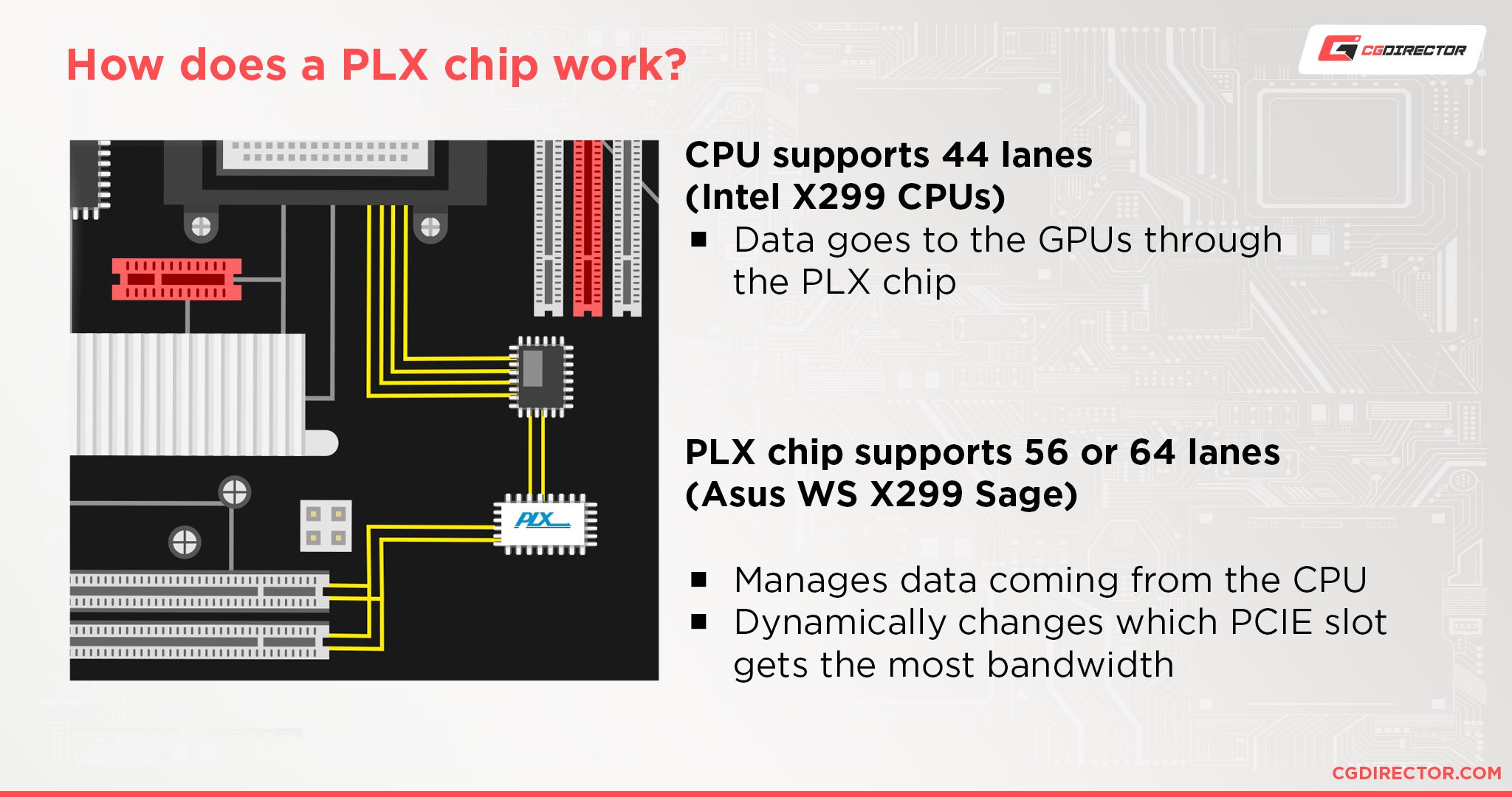

To confuse things even more, some Motherboards do offer four x16 GPUs (needs 64 PCIe-Lanes) on CPUs with only 44 PCIe Lanes. How is this even possible?

Enter PLX Chips.

On some motherboards, these chips serve as a type of switch, managing your PCIe-Lanes and leading the CPU to believe fewer Lanes are being used.

This way, you can use e.g. 32 PCIe-Lanes with a 16 PCIe-Lane CPU or 64 PCIe-Lanes on a 44-Lane CPU.

Beware though, only a few Motherboards have these PLX Chips. The Asus WS X299 Sage is one of them, allowing up to 7 GPUs to be used at 8x speed with a 44-Lane CPU, or even 4 x16 GPUs on a 44 Lanes CPU.

This screenshot of the Asus WS X299 Sage Manual clearly states what type of GPU-Configurations are supported (Always check the manual before buying expensive stuff):

Image-Source: Asus Mainboard Manual

PCIe-Lane Conclusion

For Multi-GPU Setups, having a CPU with lots of PCIe-Lanes is important, unless you have a Motherboard that comes with PLX chips.

Having GPUs run in x8 Mode instead of x16 will only marginally slow down the performance on most GPUs. (Note, though, that PLX Chips won’t increase your GPU bandwidth to the CPU; they just make it possible to have more cards run in higher modes.)

Best GPU Performance / Dollar

OK, so here it is: the lists everyone should be looking at when choosing the right GPU to buy. The best-performing GPU per Dollar!

GPU Benchmark Comparison: Octane

This List is based on OctaneBench 2020.

| GPU Name | VRAM (GB) | OctaneBench Score | Price $ | Performance/Dollar |

|---|---|---|---|---|

| 8x RTX 2080 Ti | 11 | 2733 | 9592 | |

| 2x RTX 4090 | 24 | 2587 | 3198 | |

| 4x RTX 3080 | 10 | 2203 | 2796 | |

| 4x RTX 2080 Ti | 11 | 1433 | 4796 | |

| 1x RTX 4090 | 24 | 1272 | 1599 | |

| 4x RTX 2080 Super | 8 | 1100 | 2880 | |

| 4x RTX 2070 Super | 8 | 1057 | 2200 | |

| 4x RTX 2080 | 8 | 1017 | 3196 | |

| 4x RTX 2060 Super | 8 | 961 | 1260 | |

| 1x RTX 4080 | 16 | 952 | 1199 | |

| 4x GTX 1080 Ti | 11 | 837 | 2800 | |

| 1x RTX 4070 Ti | 12 | 710 | 799 | |

| 1x RTX 4070 SUPER | 12 | 704 | 599 | |

| 2x RTX 2080 Ti | 11 | 693 | 2398 | |

| 1x RTX 3090 Ti | 24 | 692 | 1999 | |

| 1x RTX 3090 | 24 | 661 | 1499 | |

| 1x RTX 3080 Ti | 12 | 648 | 1199 | |

| 1x RTX 4070 | 12 | 641 | 549 | |

| 1x RTX A6000 | 48 | 628 | 5000 | |

| 1x RTX A5000 | 24 | 593 | 2250 | |

| 1x RTX 3080 | 10 | 559 | 699 | |

| 2x RTX 2080 Super | 8 | 541 | 1440 | |

| 2x RTX 2070 Super | 8 | 514 | 1100 | |

| 2x RTX 2060 Super | 8 | 485 | 840 | |

| 2x RTX 2070 | 8 | 482 | 1000 | |

| 1x RTX 3070 Ti | 8 | 454 | 599 | |

| 1x RTX 4060 Ti | 8 | 410 | 399 | |

| 1x RTX 3070 | 8 | 403 | 499 | |

| 2x GTX 1080 Ti | 11 | 382 | 1400 | |

| 1x Quadro RTX 6000 | 24 | 380 | 4400 | |

| 1x RTX 3060 Ti | 8 | 376 | 399 | |

| 1x Quadro RTX 8000 | 48 | 365 | 5670 | |

| 1x RTX Titan | 24 | 361 | 2499 | |

| 1x RTX 2080 Ti | 11 | 355 | 1199 | |

| 1x Titan V | 12 | 332 | 3000 | |

| 1x RTX 3060 | 12 | 289 | 329 | |

| 1x RTX 2080 Super | 8 | 285 | 720 | |

| 1x RTX 2080 | 8 | 261 | 620 | |

| 1x RTX 2070 Super | 8 | 259 | 550 | |

| 1x RTX 2060 Super | 8 | 240 | 420 | |

| 1x Quadro RTX 4000 | 8 | 232 | 950 | |

| 1x RTX 2070 | 8 | 228 | 500 | |

| 1x Quadro RTX 5000 | 16 | 222 | 2100 | |

| 1x GTX 1080 Ti | 11 | 195 | 700 | |

| 1x RTX 2060 (6GB) | 6 | 188 | 360 | |

| 1x RTX 3050 | 4 | 179 | 249 | |

| 1x GTX 980 Ti | 6 | 142 | 300 | |

| 1x GTX 1660 Super | 6 | 134 | 230 | |

| 1x GTX 1660 Ti | 6 | 130 | 280 | |

| 1x GTX 1660 | 6 | 113 | 230 | |

| 1x GTX 980 | 4 | 94 | 200 | |

| 1x RTX 6000 Ada | 48 | 1094 | 6800 | |

| 1x RTX 5000 Ada | 32 | 816 | 4000 | |

Source: Complete OctaneBench Benchmark List
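
Since the Performance/Dollar column in the table above is empty here, this short sketch shows one plausible way to fill it in: divide the OctaneBench score by the price. The scores and prices are copied from a few rows of the table; defining the metric as score per Dollar is an assumption made for this example, not necessarily how the original column was calculated.

```python
# (name, OctaneBench score, price in $) taken from rows of the table above
gpus = [
    ("1x RTX 4090", 1272, 1599),
    ("1x RTX 4070 SUPER", 704, 599),
    ("1x RTX 4070", 641, 549),
    ("1x RTX 3080", 559, 699),
    ("1x RTX 3060", 289, 329),
]


def score_per_dollar(score, price_usd):
    """Assumed metric: benchmark points per Dollar spent (higher is better)."""
    return score / price_usd


# Sort from best to worst value
ranked = sorted(gpus, key=lambda gpu: score_per_dollar(gpu[1], gpu[2]), reverse=True)

for name, score, price in ranked:
    print(f"{name:<20} {score_per_dollar(score, price):.3f} points per $")
```

Keep in mind that a pure value ranking ignores VRAM, so a cheaper card that scores well here may still be the wrong pick for large scenes.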

GPU Benchmark Comparison: Redshift

The Redshift Render Engine has its own Benchmark and here is a List based on the Redshift Benchmark 3.0.26:

| GPU(s) | VRAM (GB) | Time (mm.ss) | Price ($) | Perf / $ |

|---|---|---|---|---|

| 1x Nvidia GTX 1080 Ti | 11 | 08.56 | 300 | |

| 1x Nvidia RTX 3060 | 12 | 05.38 | 350 | |

| 1x Nvidia RTX 2060 SUPER | 8 | 06.31 | 350 | |

| 1x Nvidia RTX 4060 Ti | 8 | 03.30 | 399 | |

| 1x Nvidia RTX 2070 | 8 | 06.28 | 400 | |

| 1x Nvidia RTX 3060 Ti | 8 | 04.26 | 450 | |

| 1x Nvidia RTX 2070 SUPER | 8 | 06.12 | 450 | |

| 1x Nvidia RTX 3070 | 8 | 03.57 | 500 | |

| 1x Nvidia RTX 4070 | 12 | 02.23 | 599 | |

| 1x Nvidia RTX 3070 Ti | 8 | 03.27 | 599 | |

| 1x Nvidia RTX 4070 SUPER | 12 | 02.12 | 599 | |

| 1x Nvidia RTX 2080 | 8 | 06.01 | 600 | |

| 1x Nvidia RTX 2080 SUPER | 8 | 05.47 | 650 | |

| 1x Nvidia RTX 4070 Ti | 12 | 02.08 | 799 | |

| 1x Nvidia RTX 4070 Ti SUPER | 16 | 02.02 | 799 | |

| 1x Nvidia RTX 3080 | 10 | 03.07 | 850 | |

| 1x AMD Radeon 7900 XT | 20 | 03.32 | 899 | |

| 2x Nvidia RTX 2070 SUPER | 8 | 03.03 | 900 | |

| 1x Nvidia RTX 4080 SUPER | 16 | 01.39 | 999 | |

| 1x AMD Radeon 7900 XTX | 24 | 03.07 | 999 | |

| 1x AMD Radeon 6900 XT | 16 | 04.17 | 999 | |

| 1x Nvidia RTX A4000 | 16 | 04.40 | 1000 | |

| 1x Nvidia RTX 4080 | 16 | 01.47 | 1199 | |

| 1x Nvidia RTX 3080 Ti | 12 | 02.44 | 1199 | |

| 2x Nvidia RTX 2080 | 8 | 03.10 | 1200 | |

| 1x Nvidia RTX 2080 Ti | 11 | 04.27 | 1200 | |

| 2x Nvidia RTX 2080 SUPER | 8 | 02.58 | 1300 | |

| 1x Nvidia RTX 3090 | 24 | 02.42 | 1499 | |

| 1x Nvidia RTX 4090 | 24 | 01.16 | 1599 | |

| 4x Nvidia RTX 2070 | 8 | 01.56 | 1600 | |

| 4x Nvidia RTX 2070 SUPER | 8 | 01.42 | 1800 | |

| 1x Nvidia RTX 3090 Ti | 24 | 02.36 | 1999 | |

| 1x Nvidia RTX A5000 | 24 | 03.06 | 2300 | |

| 4x Nvidia RTX 2080 | 8 | 01.36 | 2400 | |

| 2x Nvidia RTX 2080 Ti | 11 | 02.18 | 2400 | |

| 1x AMD Radeon Pro W7800 | 32 | 04.26 | 2499 | |

| 4x Nvidia RTX 2080 SUPER | 8 | 01.32 | 2600 | |

| 1x Nvidia RTX Titan | 24 | 04.16 | 2700 | |

| 2x Nvidia RTX 3090 | 24 | 01.15 | 3000 | |

| 2x Nvidia RTX 4090 | 24 | 00.47 | 3198 | |

| 1x AMD Radeon Pro W7900 | 48 | 03.21 | 3999 | |

| 4x Nvidia RTX 2080 Ti | 11 | 01.07 | 4800 | |

| 1x Nvidia RTX A6000 | 48 | 02.42 | 4800 | |

| 4x Nvidia RTX 3090 | 24 | 00.45 | 6000 | |

| 6x Nvidia RTX 3090 | 24 | 00.31 | 9000 | |

| 8x Nvidia RTX 2080 Ti | 11 | 00.49 | 9600 | |

| 1x Nvidia RTX 6000 Ada | 48 | 01.28 | 10000 | |

| 7x Nvidia RTX 4090 | 24 | 00.18 | 11193 | |

| 8x Nvidia A100 | 40 | 00.36 | 88000 | |

Source: Complete Redshift Benchmark Results List

GPU Benchmark Comparison: VRAY-RT

And here is a List based on VRAY-RT Bench. Note how the GTX 1080 interestingly seems to perform worse than the GTX 1070 in this benchmark:

| GPU Name | VRAM | VRAY-Bench | Price $ MSRP | Performance/Dollar |

|---|---|---|---|---|

| GTX 1070 | 8 | 1:25 min | 400 | 2.941 |

| RTX 2070 | 8 | 1:05 min | 550 | 2.797 |

| GTX 1080 TI | 11 | 1:00 min | 700 | 2.380 |

| 2x GTX 1080 TI | 11 | 0:32 min | 1400 | 2.232 |

| GTX 1080 | 8 | 1:27 min | 550 | 2.089 |

| 4x GTX 1080 TI | 11 | 0:19 min | 2800 | 1.879 |

| TITAN XP | 12 | 0:53 min | 1300 | 1.451 |

| 8x GTX 1080 TI | 11 | 0:16 min | 5600 | 1.116 |

| TITAN V | 12 | 0:41 min | 3000 | 0.813 |

| Quadro P6000 | 24 | 1:04 min | 3849 | 0.405 |

Source: VRAY Benchmark List
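
For a time-based benchmark like this one, lower render times are better, so the value metric has to be inverted. The Performance/Dollar figures in the table above appear to match 100,000 divided by (render time in seconds × price); that formula and its scale factor are inferred from the table's numbers, not stated by the source, so treat this as a sketch. The function names are made up for this example.

```python
def to_seconds(render_time):
    """Convert a 'm:ss' string such as '1:25' into seconds."""
    minutes, seconds = render_time.split(":")
    return int(minutes) * 60 + int(seconds)


def perf_per_dollar(render_time, price_usd, scale=100_000):
    """Inferred metric: scale / (seconds * price). Higher is better."""
    return scale / (to_seconds(render_time) * price_usd)


# Rows taken from the table above: (name, render time, price in $)
for name, render_time, price in [
    ("GTX 1070", "1:25", 400),
    ("RTX 2070", "1:05", 550),
    ("TITAN V", "0:41", 3000),
]:
    print(f"{name:<10} {perf_per_dollar(render_time, price):.3f}")
```

The results agree with the table's column to within rounding in the last digit.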

Speed up your Multi-GPU Rendertimes

Note – This section is quite advanced. Feel free to skip it.

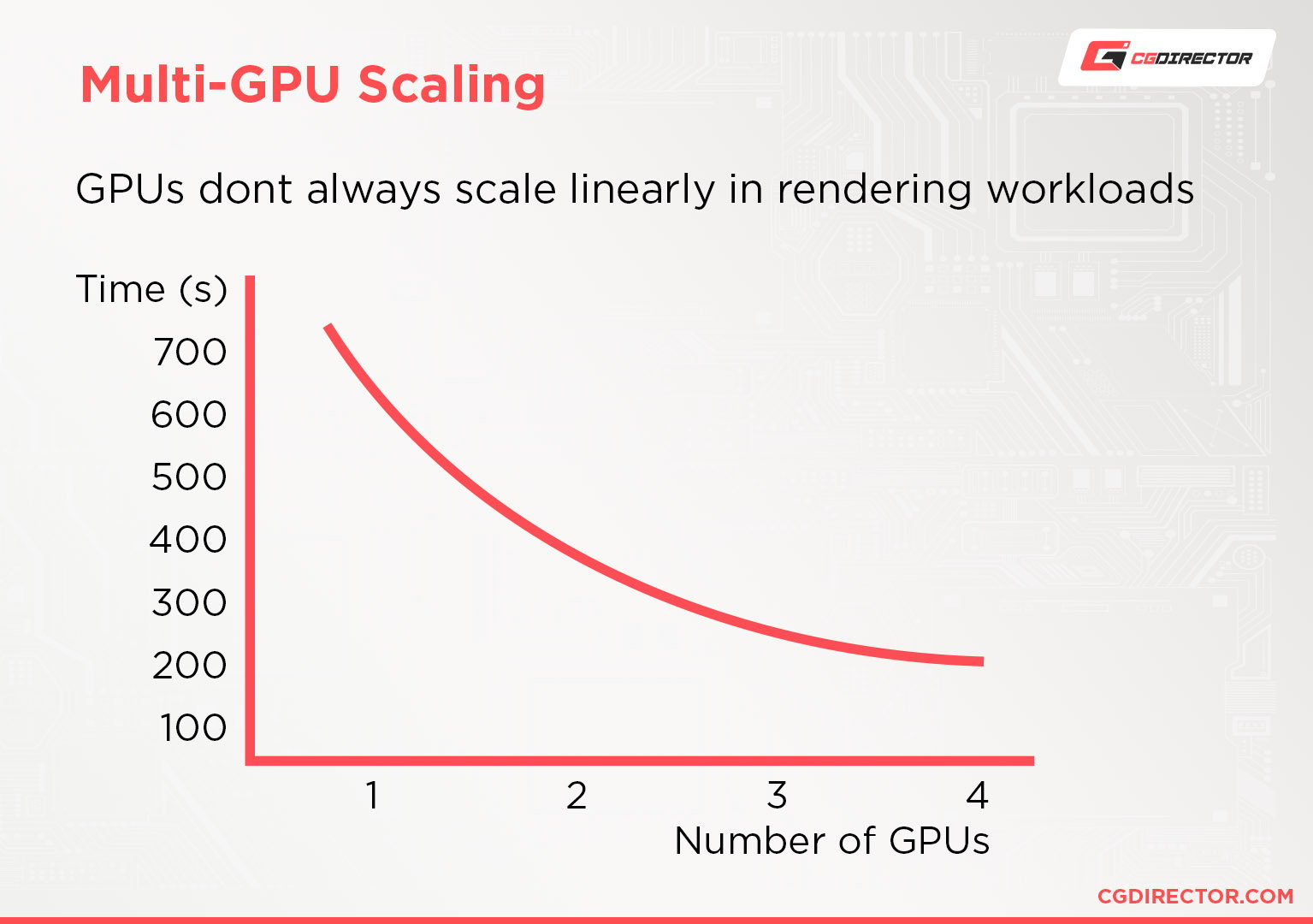

So, unfortunately, GPUs don’t always scale perfectly. 2 GPUs render an Image about 1.9 times faster. Having 4 GPUs will sometimes render about 3.6x faster.

Having multiple GPUs communicate with each other to render the same task costs so much performance that a large part of one GPU in a 4-GPU rig is mainly just there for managing decisions.
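
To make the scaling loss concrete, here is a small sketch using the approximate speedups quoted above (1.9x for 2 GPUs, 3.6x for 4 GPUs). The function names are invented for this example, and the speedup figures are the rough values from the text, not measurements of your own setup.

```python
def scaling_efficiency(gpu_count, speedup):
    """Fraction of ideal (linear) scaling that is actually achieved."""
    return speedup / gpu_count


def gpus_lost_to_overhead(gpu_count, speedup):
    """How many GPUs' worth of performance is spent on multi-GPU overhead."""
    return gpu_count - speedup


for gpu_count, speedup in [(2, 1.9), (4, 3.6)]:
    efficiency = scaling_efficiency(gpu_count, speedup)
    lost = gpus_lost_to_overhead(gpu_count, speedup)
    print(f"{gpu_count} GPUs: {efficiency:.0%} efficiency, "
          f"{lost:.1f} GPUs' worth of overhead")
```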

One solution could be the following: When final rendering image sequences, use as few GPUs as possible per task.

Let’s make an example:

What we usually do in a multi-GPU rig is have all GPUs work on the same task. A single task, in this case, would be an image in our image sequence.

4 GPUs together render one Image and then move on to the next Image in the Image sequence until the entire sequence has been rendered.

We can speed up preparation time per GPU (when the GPUs sit idly, waiting for the CPU to finish preparing the scene) and bypass some of the multi-GPU slow-downs when we have each GPU render on its own task. We can do this by rendering one task per GPU.

So a machine with 4 GPUs would now render 4 tasks (4 images) at once, each on one GPU, instead of 4 GPUs working on the same image, as before.
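
The one-task-per-GPU idea can be sketched as follows. The example splits a frame range across GPUs round-robin and builds a per-process environment that exposes only one GPU via `CUDA_VISIBLE_DEVICES`, an environment variable that CUDA applications generally respect. Whether your particular render engine honors it, and what its command line looks like, are assumptions you would need to check; the helper names are hypothetical, and no renderer is actually launched here.

```python
import os


def assign_frames(frames, gpu_count):
    """Distribute frames round-robin: returns {gpu_index: [frames]}."""
    plan = {gpu: [] for gpu in range(gpu_count)}
    for position, frame in enumerate(frames):
        plan[position % gpu_count].append(frame)
    return plan


def env_for_gpu(gpu_index):
    """Copy of the current environment that exposes a single GPU to CUDA."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_index)
    return env


plan = assign_frames(range(1, 9), gpu_count=4)
for gpu, frames in plan.items():
    print(f"GPU {gpu}: frames {frames}")
    # A real setup would start one render process per GPU here, for example
    # subprocess.Popen(render_command, env=env_for_gpu(gpu)), where
    # render_command is a placeholder for your renderer's own command line.
```

A static split like this is the simplest variant. A Render Manager hands out frames dynamically instead, which copes better when some frames take much longer than others.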

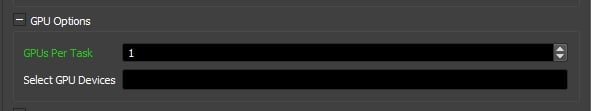

Some 3D-Software might have this feature built-in. If not, it is best to use some kind of Render Manager, such as Thinkbox Deadline (Free for up to 2 Nodes/Computers).

Option to set the amount of GPUs rendering on one task in Thinkbox Deadline

Beware, though, that you might have to increase your System RAM a bit and have a strong CPU, since every GPU-Task needs its own share of RAM and CPU performance.

We’ve put together an in-depth Guide on How to Render faster. You might want to check that out too.

In case you have a Motherboard that’ll let you hook up several GPUs to it, but you don’t have any room to plug them in side-by-side, be sure to check out our PCIe-Riser Cable Guide.

Redshift vs. Octane

Another thing I am often asked is whether one should go with Redshift or Octane.

As I myself have used both extensively, in my experience, thanks to the Shader Graph Editor and the vast Multi-Pass Manager of Redshift, I like to use the Redshift Render Engine more for doing work that needs complex Material Setups and heavy Compositing.

Octane is great if you want results fast, as its learning curve is shallower. But this, of course, is a personal opinion and I would love to hear yours!

GPU Rendering Industry in 2023/2024 and beyond

I’ve had a discussion with a colleague recently on what has changed in GPU rendering over the years and if it still makes sense to go the multi-GPU route today:

Even a relatively slow GPU (like an RTX 4060) outperforms even the fastest CPUs (e.g. 5995WX) in rendering workloads, so going the GPU route makes sense as long as the GPU renderer of your choice is feature-complete for your (production) needs.

That said, with the new generation of RTX GPUs, even a single RTX 4090 is as fast as 4x 2080Ti from a few generations ago.

So, today, it’s become much easier to build a fast GPU renderer that is also a workstation that you can actively work on (fast single-core speed).

And because even high-end GPUs don’t saturate their PCIe bandwidth during rendering, you can also get away with adding two to a PC that runs at x8 PCIe 4.0 Lanes without any noticeable slowdowns in rendering.

You’d be hard-pressed to add more than 2 GPUs to a single PC nowadays anyway, because GPUs have become so thick (3 and even 4 slots wide), AND they don’t come in blower-style cooling configurations anymore, so packing them tightly, as was usual a few years ago, doesn’t work as well. (Nvidia wants us creators to buy Quadros/Pro-level GPUs and not gaming GPUs… which doesn’t make sense given their price tag unless you need the higher amount of VRAM.)

Custom PC-Builder

If you want to get the best parts within your budget, have a look at the PC-Builder Tool.

Select the main purpose that you’ll use the computer for and adjust your budget to create the perfect PC with part recommendations that will fit within your budget.

Answers to frequently asked questions (FAQ)

Is the GPU or CPU more important for rendering?

It depends on the render engine you are using. If you’re using a GPU render engine such as Redshift, Octane, or Cycles GPU, the GPU will be considerably more important for rendering than the CPU.

For CPU render engines, such as Cycles CPU, V-Ray CPU, Arnold CPU, the CPU will be more important.

Interestingly, the CPU also plays a minor role in maximizing GPU render performance. CPUs with high single-core performance are less of a bottleneck for the GPU(s).

Is RTX better than GTX for Rendering?

Yes, Nvidia’s RTX GPUs perform better in GPU Rendering than GTX GPUs. The reason is simple: RTX GPUs both designate higher-tiered and more expensive GPUs that perform better compared to Nvidia’s GTX line-up, and also have Ray-Tracing cores, which can additionally increase render performance in supported engines.

Does more RAM help for rendering?

More RAM will only speed up your rendering if you had too little to begin with. You see, RAM really only bottlenecks performance if it’s full and data has to be swapped to disk.

If you have a simple 3D scene that only needs about 16GB of RAM for rendering, then 32, 64, or 128GB of RAM will do nothing for that particular task. If you only had 8GB of RAM beforehand, then installing more RAM will considerably increase render performance.

Does more VRAM help for rendering?

Similar to RAM, more VRAM only helps increase performance in scenarios where you had too little to begin with.

If your 3D scene fits entirely into your GPU’s VRAM, then having a GPU with more VRAM won’t impact performance at all (given all other specs are the same).

Of course, many GPU render engines might be able to utilize larger ray-trees or other optimizations if there’s more free VRAM, so depending on the render engine, you could see a small speedup with more VRAM even if your scene is simple and easily fits into your GPU(s)’s VRAM.

Over to you

What Hardware do you want to buy? Let me know in the comments! Or ask us anything in our forum 🙂

404 Comments

16 November, 2024

Have you designed any systems optimized for Arnold on 3D Max 2025?

– It seems Arnold can be finicky in terms of hardware configuration.

26 November, 2023

Hi Alex,

I built my PC 3 years ago:

OS: Windows 10 Pro

CPU: AMD Ryzen 7 3800X (8 Core)

Motherboard: Gigabyte X570 Aorus Elite

RAM: DDR4 32GB

PSU: Cooler Master MWE Gold 750 Full Modular

The software I use is After Effects, C4D, and Blender. I want to replace my GTX 1050 Ti GPU,

so which compatible GPU is best for Redshift 3.0 and 3.5 and Octane?

Please advise.

27 November, 2023

Hey Yogesh, the best GPU currently certainly is the Nvidia RTX 4090, but you may cut it close with your PSU and may need an upgrade. If you want to keep your PSU, I can recommend the RTX 4070Ti to be on the safe side.

Alex

28 November, 2023

Which one GPU should I buy ? my budget is $300 to $400

28 November, 2023

The RTX 4060Ti is the one that fits your budget best.

Cheers,

Alex

28 November, 2023

Thanks

20 February, 2023

Hey Alex!

Can two stacked rtx4000 series cards stay cool enough to render without throttling? I’m looking at putting two NVIDIA GeForce RTX 4090s in a case and see they take 3 PCI slots apiece. The case I’m looking at puts them right above the PSU. What magic would keep them from overheating?

Thank you kindly, Alex!

3 March, 2023

Hey Danny,

Take a look at what one of our readers posted on the forum. He built a dual 4090 PC: https://www.cgdirector.com/forum/threads/building-of-aircooled-dual-4090-founders-editions-system.1091/

He also posted a great guide on how he achieved this.

You can certainly do it without too much throttling, yes.

Cheers,

Alex

8 January, 2023

Hey Alex,

I really need your insight.

I’m planning to buy a GPU and the purpose is for animation. (It’s for practice at the moment since I’m a newbie and to be specific, I want to use Blender for modeling and wanted to try basic stop motion animation)

My budget is around $250.

Can you suggest me a basic GPU since I’m practicing for the moment. I will upgrade to much higher end but it will take some time since the price of a high end GPU is not cheap.

I only found rx 6600 and gtx1660super on that price range in my area.

Thank you very much.

8 January, 2023

I’d suggest going with the Nvidia GPU, as Blender and many other render engines work better on Nvidia GPUs (render faster).

The 1660 Super is quite good, especially considering GPU prices are going up with every generation currently.

Cheers,

Alex

9 January, 2023

Thank you very much Alex for your time reading and answering my question.

Regards,

Rj

10 June, 2022

Hi Alex,

First: I love your website. It’s very informative, I’ve already learned a lot! Thanks! 🙂

I’m going to upgrade my cards (2xAsus Turbo1060 6gb… :)), the prices went down a bit. I picked an Asus Turbo 3080 10GB. I had no issues with this type of fan system on the 1060 series but the 3080 plays in a different league in terms of heat and power consumption. I’m going to render a lot in Octane in my upcoming 3D tasks. I’m planning to buy another 3080 later in this year, that’s why I choose a blower style design now. My pc case has a mesh front panel with 3x 120mm fans, but I think I’ll buy 2x Noctua 140mm to give a little support to the cards. Should I worry about the durability of the card? What is an “OK” temp for a card like this during a long render run? (I haven’t got an AC in my apartment, so the room temp is not ideal.)

Thanks!

P.S. Sorry for my English, it’s not my native language.

19 June, 2022

Hey Dave,

Check out our GPU Temp articles here:

Blower Style GPUs are made to run in extreme situations even in multi-gpu stacked setups. I run multiple rendernodes with 4x RTX 3080Tis (Blower) and although they are hot they don’t damage easily. I’ve been running 2080Tis, 2070Supers and 1080Tis for years now, and have never had to return one due to heat damage.

They have throttling mechanisms that take care of heat, so the GPU will slightly downclock so that it doesn’t go over its factory-set max temp.

https://www.cgdirector.com/?s=gpu+temperature

Cheers,

Alex