Walkinghome6

Tech Intern

I'm putting together plans to build a render farm and want to use PCIe riser cables to lift the GPUs up and off the motherboard so I can provide better air cooling, and also to maximize the number of GPUs I can fit in one node. I haven't seen much online about anyone doing this, but I have seen the article linked below that discusses it. I don't want to use liquid cooling. I want to do something similar to a mining rig, but with riser cables that keep the PCIe bandwidth Redshift needs.
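
To put rough numbers on the bandwidth point, here is a small sketch that estimates one-direction PCIe link bandwidth from the per-lane transfer rate and line encoding of each generation. It only accounts for encoding overhead (real throughput is lower once packet headers are included), and the comparison against a x1 link is an assumption about typical mining-style risers, not a measurement of any specific cable or of Redshift itself.

```python
# Approximate one-direction PCIe link bandwidth. Only line-encoding overhead
# is modeled; packet/protocol overhead reduces real throughput further.

# Per-lane transfer rate in GT/s and encoding efficiency for each generation.
PCIE_GENS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Return approximate usable bandwidth in GB/s for one direction."""
    rate_gt_s, efficiency = PCIE_GENS[gen]
    # One bit per transfer per lane; divide by 8 to convert bits to bytes.
    return rate_gt_s * efficiency * lanes / 8


if __name__ == "__main__":
    # x16 Gen 4 (full-width riser) vs. a x1 link, assumed here to run at Gen 2.
    for gen, lanes in [(4, 16), (3, 16), (2, 1)]:
        print(f"PCIe {gen}.0 x{lanes}: {link_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

On these numbers a Gen 4 x16 link has roughly 63 times the raw bandwidth of a Gen 2 x1 link. How much of that a renderer actually uses depends on the scene and workload, so treat it as an upper bound rather than a render-time prediction.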

www.cgdirector.com

How far has anyone taken this? I'm looking at an AMD Threadripper Pro for its 128 PCIe lanes. This is the motherboard I'm currently considering:
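
A quick way to sanity-check the lane count is to add up what each device would request. The sketch below uses the 128-lane figure from the post; the device list (seven x16 GPU slots and two x4 M.2 drives) is a hypothetical allocation loosely based on the board listing, and it ignores lanes a real board may reserve for the chipset or onboard controllers, so the manual's block diagram is the authority on how slots are actually wired.

```python
# Hypothetical PCIe lane budget for a Threadripper Pro build.
# CPU_LANES comes from the post; the device allocation is an assumption.

CPU_LANES = 128  # PCIe lanes quoted for Threadripper Pro

# (device name, number of devices, lanes per device) -- hypothetical values.
devices = [
    ("GPU in x16 slot", 7, 16),
    ("M.2 NVMe SSD", 2, 4),
]


def lanes_used(allocation):
    """Sum the lanes requested by every device in the allocation."""
    return sum(count * lanes for _, count, lanes in allocation)


if __name__ == "__main__":
    used = lanes_used(devices)
    print(f"Requested {used} of {CPU_LANES} lanes; {CPU_LANES - used} left over")
    if used > CPU_LANES:
        # How a board handles oversubscription depends on its design.
        print("Over budget: some slots would likely run at reduced width")
```

Under these assumptions all seven GPUs fit at full x16 width with a few lanes to spare, which is the main reason Threadripper Pro is attractive for a dense single-node GPU build.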

www.newegg.com

And looking at this mining case:

www.newegg.com

Thanks

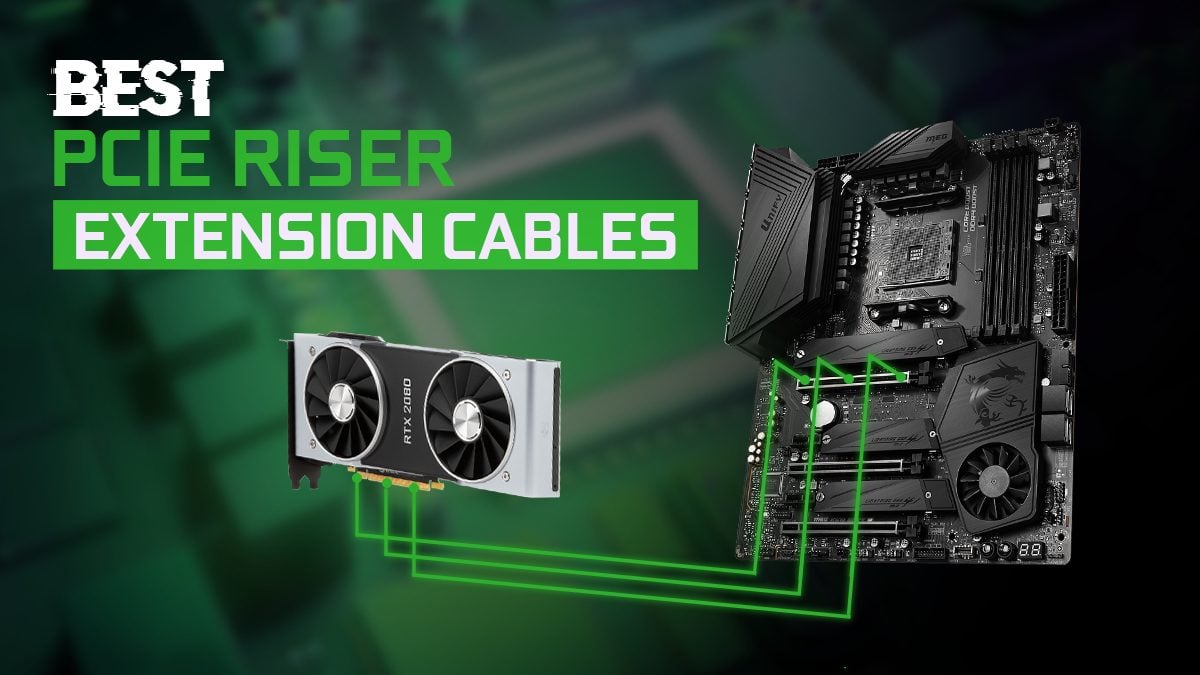

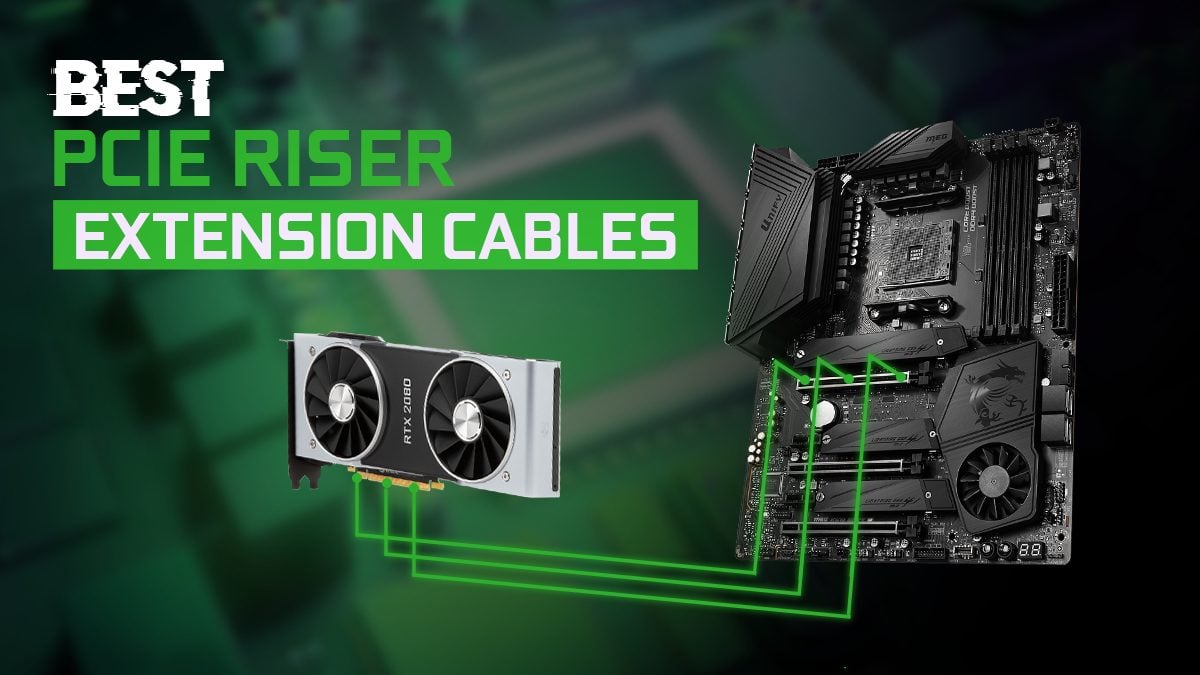

Best PCIE Riser & Extension Cables for heavy GPU Workloads

You will want to make sure you don't lose any performance when attaching one or more GPUs to the motherboard through riser cables.

GIGABYTE AMD WRX80 Motherboard with sWRX8 4094 Socket, 8-Channel DDR4 RDIMM 8 x DIMMs, Aspeed AST2500 BMC, Dual M.2, Dual Intel Server 10G and 1G LAN, 7 x PCIe 4.0 x16 Slots WRX80-SU8-IPMI (rev. 1.0) - Newegg.com

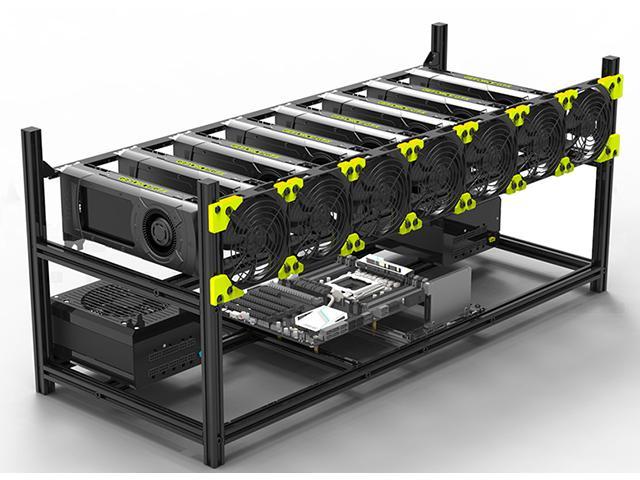

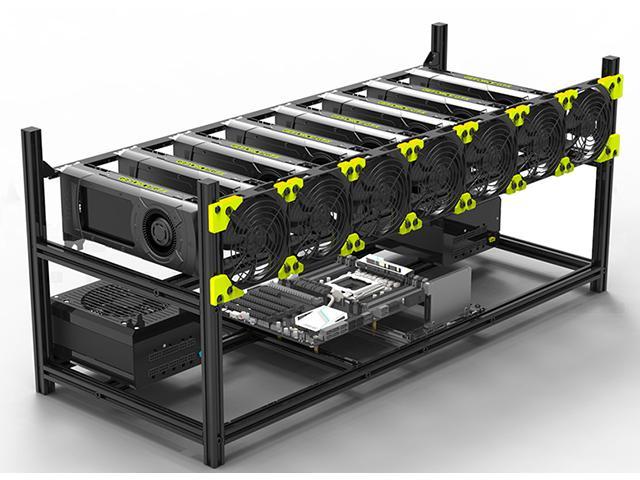

8 GPU Stackable Preassemble Mining Case Rig Aluminum Open Air Frame For Ethereum(ETH)/ETC/ZCash/Monero/BTC Easy Mounting Edition(Just 10 minutes) - Newegg.com
