
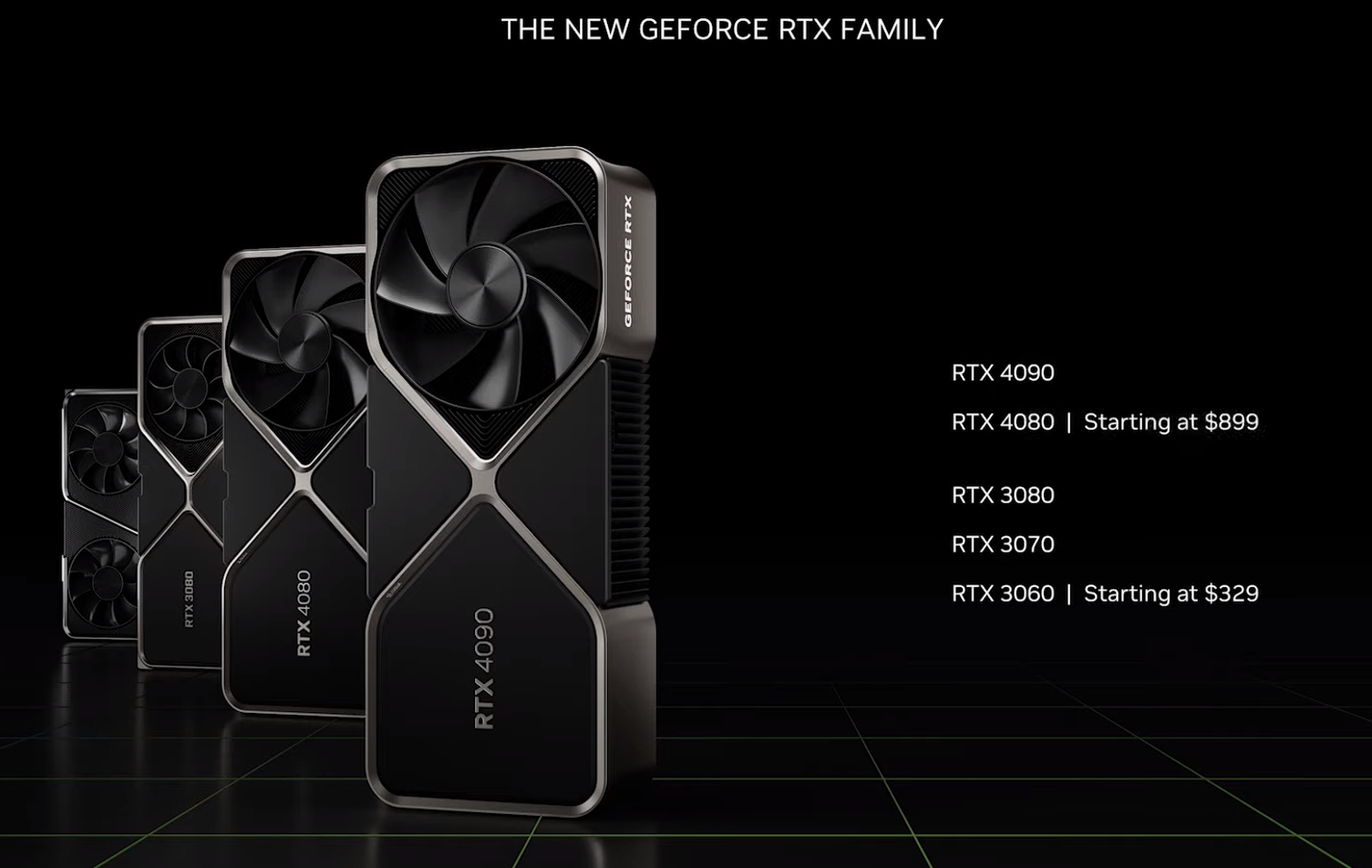

Nvidia showed off its Ada Lovelace architecture with top-of-the-line GeForce Gaming graphics cards in its GTC keynote on 20th September 2022.

Nvidia claims the new lineup, dubbed the GeForce RTX 4000 series, delivers impressive performance gains over its previous-generation RTX cards. So, is it time to rush out and buy one as soon as you find one on shelves?

Sadly, it doesn’t seem that straightforward. There’s a bit to unpack, so bear with me.

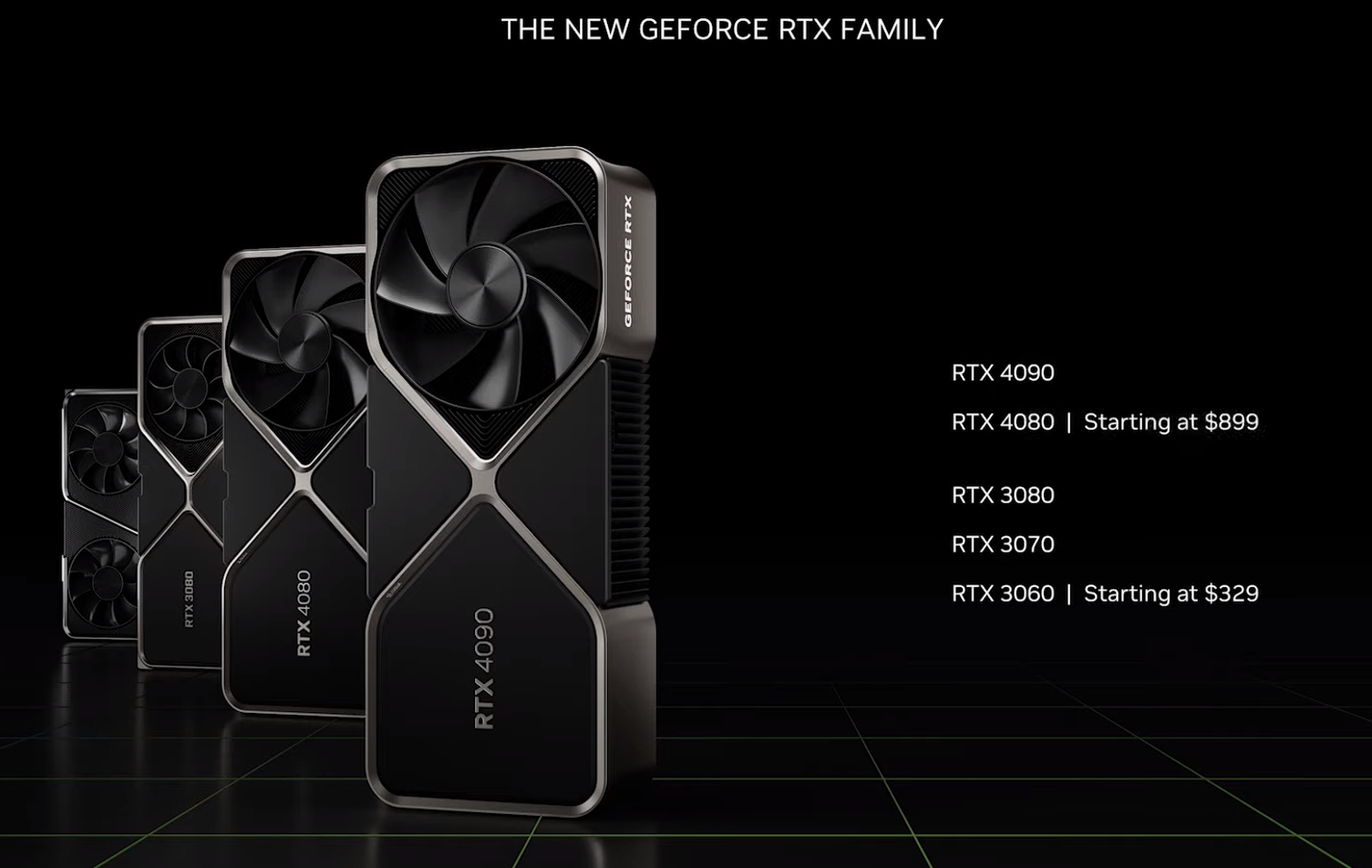

First, here are the SKUs that Nvidia CEO, Jen-Hsun “Jensen” Huang, announced on stage:

Price Table USD & EUR

- RTX 4090

- RTX 4080 16GB

- RTX 4080 12GB (more about this later)

Update: Nvidia ‘unlaunched’ the RTX 4080 12GB shortly after we wrote this article.

No information was shared about lower-end SKUs like an RTX 4060. That said, Nvidia did close the GPU reveal with a worrying slide that doesn’t bode well for those parts:

The slide indicates that RTX 3000 parts like the RTX 3060, 3070, and 3080 will remain Nvidia’s ‘mid-range’ offerings.

Unless Nvidia can clear existing stock or AMD punches Nvidia in the gut with their Radeon announcement on 3rd November 2022 and forces their hand, I don’t see much hope for a mid-range offering from the RTX 4000 series anytime soon.

(Intel’s launch of its Arc A770 and A750 graphics cards on the same day that Nvidia releases its RTX 40-series cards is also something to watch.)

Now, let’s break the Nvidia CEO’s keynote down into bite-sized pieces, shall we?

Dissecting the Major Nvidia RTX 4000 Series Announcements

Impressive CUDA Core Counts

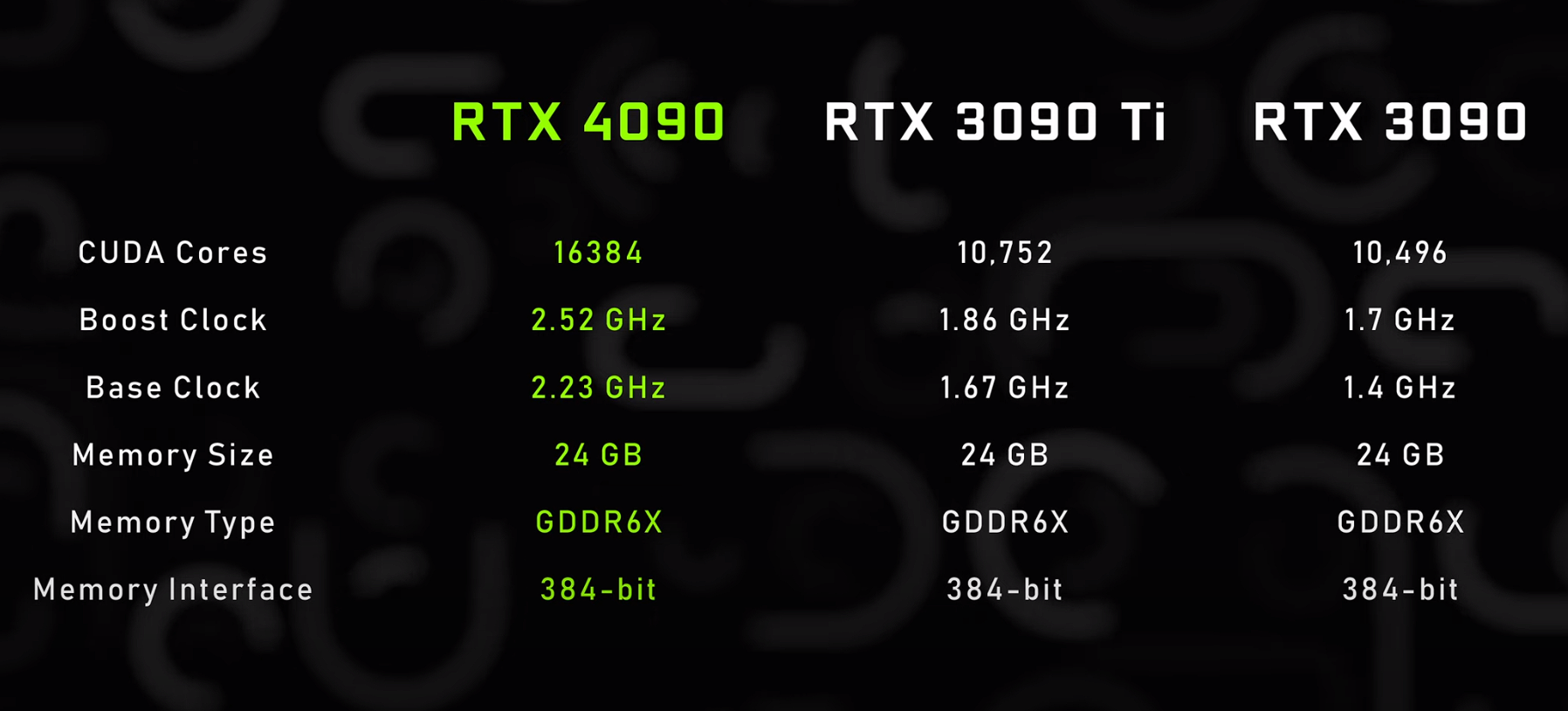

Nvidia has packed a whopping 16,384 CUDA cores into its top-tier RTX 4090.

To put this into perspective, the last-gen top-tier RTX 3090 Ti offered just 10,752 CUDA cores.

That’s a huge jump.

In a vacuum, the improvement here is incredible. But the entire lineup tells a different story.

Something seems off here, doesn’t it?

Hopping just one tier down to an RTX 4080 (16GB) gives you even fewer CUDA cores than the last-gen RTX 3090!

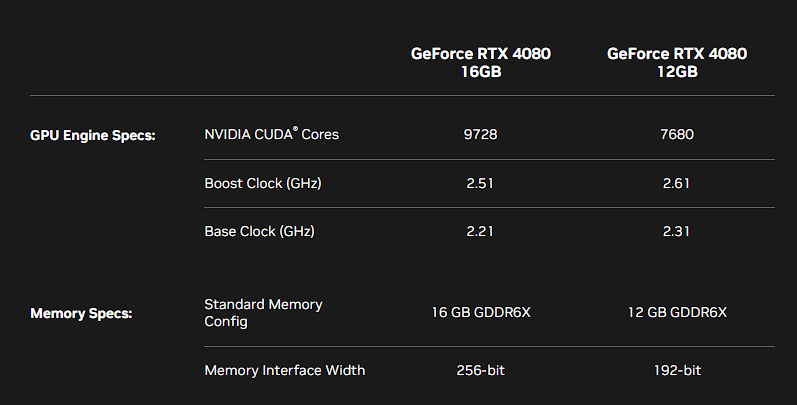

And the RTX 4080 (12GB) has a different CUDA count and memory bandwidth – making it…well, not really an RTX 4080?

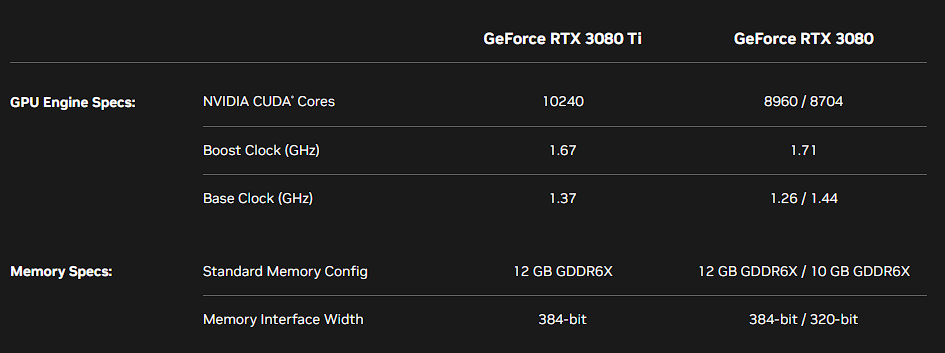

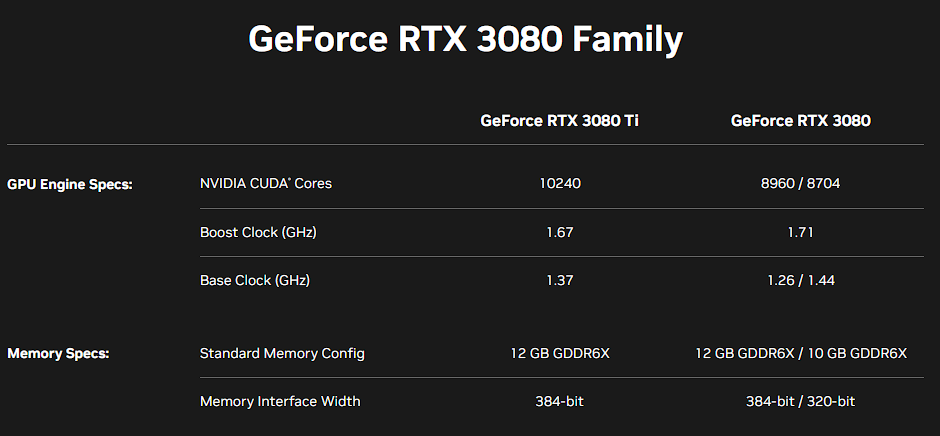

For reference, here are the specs for an RTX 3080 and 3080 Ti.

So, on the 30-series, we went from 10,752 CUDA cores (RTX 3090 Ti) to 8,960 or 8,704 (RTX 3080 12GB and 10GB, respectively). That’s a 17-19% drop in CUDA core count.

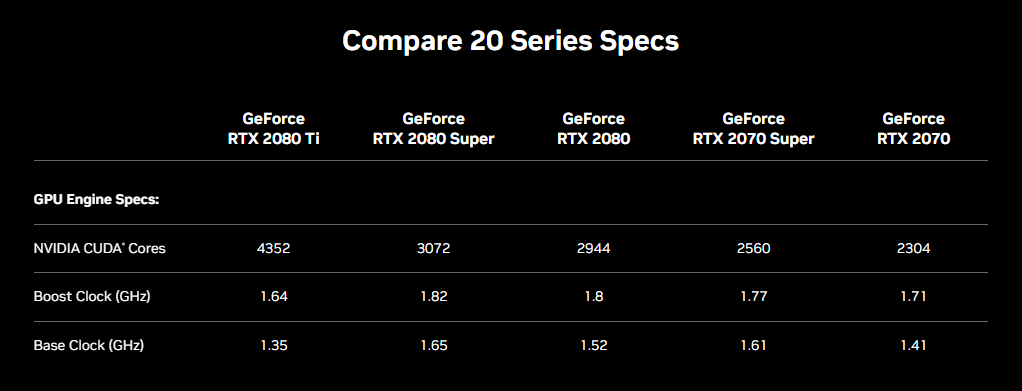

Even with the RTX 20-series, the drop was considered massive, with a 32% drop going from an RTX 2080 Ti to an RTX 2080.

And this time, we’re going from 16,384 (RTX 4090) to 9,728 (RTX 4080 16GB) in a single SKU jump. That’s a 40% cut to CUDA count.
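If you’d like to check these percentages yourself, here’s a minimal Python sketch of the arithmetic. The 30- and 40-series core counts are the ones quoted in this article; the RTX 2080 Ti and RTX 2080 counts (4,352 and 2,944) aren’t listed above and are my assumption based on Nvidia’s public spec sheets. The `core_drop_percent` helper is just an illustrative name.

```python
# CUDA core counts. The 30- and 40-series figures are quoted in the article;
# the RTX 2080 Ti / RTX 2080 figures are an assumption taken from public specs.
CUDA_CORES = {
    "RTX 4090": 16384,
    "RTX 4080 16GB": 9728,
    "RTX 3090 Ti": 10752,
    "RTX 3080 12GB": 8960,
    "RTX 3080 10GB": 8704,
    "RTX 2080 Ti": 4352,
    "RTX 2080": 2944,
}


def core_drop_percent(top: str, lower: str) -> float:
    """Percentage of CUDA cores lost when stepping down from `top` to `lower`."""
    top_cores = CUDA_CORES[top]
    return (top_cores - CUDA_CORES[lower]) / top_cores * 100


STEPS = [
    ("RTX 2080 Ti", "RTX 2080"),
    ("RTX 3090 Ti", "RTX 3080 12GB"),
    ("RTX 3090 Ti", "RTX 3080 10GB"),
    ("RTX 4090", "RTX 4080 16GB"),
]

for top, lower in STEPS:
    drop = core_drop_percent(top, lower)
    print(f"{top} -> {lower}: {drop:.1f}% fewer CUDA cores")
```

As noted further down, raw core counts don’t compare well across architectures, so treat this purely as a way to see how wide the gap within each generation is.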

Nvidia could’ve easily slotted in one or even two more SKUs in the gap between an RTX 4080 and an RTX 4090. But that would’ve meant branding what they released as an RTX 4080 16GB now as something like an RTX 4070, at best.

If you bring pricing into the picture, it looks so much worse.

To be completely clear, directly comparing CUDA counts isn’t a great idea because each architecture will perform very differently, and a new-gen core will be far more capable.

I’m just illustrating how the gap at the top end of the product stack has widened so drastically this generation.

So, instead, Nvidia went with the plan of quietly leaving a significant gulf between the top 2 SKUs and adding a 3rd SKU with a confusing name to distract everyone.

Now, while consumers and media celebrate the company ‘unlaunching’ the 12GB RTX 4080, Nvidia gets to market a (kind of) *70-class card at well over $1000 without anyone flinching.

The 12GB Nvidia RTX ‘4080’: Is it Really a 4080?

Update: Nvidia rolled back the release of the RTX 4080 12GB, calling it ‘confusing.’ However, it is still going ahead with the RTX 4080 16GB without any changes.

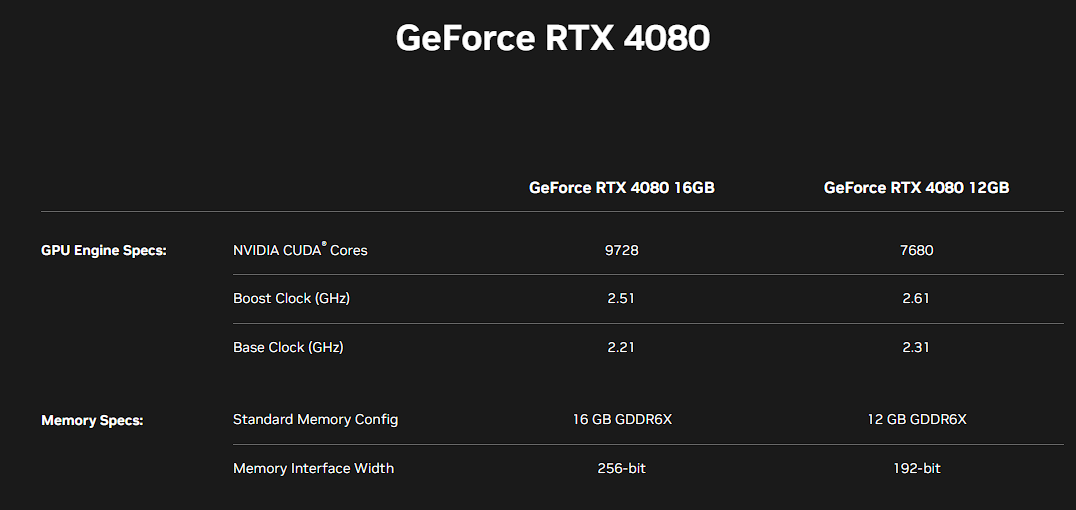

Before I dive into this one, here are a few GPU specifications you should see.

Direct your attention to the CUDA core counts and Memory Interface Widths.

The difference in CUDA core counts between the 12GB and 16GB RTX 4080 is massive, not to mention that narrower 192-bit memory bus. Fun fact: the 192-bit bus has almost always been the choice for 60-class cards from Nvidia.

Now, many would say that it doesn’t matter what the specs are as long as performance is comparable. No arguments there.

If the performance gulf between an RTX 4090 and an RTX 4080 isn’t humongous, nothing else matters.

And going by the performance slides Nvidia showed off, RT + DLSS performance at 4K did seem pretty close.

But for games that don’t use these technologies, I’m pretty sure you’re looking at a severe performance drop.

It’s just not right for Nvidia to market the 12GB variant as an RTX 4080 in any form.

Power Consumption Bump: Is it Worth the Performance Gained?

Although there is a considerable performance bump using RTX and DLSS, the power envelope for these new graphics cards also seems much higher.

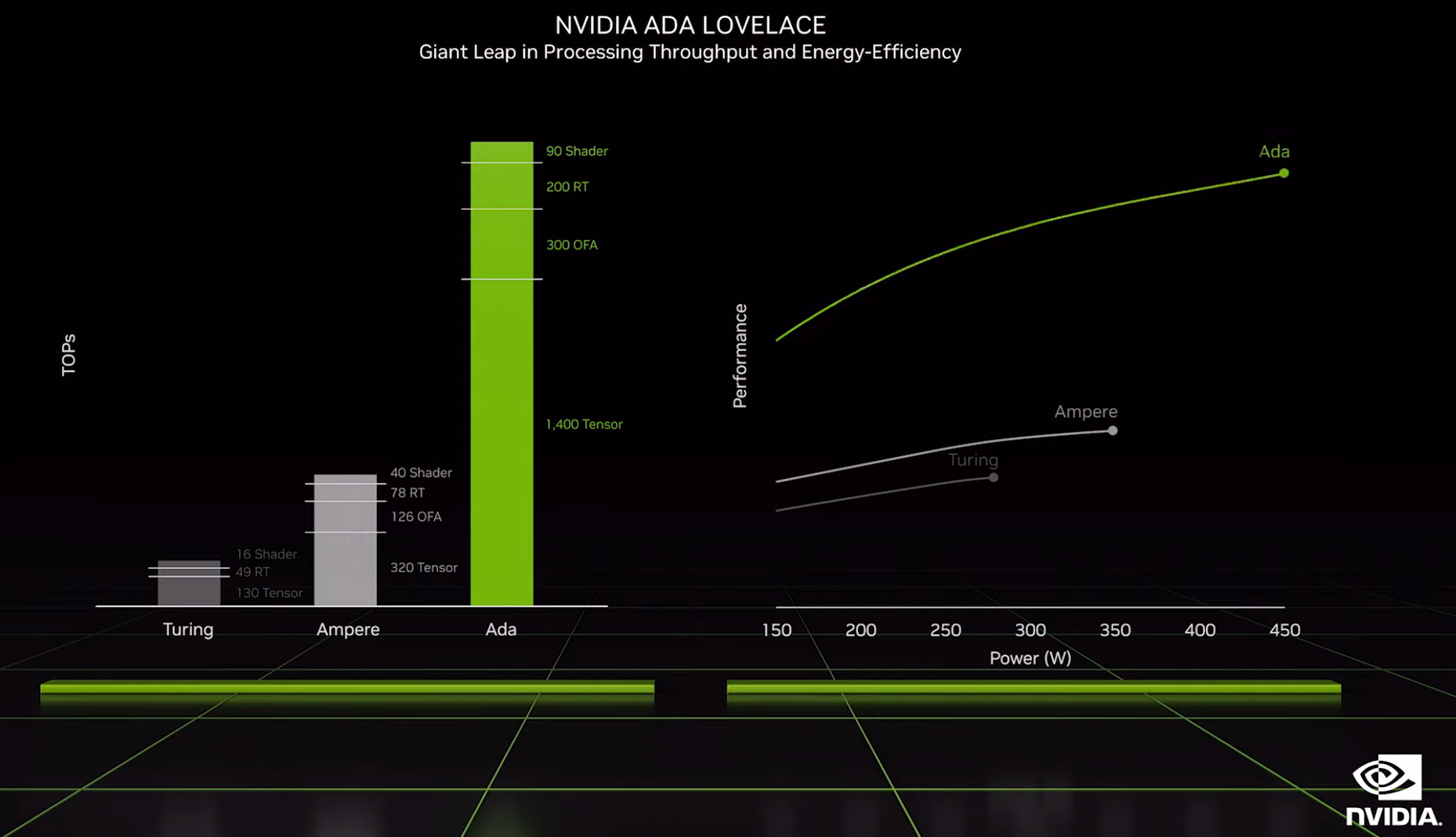

Here’s the graph that Nvidia presented during its keynote:

Going off this, you’ll notice a few things:

- Ampere power consumption in wattage tops out at 350W on the graph, while many RTX 3090 Ti graphics cards could draw as much as ~430W in games and ~490W in stress tests. Non-OC models sat closer to the 400W mark.

- Now, Nvidia reports that the Ada Lovelace GPUs supposedly stretch that 350W number to an eye-watering 450W.

Going by the gap between Nvidia’s reported number for Ampere and what those cards actually drew, I’d wager that most OC’ed AIB RTX 4090 graphics cards would draw up to 500W on average for gaming and go well above that in stress tests.

But for professionals with GPU rendering workloads, you should see lower power usage than gaming, as clocks don’t boost up that high.

However, many would argue (rightly so) that you’re getting double the performance as well! If you’re genuinely getting DOUBLE the performance of an Ampere GPU and want only a single GPU in your system, then it’s an easy choice.

Yes, it’s worth it!
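To put a rough number on that, here’s a quick back-of-the-envelope sketch. It assumes the two keynote figures hold as stated (350W for Ampere, 450W for Ada) and that the “double the performance” claim is accurate; real-world draw, as discussed above, will likely differ. The function name is purely illustrative.

```python
def perf_per_watt_gain(perf_ratio: float, old_watts: float, new_watts: float) -> float:
    """Relative performance per watt of the new card compared to the old one.

    perf_ratio: new performance divided by old performance (2.0 = twice as fast).
    """
    power_ratio = new_watts / old_watts
    return perf_ratio / power_ratio


# Keynote figures quoted above: 350 W (Ampere) vs. 450 W (Ada), ~2x performance claimed.
gain = perf_per_watt_gain(perf_ratio=2.0, old_watts=350, new_watts=450)
print(f"Performance per watt vs. Ampere: {gain:.2f}x")
```

Under those assumptions, doubling performance for roughly 29% more power still works out to about a 1.5x efficiency improvement, which is why the higher wattage alone isn’t a deal-breaker for a single-GPU build.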

Professional workloads like GPU rendering that can effectively use Nvidia’s RT cores should enjoy an advantage.

However, because these cards are so large and draw so much power, they limit how many graphics cards you can fit in your system.

For GPU rendering workloads, that’s just too important a factor to disregard.

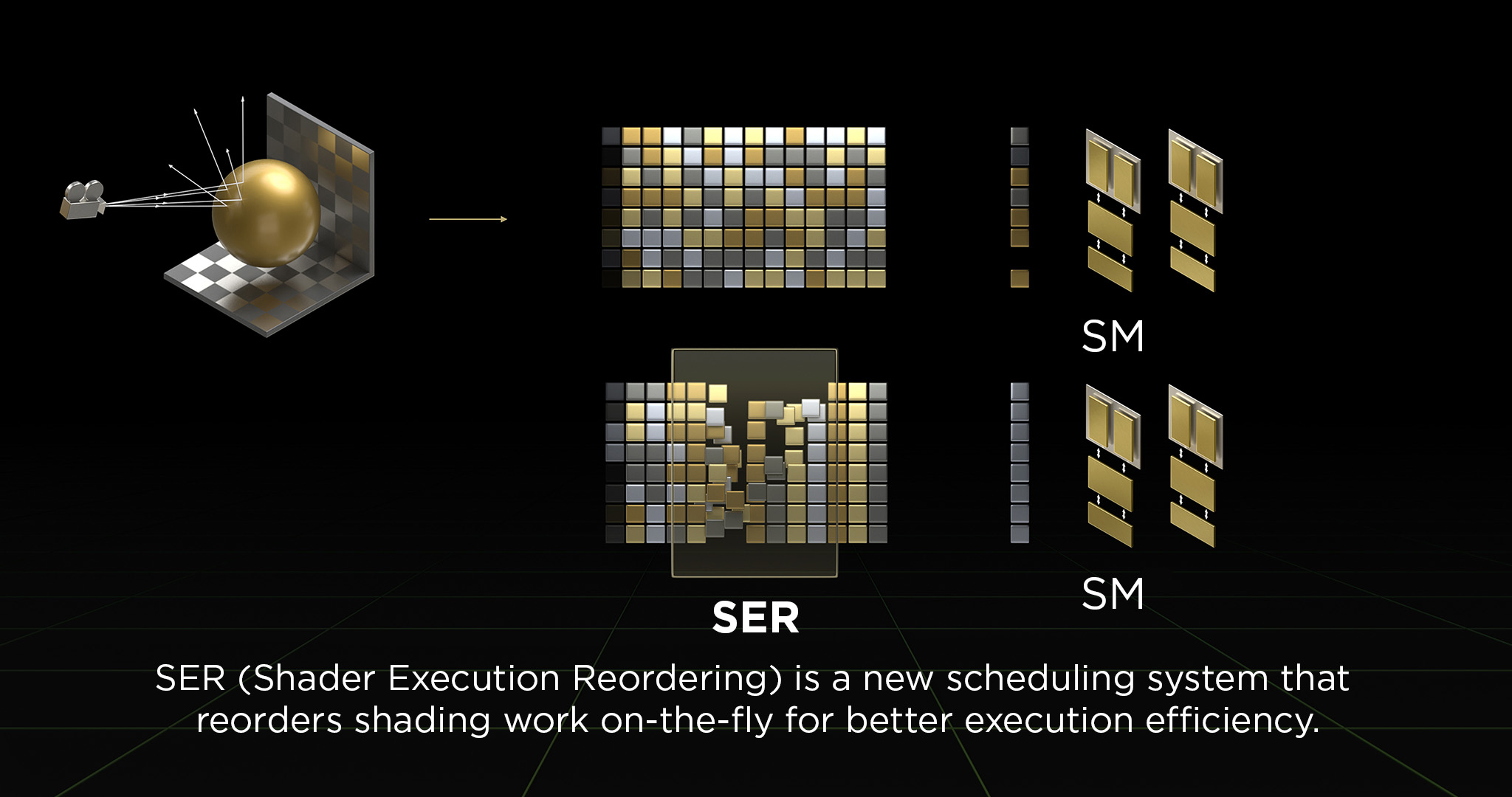

Shader Execution Reordering

Nvidia also announced the inclusion of new tech in the Streaming Multiprocessors of Ada Lovelace GPUs called Shader Execution Reordering (SER).

Source: Nvidia

During this part of the keynote, Jensen Huang made a pretty big claim.

“Ada’s SM includes a major new technology called Shader Execution Reordering. SER is as big an innovation as out-of-order execution was for CPUs!” – Jensen Huang (CEO, Nvidia)

Out-of-order execution (OoOE) is basically what made mainstream computing a reality in the mid-90s. The claim that Nvidia’s Shader Execution Reordering is as big an innovation as OoOE is indeed an impressive and bold one.

A claim that I sincerely hope is backed by equally remarkable performance because it’d be an exciting time for graphics processing.

According to Nvidia, SER allows up to a 2-3x uplift in Ray Tracing performance and a 25% improvement in rasterization performance. Of course, independent reviews and benchmarks will have to verify these claims, but if true, it’s an excellent step towards more efficient computing.

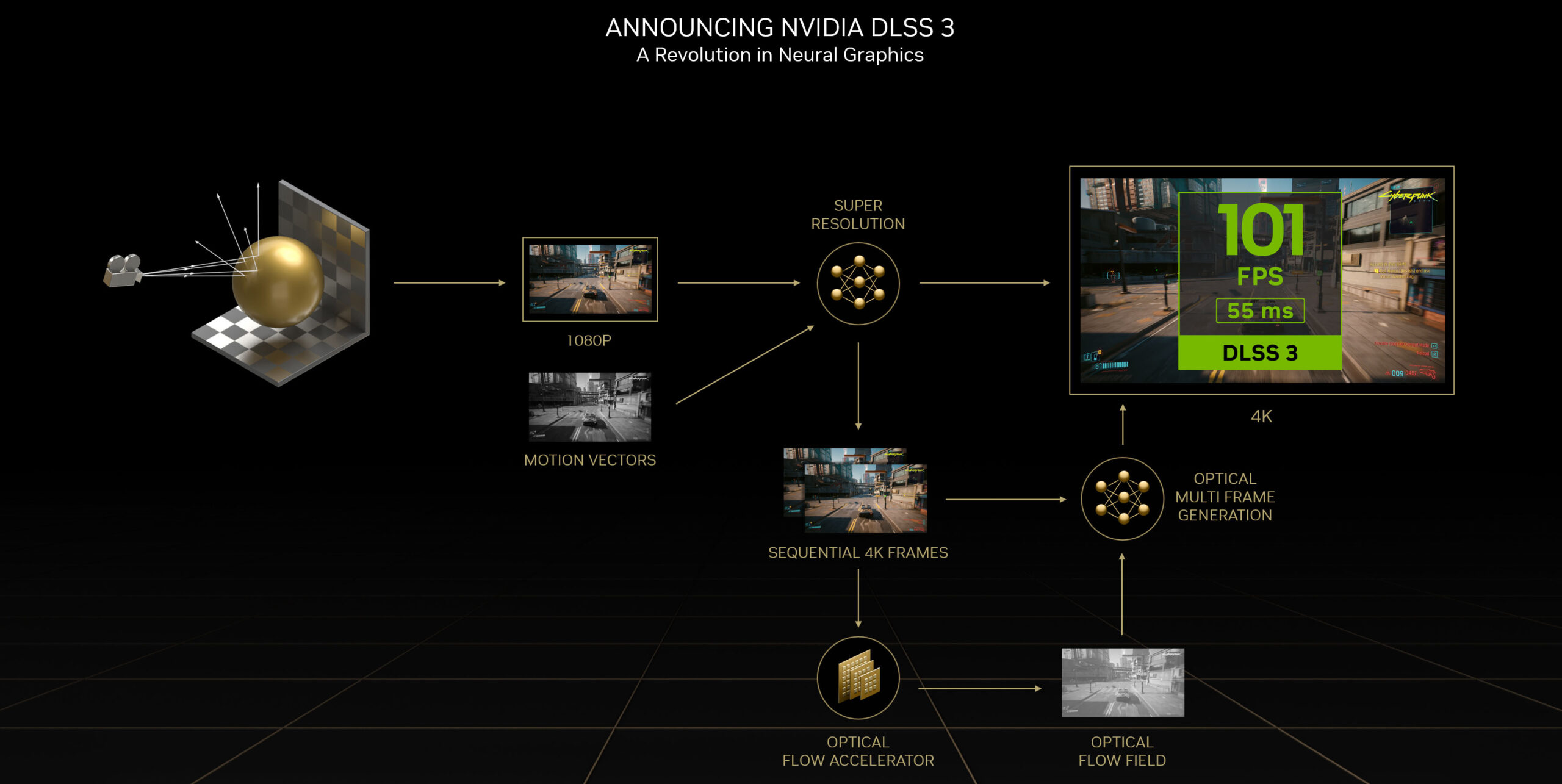

RTX Neural Rendering Courtesy DLSS 3.0

Nvidia also showed off its brand-new Neural Rendering technology used within DLSS 3.0.

Source: Nvidia

Here’s how it works.

Your graphics card processes the current frame and the previous frame of your game to assess how a scene is changing. At the same time, Ada’s Optical Flow Accelerator supplies data about the direction and velocity of pixels from frame to frame.

The pairs of frames and direction and velocity data are fed into the neural network to generate intermediate frames. These intermediate frames are generated from scratch – ensuring a smooth gameplay experience.
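To make the idea of an “intermediate frame” a bit more concrete, here’s a toy Python sketch. To be clear, this is NOT how DLSS 3.0 works internally: Nvidia uses dedicated hardware and a neural network, while this example simply pushes each pixel halfway along a hand-written motion vector. The `interpolate_midframe` function, the tiny 1x4 “frames”, and the flow values are all made up for illustration.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]             # grayscale pixel values, indexed as frame[y][x]
Flow = List[List[Tuple[int, int]]]  # per-pixel (dx, dy) motion from previous to current frame


def interpolate_midframe(prev: Frame, curr: Frame, flow: Flow) -> Frame:
    """Toy frame interpolation: move each pixel of `prev` halfway along its motion vector."""
    height, width = len(prev), len(prev[0])
    mid: List[List[Optional[int]]] = [[None] * width for _ in range(height)]

    # Visit static pixels first so that moving pixels are drawn on top of them.
    pixels = [(x, y) for y in range(height) for x in range(width)]
    pixels.sort(key=lambda p: flow[p[1]][p[0]] != (0, 0))

    for x, y in pixels:
        dx, dy = flow[y][x]
        tx, ty = x + dx // 2, y + dy // 2  # integer halfway point of the motion
        if 0 <= tx < width and 0 <= ty < height:
            mid[ty][tx] = prev[y][x]

    # Pixels nothing landed on were uncovered by motion; borrow them from the current frame.
    return [
        [curr[y][x] if mid[y][x] is None else mid[y][x] for x in range(width)]
        for y in range(height)
    ]


# A bright pixel (9) moves two steps to the right between the two frames.
prev_frame = [[9, 0, 0, 0]]
curr_frame = [[0, 0, 9, 0]]
motion = [[(2, 0), (0, 0), (0, 0), (0, 0)]]

print(interpolate_midframe(prev_frame, curr_frame, motion))  # [[0, 9, 0, 0]]
```

The generated frame shows the bright pixel halfway between its old and new positions, which is the basic promise of frame generation. It also hints at why fast, unpredictable motion is harder to get right than a slow camera pan: the motion data has to be accurate for every pixel.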

Nvidia also claims that because DLSS 3.0 doesn’t put additional load on your GPU, it can boost performance by up to 4x!

That said, the game footage Nvidia showed during its launch event wasn’t too convincing. For example, they showed off Cyberpunk 2077, but the scene showcased had minimal camera pan and predictable movement.

However, and this is pure speculation, the applications for this technology in GPU rendering could be ground-breaking.

If you could use AI to generate intermediate frames for you, rendering tasks should also theoretically be able to leverage this capability. We’ll just have to wait and watch how/if rendering engines plan to use the Ada Lovelace GPUs.

Pricing: Wallets are Groaning Already

Although the price increase of a mere $100 at the absolute high-end won’t really turn people off, the higher mid-range will really sting.

With the CUDA counts that Nvidia is offering on its ‘premium’ 80-class cards this time around, the mid-range is more-or-less DOA unless we see price cuts or lineup shuffling.

Nothing except the RTX 4090 makes any sense for buyers, at the moment. If you want to buy 80 or 70-class cards, I’d recommend waiting for Radeon’s event to see how Nvidia responds.

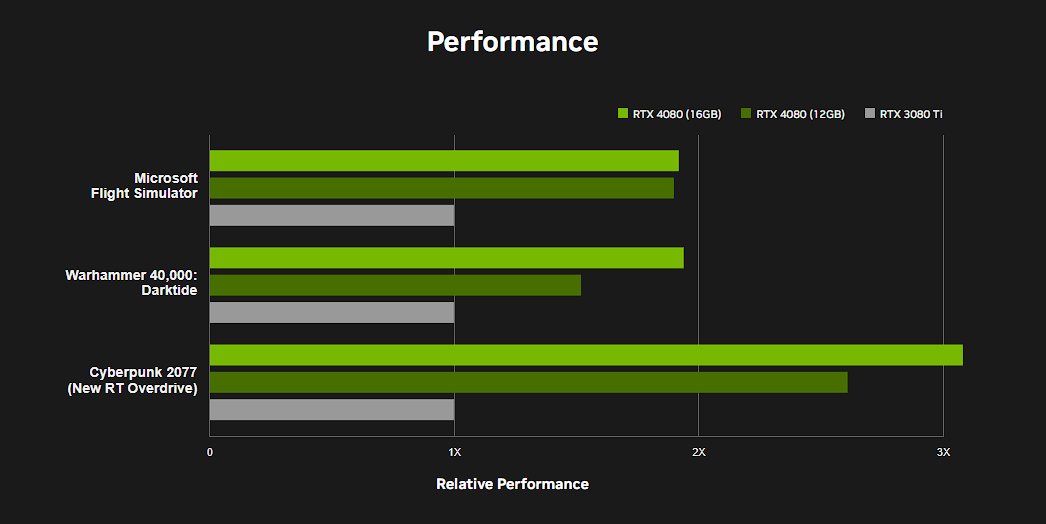

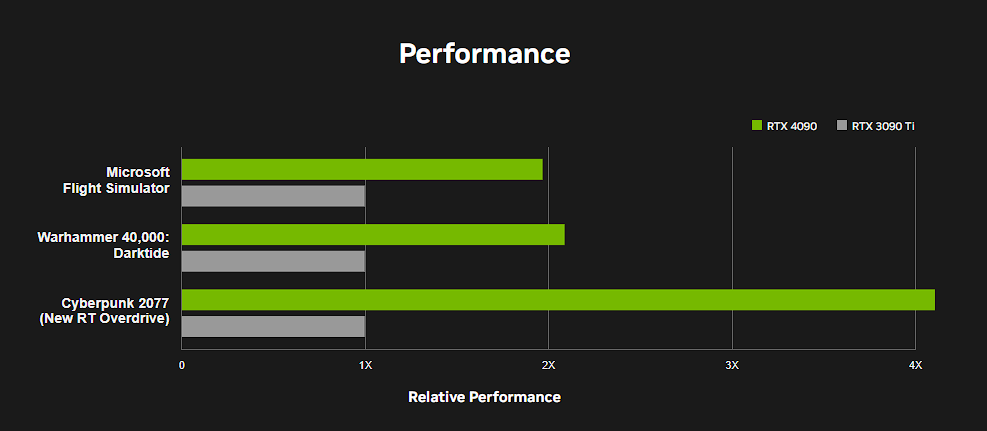

Performance: Hitting it Out of the Park?

Here are the graphs that Nvidia showcased for the RTX 40-series graphics cards:

At first glance, the gaming performance looks incredible, doesn’t it? However, there’s a minor footnote –

“3840×2160 Resolution, Highest Game Settings, DLSS Super Resolution Performance Mode, DLSS Frame Generation on RTX 40 Series, i9-12900K, 32GB RAM, Win 11 x64. All DLSS Frame Generation data and Cyberpunk 2077 with new Ray Tracing: Overdrive Mode based on pre-release builds.”

So, to see gains anywhere near the numbers shown, you need games that support both DLSS and RT. For pure rasterization, I would expect around 60-70% better performance.

Even with the CUDA core count and memory bandwidth differences, these cards’ RT + DLSS performance doesn’t seem too far off for some reason.

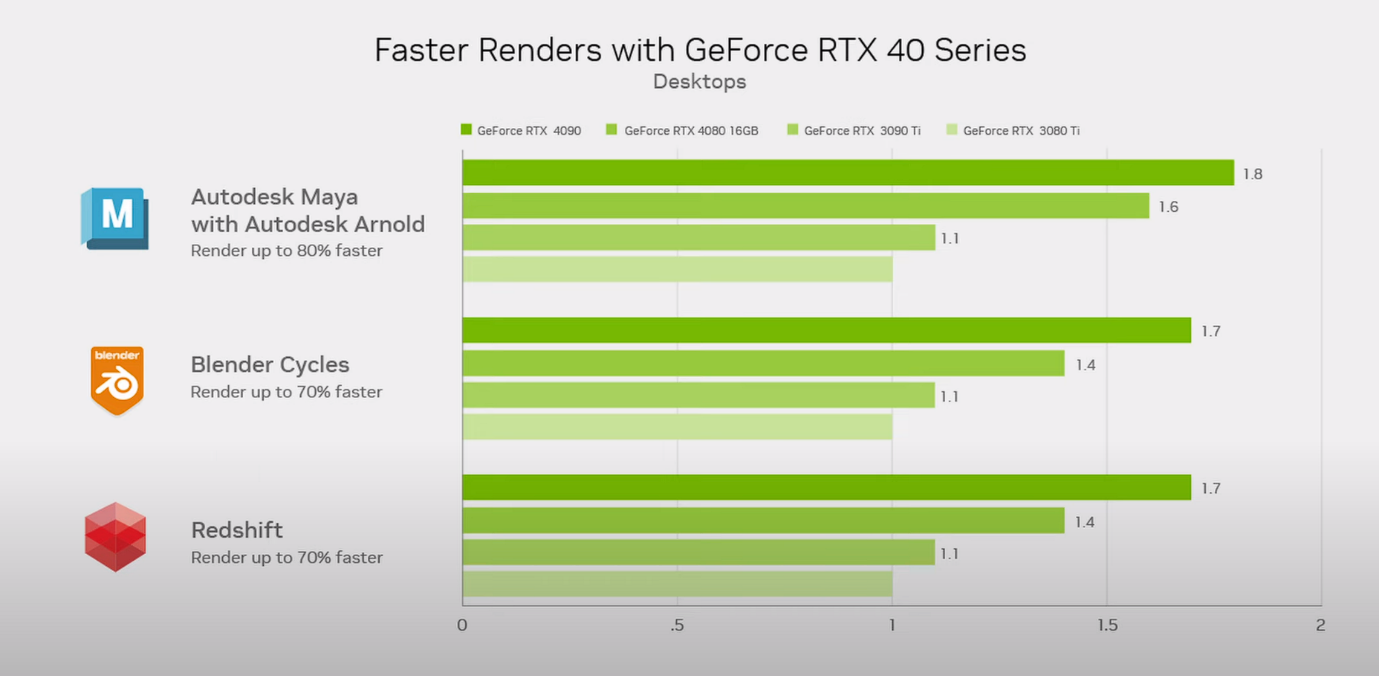

Nvidia also showed off a graph for professional workloads that looks absolutely fantastic.

If Nvidia isn’t using scenes that actively help its new architecture, the improvements here are pretty substantial.

In Redshift, we’re looking at a 60-70% uplift in render speeds. If a single RTX 4090 can do nearly the work done by 2x RTX 3090 graphics cards, the power consumption isn’t really too bad.

You can find benchmark results and a performance overview below.

RTX 4090 Benchmarks for Workstation Apps

GPU Rendering Workloads

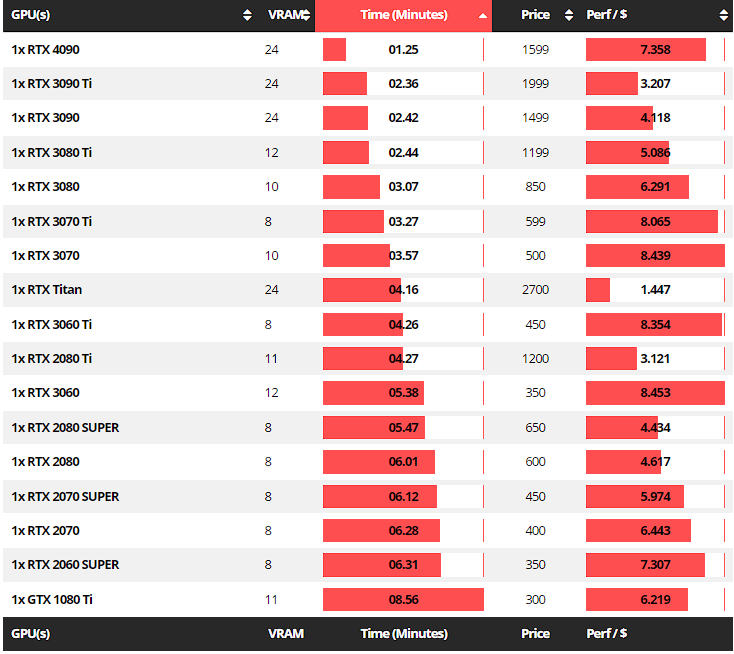

RTX 4090 Redshift Benchmarks

Maxon Redshift is one of the most popular GPU render engines out there, and how the RTX 40-series performs in this test will dictate whether it’s a viable upgrade for professionals still running older GPUs.

Although it scales linearly with multiple GPUs, the humongous (>4-slot) RTX 4090 cards certainly won’t allow more than one or two, even in large systems. And we haven’t even started to consider power draw yet.

So, how is the performance? Let’s find out! [Full Redshift Benchmark List]

There’s absolutely no doubt about the performance the RTX 4090 brings to the table. It’s down to just over a single minute! That’s impressive. Doubling the performance of an RTX 3090 is no small feat.

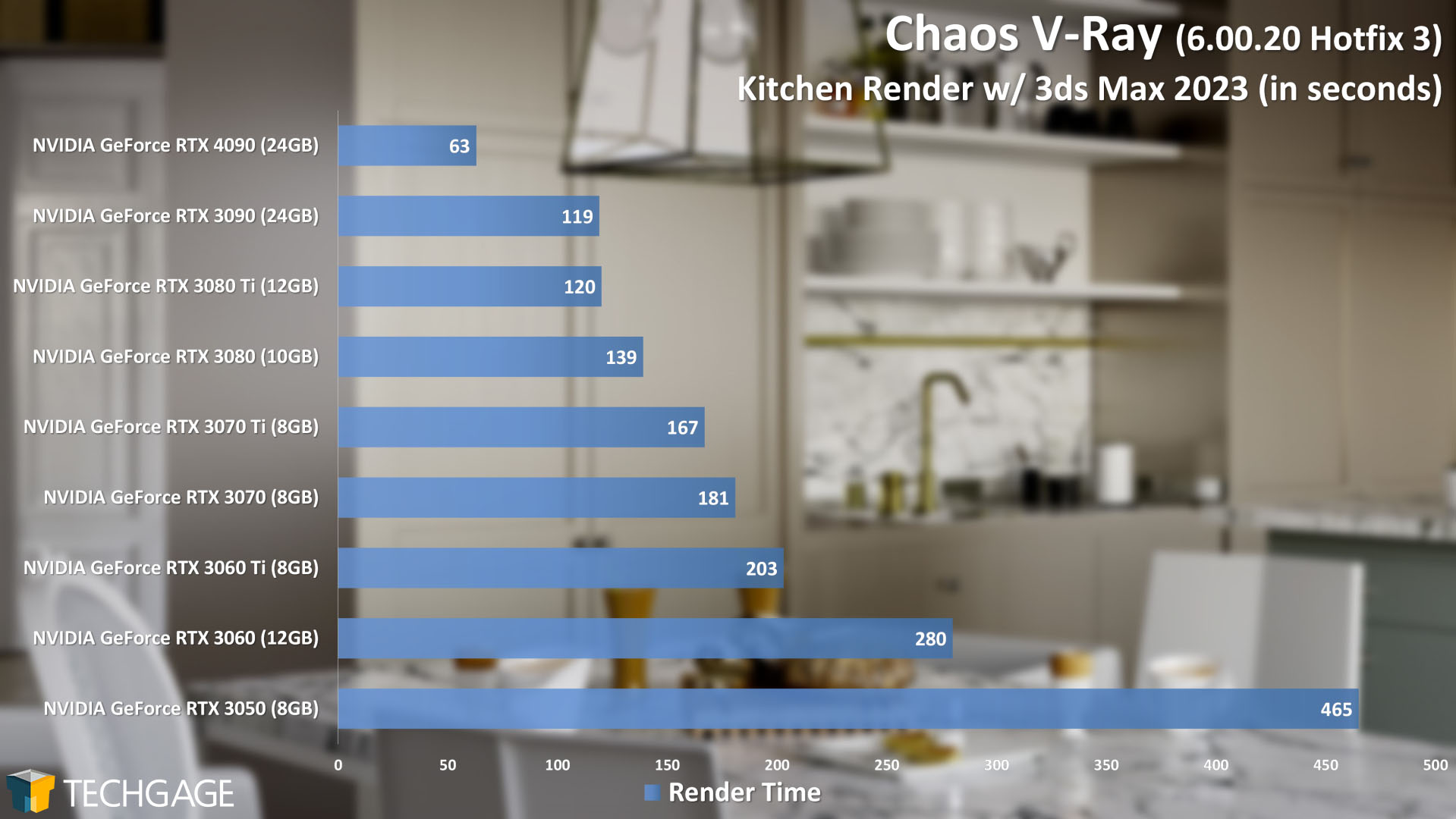

RTX 4090 Performance in Chaos V-Ray

A special thanks to Rob Williams at TechGage and his RTX 4090 benchmark article for the V-Ray and Autodesk Arnold data.

So, doubling the performance of an RTX 3090 is starting to seem like a trend, even with V-Ray, doesn’t it? The mighty Kitchen render now takes just over a minute in total with an RTX 4090!

Source – TechGage

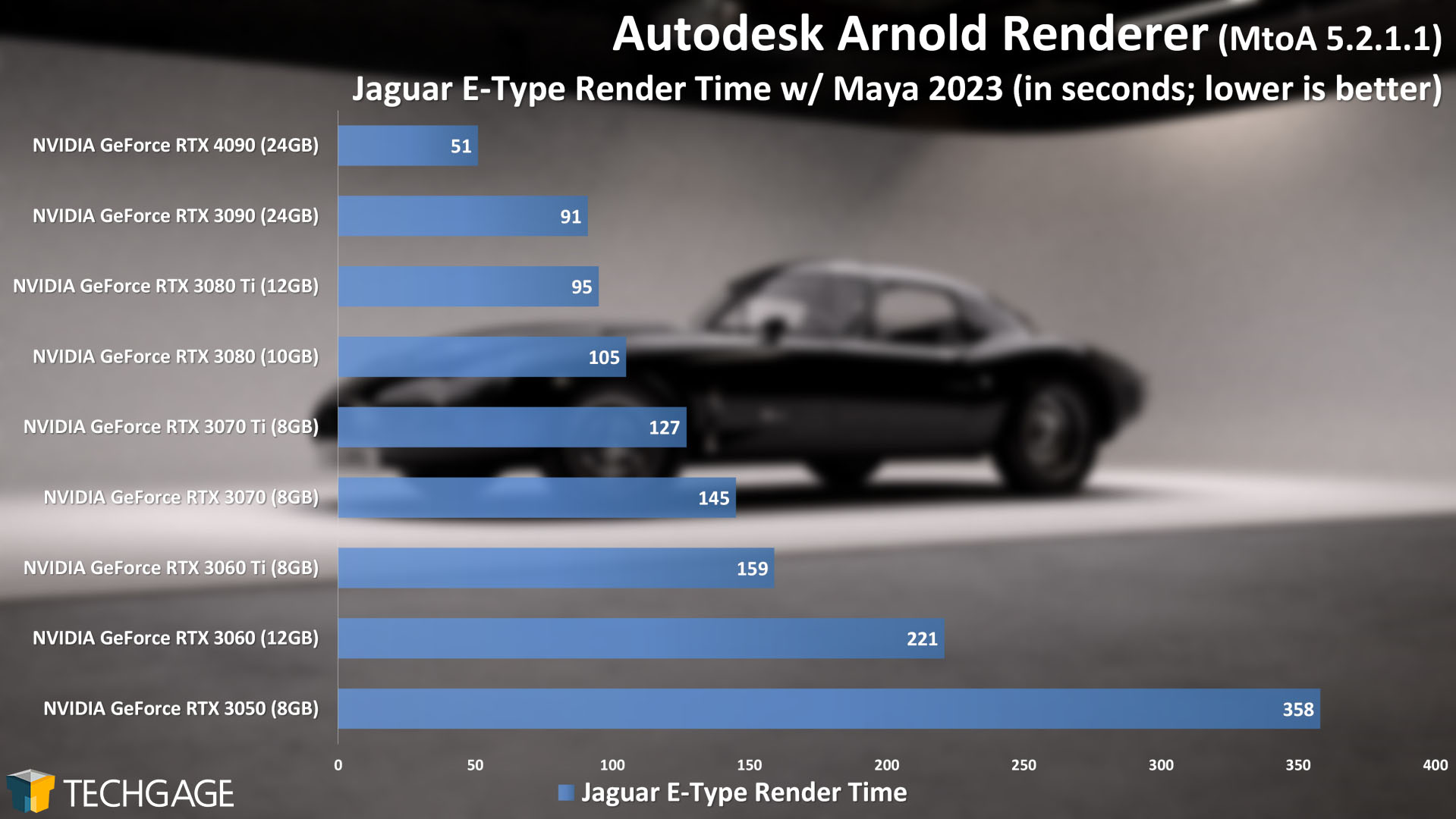

RTX 4090 Performance in Autodesk Arnold Renderer

Nvidia’s RTX 4090 continues the trend of nearly halving the render times of an RTX 3090 in Autodesk’s popular Arnold renderer as well.

Source – TechGage

RTX 4090 Performance in Blender (Optix)

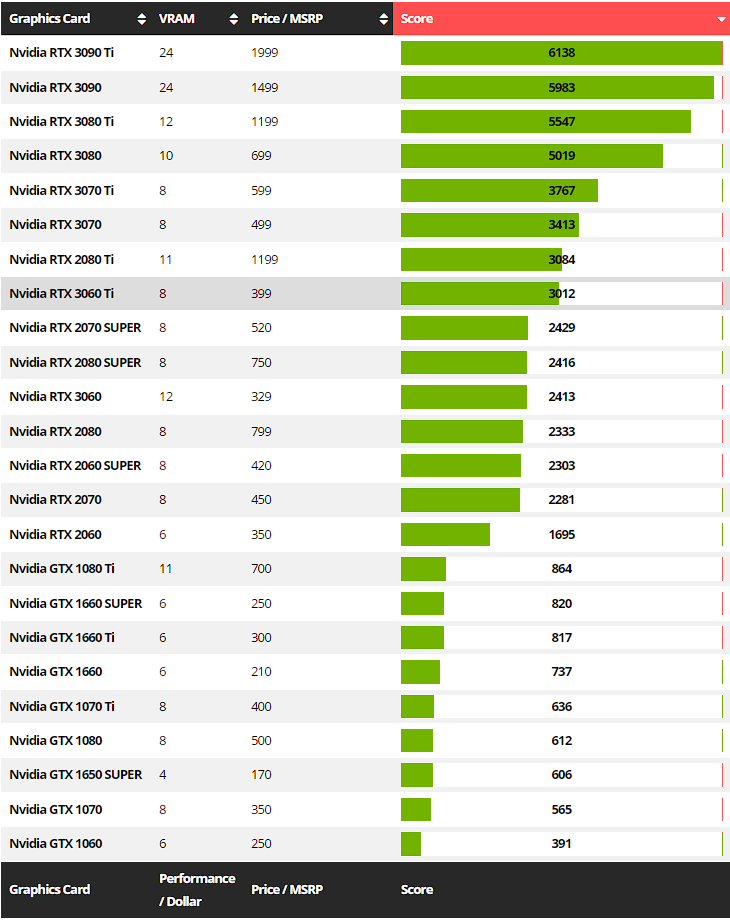

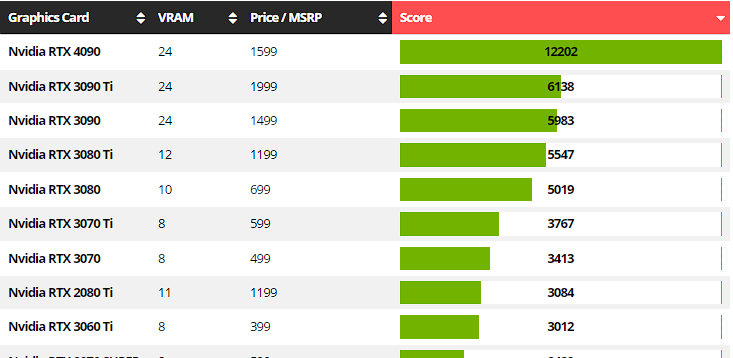

It’s sometimes difficult to visualize how much improvement a new-gen product brings to the table. So, let’s see our Blender benchmark chart before adding the RTX 4090:

Now, after running the Blender Open Data benchmark on the RTX 4090, here’s what the chart looks like:

At a glance, it’s clear that Nvidia has continued its tradition of effectively doubling performance with each subsequent release (2080 Ti -> 3090 -> 4090).

If your primary workload is Blender, and you need all the speed you can get for Optix rendering from a single GPU, the RTX 4090 has absolutely no peer, at the moment.

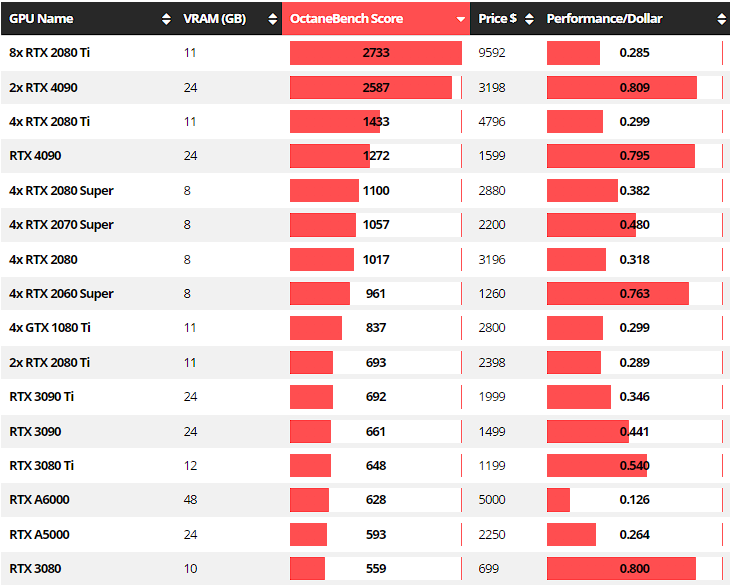

RTX 4090 Performance in Octane Render

Again, the RTX 4090 bests the Nvidia RTX 3090 by offering double the performance in Octane Render.

On a hilarious side note, the only config that comes close to the RTX 4090 requires a whopping 8 RTX 2080 Ti graphics cards strung along with risers and paraphernalia. If that’s where you’re at, it’s time to grab an RTX 4090. Yesterday.

It’s clear that for a single or dual-GPU setup, the RTX 4090 is a powerhouse like nothing we’ve seen before.

But when you factor in the possibility of adding more GPUs to a render node, it goes a bit sideways.

Does an RTX 4090 Make Sense for GPU Rendering Workloads?

Although we’re impressed with Nvidia’s RTX 4090 performance numbers, some caveats make it a difficult ‘outright’ recommendation.

If you’re building a brand-new workstation/render node, it makes perfect sense to go with Nvidia’s RTX 4090 graphics card, ESPECIALLY if you’re not looking into multi-GPU setups.

However, what if you’re upgrading from older-gen parts?

That’s where things get a bit murky. Three things professionals should consider:

- Take a closer look at the performance charts, especially at those 30-series numbers, and keep in mind that most of those cards are available as 2-slot blower models.

- Using an RTX 4090 severely limits the number of GPUs you can add to further speed up your renders on GPU renderers like Redshift.

- Would adding another cheaper GPU get you similar performance?

Here are some estimates:

Suppose you run a render node with four RTX 2080 Ti graphics cards. You’d complete the Redshift benchmark in roughly 64-67 seconds.

What if you’re running 4 RTX 3080s instead? Well, now you’re already completing this render in around 45-47 seconds.

A single RTX 4090 completes this benchmark scene in roughly double that time, at 85 seconds. If you do manage to squeeze 2 of these into a build, you’ll drop that time to ~42 seconds.

Unfortunately, the drop just isn’t wide enough to warrant an upgrade for Redshift. Yet.

You might get way better value by just plonking another GPU into your build.
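The estimates above lean on one assumption: that the render engine scales perfectly linearly with GPU count, so render time is simply the single-card time divided by the number of cards. Here’s a small sketch of that math using the 85-second single RTX 4090 figure from this article. The function names are illustrative, and real-world scaling will usually be a little worse than the ideal case.

```python
import math


def ideal_render_time(single_gpu_seconds: float, gpu_count: int) -> float:
    """Render time with `gpu_count` identical GPUs, assuming perfect linear scaling."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return single_gpu_seconds / gpu_count


def gpus_needed(single_gpu_seconds: float, target_seconds: float) -> int:
    """Smallest number of identical GPUs that reaches `target_seconds` under linear scaling."""
    return math.ceil(single_gpu_seconds / target_seconds)


RTX_4090_SINGLE = 85.0  # seconds, single-card Redshift result quoted in this article

for count in (1, 2):
    seconds = ideal_render_time(RTX_4090_SINGLE, count)
    print(f"{count}x RTX 4090: ~{seconds:.1f} s")

# Midpoint of the ~45-47 s estimate for four RTX 3080s quoted above.
FOUR_RTX_3080 = 46.0
print("RTX 4090s needed to match 4x RTX 3080:", gpus_needed(RTX_4090_SINGLE, FOUR_RTX_3080))
```

In other words, you’d need two RTX 4090s just to edge past an existing four-card RTX 3080 node, which is exactly why the physical size of these cards matters so much for render nodes.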

Recommendation for GPU render engines that scale linearly (Redshift, etc.):

Adding more GPU horsepower to speed up renders is an important aspect of GPU rendering and gives professionals some much-needed flexibility.

Wait for blower-style RTX 4090 cards (if any appear) or more power-efficient SKUs like the RTX 4070.

As of October 2022, blower RTX 3080/3090 graphics cards remain the best way to go.

Are the Nvidia RTX 4090 and 4080 Worth It? [Conclusion]

Nvidia seems to have purposely designed its 40-series lineup to make everything except the RTX 4090 look like an awful bargain.

Of course, it could be because stocks of RTX 30-series are still stuck on shelves. Clearing them out at the highest possible price is essential to maintaining Nvidia’s revenue numbers from the past couple of years.

In my opinion, Nvidia really only wants to sell you the RTX 4090. For anything else, they want a steep premium that’s not worth it.

Those who want cheaper options are left with (probably) lousy-value RTX 4080s or 2-year-old RTX 30-series options that are still selling near their MSRP.

For now, I’d recommend staying away from the RTX 40-series until one of two things happens:

- Nvidia launches more power-efficient parts like RTX 4070 (assuming they offer reasonable performance uplifts over last-gen parts)

- You start to see more efficient 2-slot RTX 4090 graphics cards aimed at professionals (assuming their heat and power draw are managed well)

Thoughts on the ‘Unlaunching’ of the RTX 4080 12GB

Even though Nvidia rolled back the release of the controversial RTX 4080 12GB, I still think it was simply a way to distract people from the gimped RTX 4080 still sitting quietly at a whopping $1199.

Also, it allowed Nvidia to say this on their launch slides:

RTX 4080 | Starting at $899

Sounds like a sweet deal when put like that, doesn’t it?

Over to You

What do you think about Nvidia’s RTX 40-series launch? Good, bad, or downright ugly? Let us know in the comments or on our forum!


4 Comments

25 April, 2023

What’s the word on the new 4070?

30 October, 2022

Some other sites, like Tom’s Hardware, have tested power limiting in games and noticed that the performance loss at a 70% power limit is only about 5%. With the power limit, you cap the maximum GPU clock, which is what causes the high power draw. But as you say, for rendering the clock is actually a lot lower, so the power draw should also be lower, and hence power limiting might have less effect. That would be a great test to do on your wonderful site, as I’m sure a lot of people are curious about this too ;).

30 October, 2022

Nvidia purposely killed off blower-style RTX 3090 cards (and urged AIB partners to do the same) because they want you to buy Quadro cards. Also, these blower-style cards have a lot of heat problems and often throttle, while the fan-style cards run much cooler and at full efficiency.

In addition, the 3090 and 4090 have 24GB of memory, while those older XX80-class cards only have 12GB or less of VRAM, which for complex scenes would force you to do out-of-core rendering or, for render engines that can’t do that, limit the complexity or just make it crash.

And here in Europe, energy prices are insane, so 4 of these older cards eat way too much energy given their lower performance per watt. So the 4090 seems like the best option to me.

I have looked into adding 2 RTX 4090s in one system, but because these cards are so huge, even on a WRX80 board it’s a challenge to have more than 1.5 slots of space between the cards, which I would consider the minimum for the top card not to ‘choke’. As an added disadvantage, the lower card blows its hot air upwards through the third fan, right into the air intake of the top card’s third fan. This would certainly have a performance and temperature impact on the top card.

On top of that, the combined heat output of almost 900 watts of hot air blown directly into the case makes it a challenge to cool your system as a whole, certainly when combined with a 280-watt Threadripper.

So realistically speaking, one RTX 4090 will be enough for most, and it’s still the best choice over older cards.

3 November, 2022

Hey Jerghal,

The thing is, when stacking cards (more than 2), axial coolers don’t work very well because the bottom cards end up blowing hot air into the intakes of the ones sitting above them, which increases the chances of throttling. This isn’t too much of an issue with lower wattage cards, but with multiple cards that can give off over 300W of heat, you need the air to go straight out of the case and not circulate within. That’s where blowers help. And RTX 3090 blower cards seem to be coming back in stock slowly, but currently still sitting at scalped prices (https://www.amazon.com/Gigabyte-NVIDIA-GeForce-Graphics-GV-N3090TURBO-24GD/dp/B08KHKDTSJ).

And as you said, it’s really the size of the RTX 4090 that’s the problem. Power scaling benches show that these cards CAN run efficiently if not pushed way past the efficiency curve. But it is what it is, I guess 🙂

As for power, yes, if you’re building a system from scratch, an RTX 4090 is probably all you need now. It’s more about existing rigs and professionals who want a boost to their render speeds. If you’re already running multiple cards, it’s worth it to just wait for better releases or add another GPU to your setup if you can.

Cheers, and thanks for the insight!

Jerry