
After ‘unlaunching’ the confusingly named RTX 4080 12GB, Nvidia is now ready to ‘launch’ the real GeForce RTX 4080 (16GB) in its Ada Lovelace GPU lineup. This is only the second graphics card to support Nvidia’s brand-new DLSS 3.0, and it features next-gen RT cores that boost ray tracing performance significantly.

If you want a complete overview of the architectural changes with Ada Lovelace and recent announcements about them, do check out our initial coverage!

Today, we’ll explore popular professional workloads like GPU rendering and video production on the Nvidia GeForce RTX 4080 16GB Founders Edition.

Saying that we have high hopes for this product would be an understatement.

Although the GeForce RTX 4090 is an uncontested monster when it comes to professional workloads like GPU rendering, its equally monstrous size (at least among the models available at the time of writing) limits the number of GPUs you can add to your render node. This isn’t much of a concern for workloads that don’t scale well with multiple GPUs.

But it’s an important factor to consider for GPU render engines that do scale almost linearly with additional GPUs you throw at them (and fit into your PC).

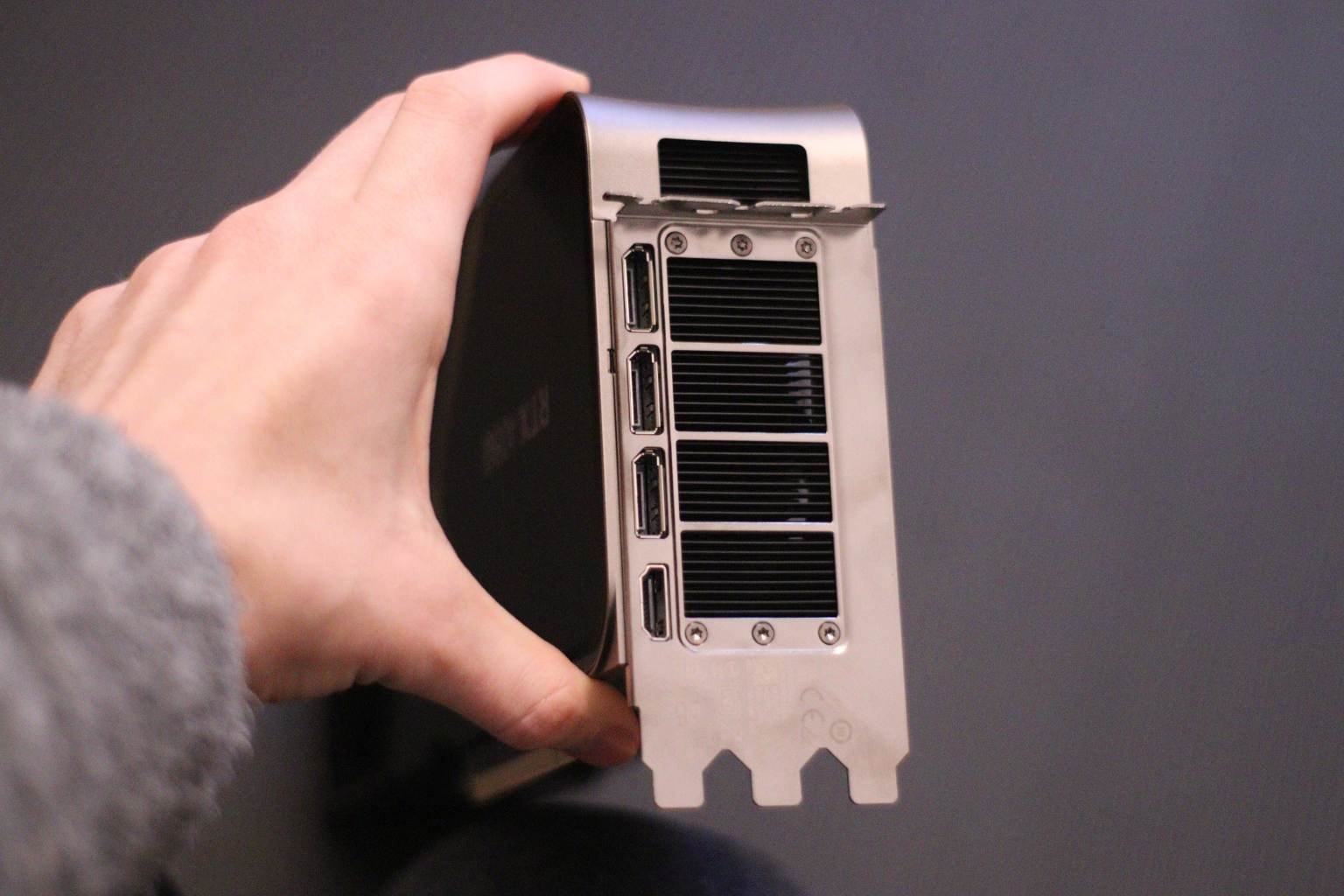

The RTX 4080 Founders Edition is exactly 3 slots wide, leaving enough room for at least dual-GPU setups without being pigeonholed into motherboards with a strange PCIe layout or hilariously huge cases. Fingers crossed that AIB models will be more modestly sized.

What we really want is 2-slot blower models, Nvidia!

While the RTX 30-series did offer a marked improvement in performance over the RTX 20-series, the price point was prohibitively high, and availability was nearly non-existent.

Will the RTX 4080 finally be a worthy successor to the RTX 2080 Ti?

RTX 4080 Review – Our Test Bench

Test System – Here’s the hardware we paired with the Nvidia GeForce RTX 4080 16GB:

- CPU: AMD Ryzen 9 5900X 12-core Processor

- Graphics Card: Nvidia RTX 4080 16GB

- CPU Cooler: beQuiet! Dark Rock Pro 4

- RAM: G.Skill TridentZ Royal 128GB DDR4 (4x 32GB, CL16 – 3600)

- Motherboard: MSI Prestige Creation X570

- Power Supply: Corsair HX850i 850W

- Storage: Seagate FireCuda 530 2TB PCIe 4.0 NVMe SSD

- OS: Windows 10 Pro, 64-bit

Here are the RTX 4080’s specs as reported by GPU-Z:

Creator workloads that stress the GPU aren’t too CPU-heavy, so we went with a reasonable AMD Ryzen 9 5900X as our CPU.

Note – Since we haven’t paired the RTX 4080 with the fastest available CPU, our gaming numbers could be slightly lower than those reported by other outlets.

GeForce RTX 4080 Founders Edition Technical Specifications and MSRP

| GPU | GeForce RTX 3080 Ti | GeForce RTX 4090 | GeForce RTX 4080 |
|---|---|---|---|
| CUDA Core Count | 10240 | 16384 | 9728 |
| Base Clock (GHz) | 1.37 | 2.23 | 2.205 |
| Boost Clock (GHz) | 1.665 | 2.52 | 2.505 |
| VRAM | 12 GB GDDR6X | 24 GB GDDR6X | 16 GB GDDR6X |
| Memory Bus Width | 384-bit | 384-bit | 256-bit |
| TDP | 350W | 450W | 320W |
| Launch MSRP | $1199 | $1599 | $1199 |

From a specs standpoint, it’s pretty apparent that Nvidia strips away quite a bit from the top-tier RTX 4090 to arrive at the RTX 4080. In fact, its specifications (like the narrower memory bus and the reduced CUDA core count) more closely resemble a 70-class product from Nvidia than an 80-class one.
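To put numbers on that cut, here’s a small Python sketch that computes the RTX 4080’s spec deltas relative to the RTX 4090. It uses only the RTX 4090 and RTX 4080 figures from the table above; the dictionary layout and helper names are just for illustration.

```python
# RTX 4090 and RTX 4080 figures copied from the spec table above.
SPECS = {
    "RTX 4090": {"cuda_cores": 16384, "bus_bits": 384, "vram_gb": 24, "tdp_w": 450, "msrp_usd": 1599},
    "RTX 4080": {"cuda_cores": 9728, "bus_bits": 256, "vram_gb": 16, "tdp_w": 320, "msrp_usd": 1199},
}


def relative_change(new: float, old: float) -> float:
    """Percentage change from `old` to `new`; negative values are cuts."""
    return (new - old) / old * 100.0


def compare(card: str, baseline: str) -> dict[str, float]:
    """Percentage difference of `card` relative to `baseline` for every spec field."""
    return {field: relative_change(SPECS[card][field], SPECS[baseline][field])
            for field in SPECS[card]}


for field, pct in compare("RTX 4080", "RTX 4090").items():
    print(f"{field:>10}: {pct:+.1f}%")
```

By these figures, the RTX 4080 gives up about 41% of the RTX 4090’s CUDA cores and a third of its memory bus width, while its launch MSRP is only about 25% lower.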

Nonetheless, if it manages to offer stellar performance within a reasonable power envelope – we’re going to be very happy with it.

So, without further ado, let’s get started with the performance numbers and find out!

Exploring GPU Rendering Workloads with the RTX 4080: Finally, A Worthy Successor to the Aging RTX 2080 Ti

GPU rendering is a highly parallelized workload that benefits from additional GPUs. In this section, we’ll cover a few popular GPU renderers and determine whether buying an RTX 4080 for GPU rendering makes sense.

At the end of each benchmark section, we’ll also list the max power draw detected during a render. We’ve seen some discussion (and misinformation) floating around about RTX 40-series power draw, so this will give creators another data point to consider for their upgrades.
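If you’d like to capture a comparable peak reading on your own system, one option is to poll Nvidia’s NVML library while a render runs. The sketch below assumes the `nvidia-ml-py` package (imported as `pynvml`) is installed; the helper names `sample_max_power` and `measure_gpu_peak_watts` are hypothetical, and the sampling loop is kept separate so it works with any function that returns watts.

```python
import time
from typing import Callable


def sample_max_power(read_watts: Callable[[], float], samples: int, interval_s: float = 0.1) -> float:
    """Call `read_watts` `samples` times, `interval_s` apart, and return the peak in watts."""
    peak = 0.0
    for _ in range(samples):
        peak = max(peak, read_watts())
        time.sleep(interval_s)
    return peak


def measure_gpu_peak_watts(gpu_index: int = 0, seconds: float = 60.0, interval_s: float = 0.1) -> float:
    """Peak board power of one Nvidia GPU over `seconds`, read through NVML.

    Assumes nvidia-ml-py is installed (pip install nvidia-ml-py).
    Start it while the render is running; NVML reports power in milliwatts.
    """
    import pynvml  # imported lazily so sample_max_power works without a GPU

    pynvml.nvmlInit()
    try:
        handle = pynvml.nvmlDeviceGetHandleByIndex(gpu_index)
        samples = max(1, int(seconds / interval_s))
        # Convert NVML's milliwatt reading to watts for each sample.
        return sample_max_power(
            lambda: pynvml.nvmlDeviceGetPowerUsage(handle) / 1000.0, samples, interval_s
        )
    finally:
        pynvml.nvmlShutdown()
```

Polling every 100 ms can miss very short spikes, and the driver may smooth its power readings, so treat the result as an approximate peak rather than a transient maximum.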

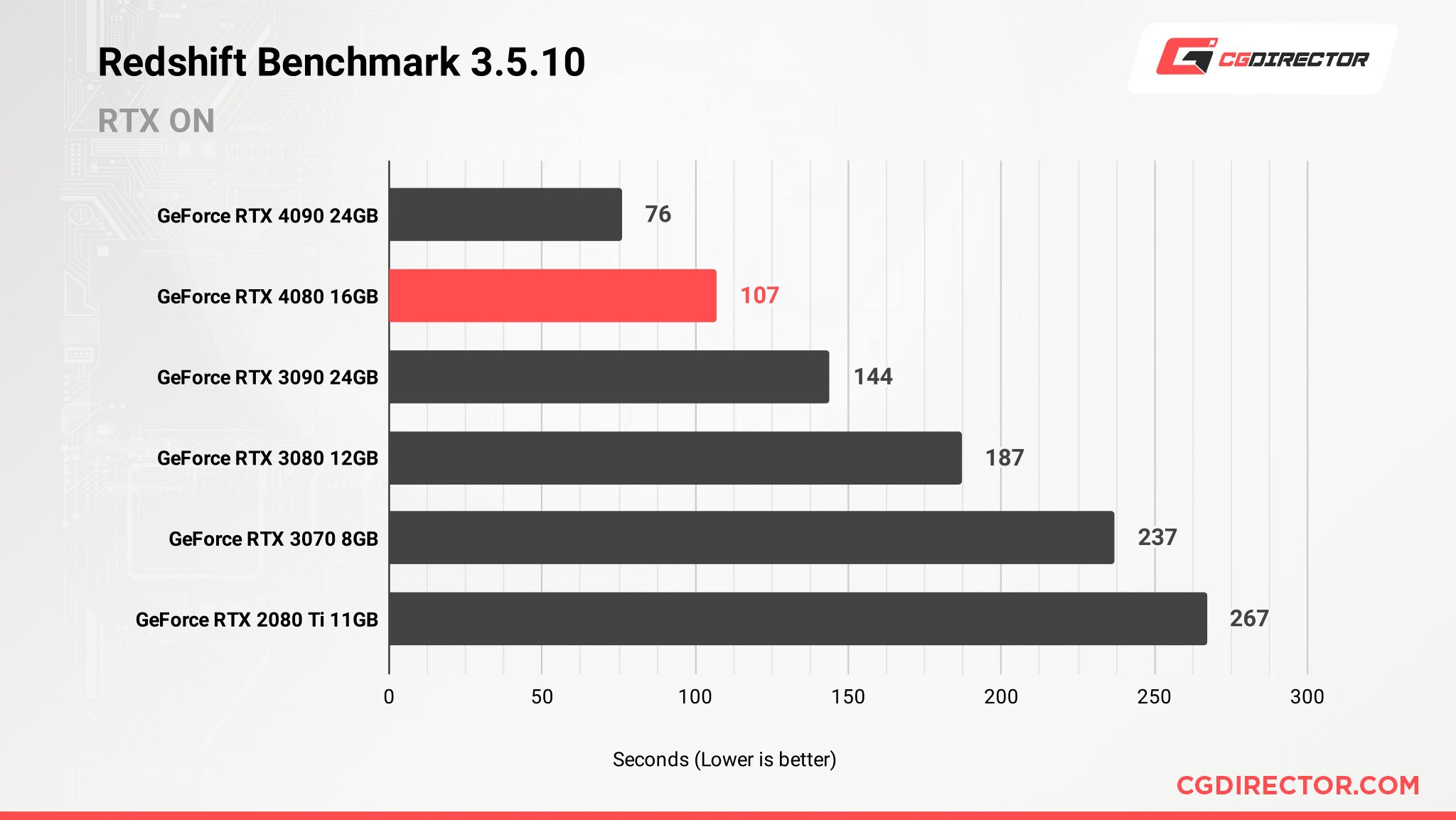

RTX 4080 Redshift Performance Benchmark

Redshift has been around for ages and has become very popular in the CG space among 3D artists. If you rely on it for your work, you’re pretty much stuck with Nvidia for now because AMD Radeon GPUs aren’t fully supported yet.

Let’s see how the RTX 4080 fares in the official Redshift Benchmark, shall we?

With RT on, the GeForce RTX 4080 Founders Edition easily outpaces the GeForce RTX 3090, cutting render times by around 25% at a lower price (going by launch MSRP). If it also ends up saving power in the process, it isn’t a ‘bad’ deal in any way.

Although I do acknowledge the appeal of spending an extra $400 on an RTX 4090 to cut your render times by another ~30%, I urge you to reconsider unless you’re sure you’ll only need a single GPU now and for the foreseeable future. Mining rigs with multiple RTX 4090s are always an option, though!
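"Percent less render time" and "percent faster" aren’t the same number, which matters when weighing a single RTX 4090 against two RTX 4080s. The sketch below converts a render-time reduction into a throughput multiplier (a 25% cut means 1 / (1 − 0.25) ≈ 1.33×) and models multi-GPU scaling with a single efficiency factor. The 0.95 efficiency is an assumption for illustration, not something we measured in this review; the prices are the launch MSRPs from the spec table.

```python
def speedup_from_time_reduction(reduction: float) -> float:
    """Throughput multiplier implied by cutting render time by `reduction` (0.25 = 25%)."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


def multi_gpu_speedup(n_gpus: int, efficiency: float = 0.95) -> float:
    """Speedup of n identical GPUs over one, where each extra GPU adds `efficiency`
    of a full GPU's throughput. The 0.95 default is an assumption, not a measurement."""
    return 1.0 + (n_gpus - 1) * efficiency


rtx_4090_vs_4080 = speedup_from_time_reduction(0.30)  # ~30% shorter renders than one RTX 4080
two_4080s = multi_gpu_speedup(2)                      # hypothetical scaling efficiency
print(f"1x RTX 4090: {rtx_4090_vs_4080:.2f}x for $1599")
print(f"2x RTX 4080: {two_4080s:.2f}x for ${2 * 1199}")
```

Under those assumptions, two RTX 4080s get close to double a single card’s throughput versus about 1.43× for one RTX 4090, at a higher upfront cost and twice the slot space. Swap in your own efficiency figure if you’ve measured scaling in your render engine.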

However, if you’re still on the RTX 2000 series, the RTX 4080 offers a remarkable reduction in render times at around the same price you paid for an RTX 2080 Ti. It’ll drop render times by well over half!

Yes, comparing products that are two generations apart is not fair, but honestly, the RTX 3000 series and months of scalper prices seem like a bad dream that most would rather forget.

Max Reported Power Draw for the RTX 4080 during the Redshift Benchmark: 218W

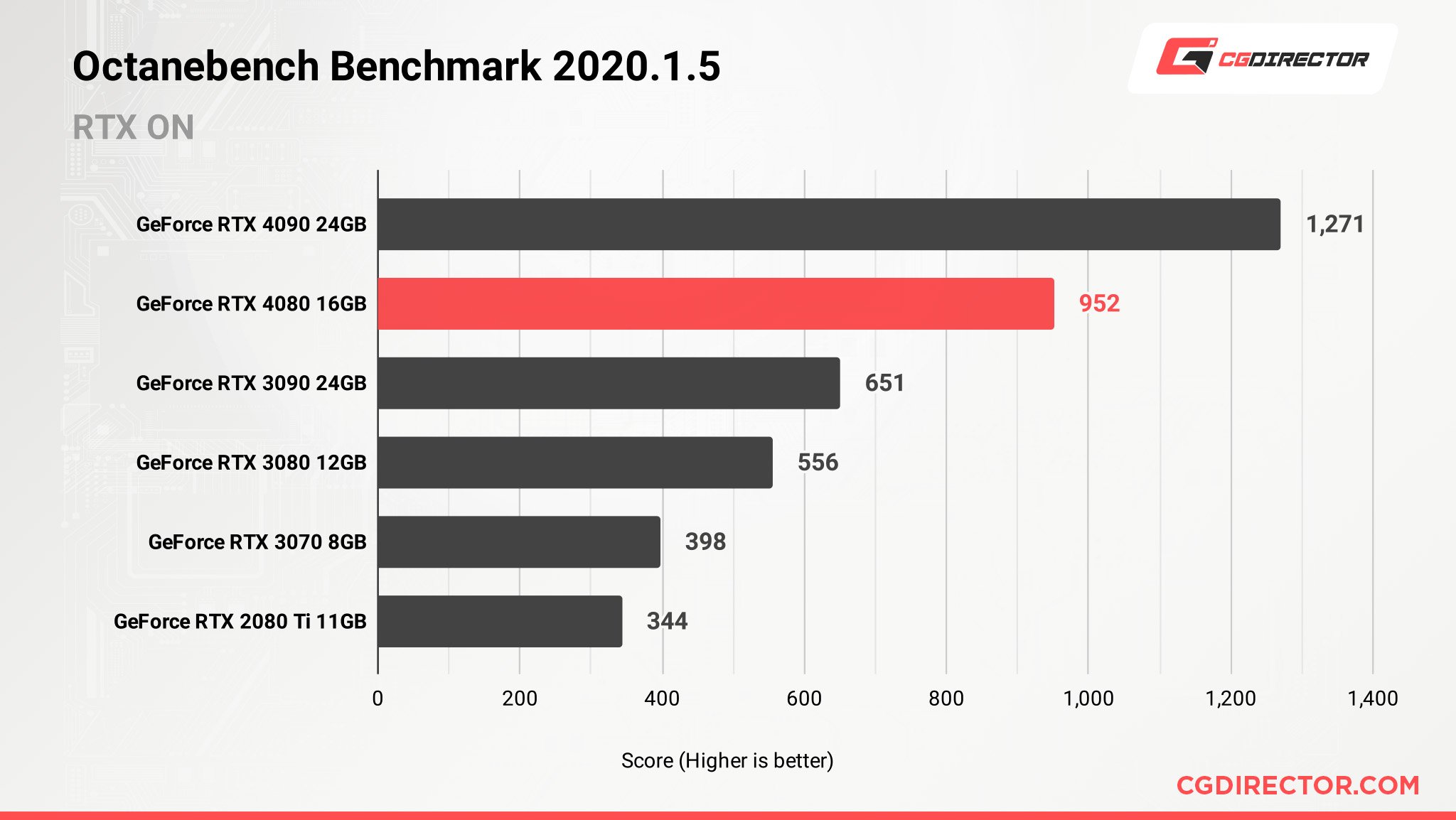

RTX 4080 Octane Performance Benchmark

OctaneRender prides itself on being the world’s first AND fastest unbiased, spectrally correct GPU render engine. Its OctaneBench benchmark renders a set of test scenes and produces a single score that reflects your GPU’s rendering performance.

Here’s how the RTX 4080 stacks up against its peers (Octane is yet again Nvidia-only):

The RTX 4080 trounces the RTX 3090 in Octane with a 46% higher score if you leave RT on!

Surprising? Yes! Unwelcome? Hell, no!

That said, given the much more powerful RT cores in the RTX 40-series, it isn’t entirely unexpected.

For OctaneRender, the RTX 4080 is a noticeable upgrade if you can find one for around the MSRP. Again, just like Redshift, multiple GPUs scale well on Octane, so don’t be tempted to reach for that RTX 4090 unless you’re sure you won’t need to add more GPUs down the line.

Max Reported Power Draw for the RTX 4080 during the Octane Benchmark: 251W

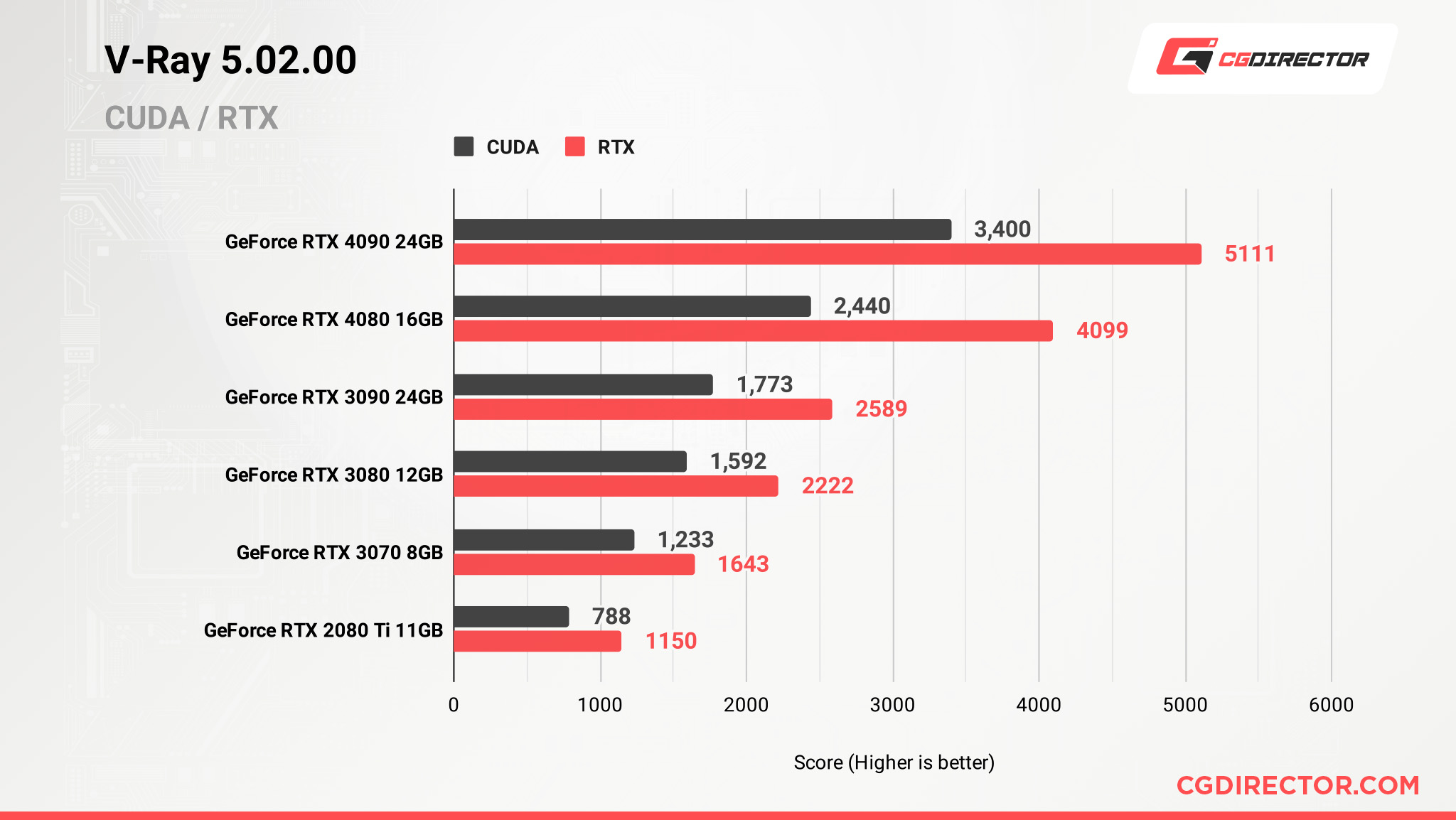

RTX 4080 V-Ray 5 GPU Benchmark

V-Ray is yet another 3D rendering engine and boasts integrations with several professional apps like 3ds Max, SketchUp, Maya, Cinema4D, Rhino, and more. It offers both CUDA-accelerated and RT-accelerated render options.

Let’s see how the RTX 4080 compares to its last-gen counterparts, as well as the RTX 4090:

In what’s becoming a trend, the RTX 4080 again handily stomps the RTX 3090 to bits in V-Ray 5 with RTX. You can expect a 58% faster render when using RT acceleration.

However, if you’re using just CUDA or your scene doesn’t have many elements that can leverage RT acceleration, the improvement is much more modest at 37%.

Don’t get me wrong – that’s not unimpressive. It’s just so much faster when you CAN leverage the RTX 4080’s RT cores.

Max Reported Power Draw for the RTX 4080 during the V-Ray Benchmark (RTX): 204W

Max Reported Power Draw for the RTX 4080 during the V-Ray Benchmark (CUDA): 202W

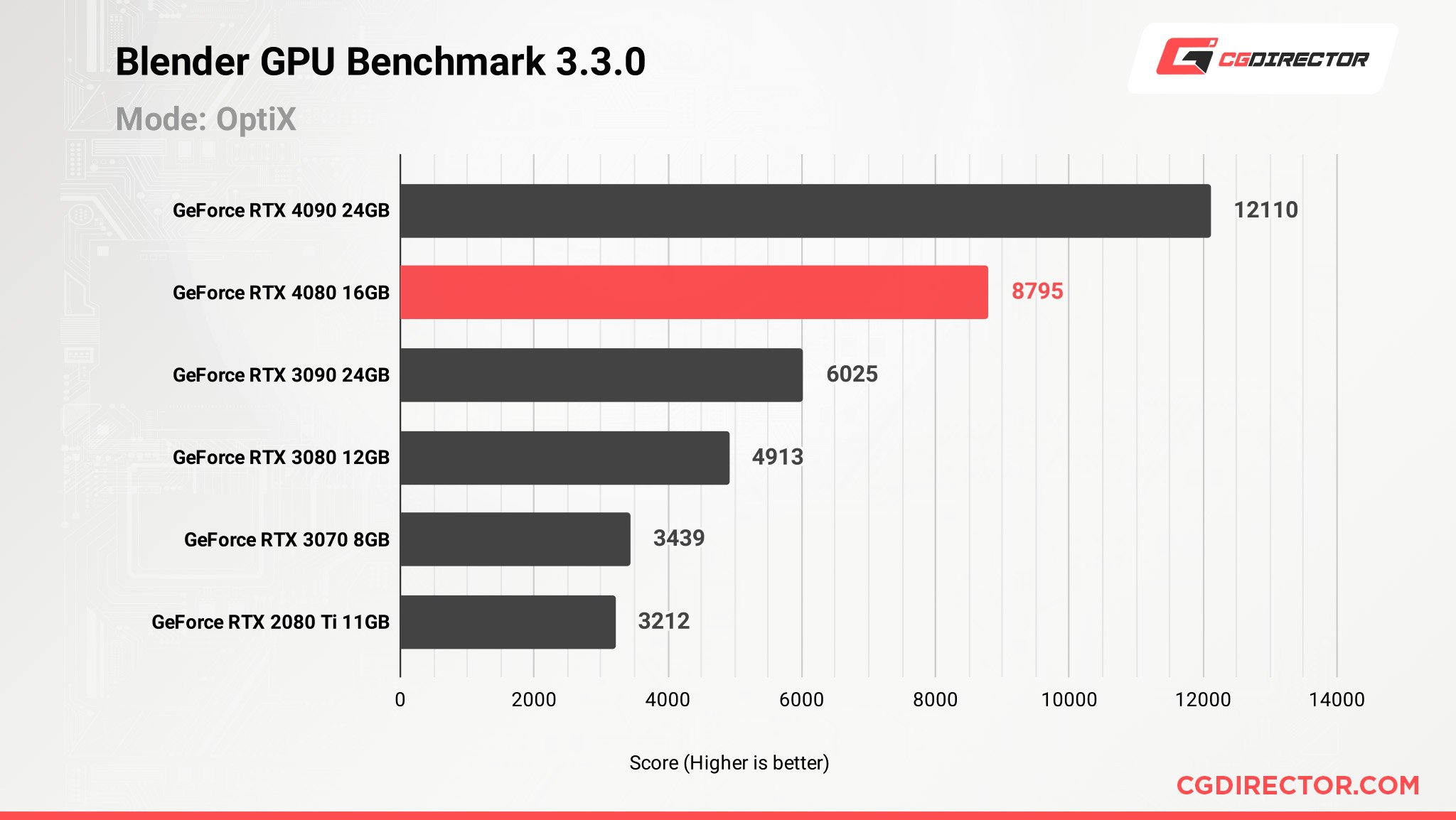

RTX 4080 Blender Cycles Benchmark

Blender has become one of the most popular 3D apps in the world, and its Cycles renderer can use OptiX to tap into Nvidia’s RT cores for much faster renders!

We’ve already seen how much Nvidia’s RT cores help Blender’s rendering performance, so we’re expecting more of the same with the RTX 4080 as well:

Keeping in line with the other numbers, the GeForce RTX 4080 Founders Edition improves on the RTX 3090 with a nearly 46% performance bump in Blender’s Cycles renderer! We can’t really call that a marginal uplift, especially when you factor in its lower price point.

Max Reported Power Draw for the RTX 4080 during the Blender Benchmark: 222W

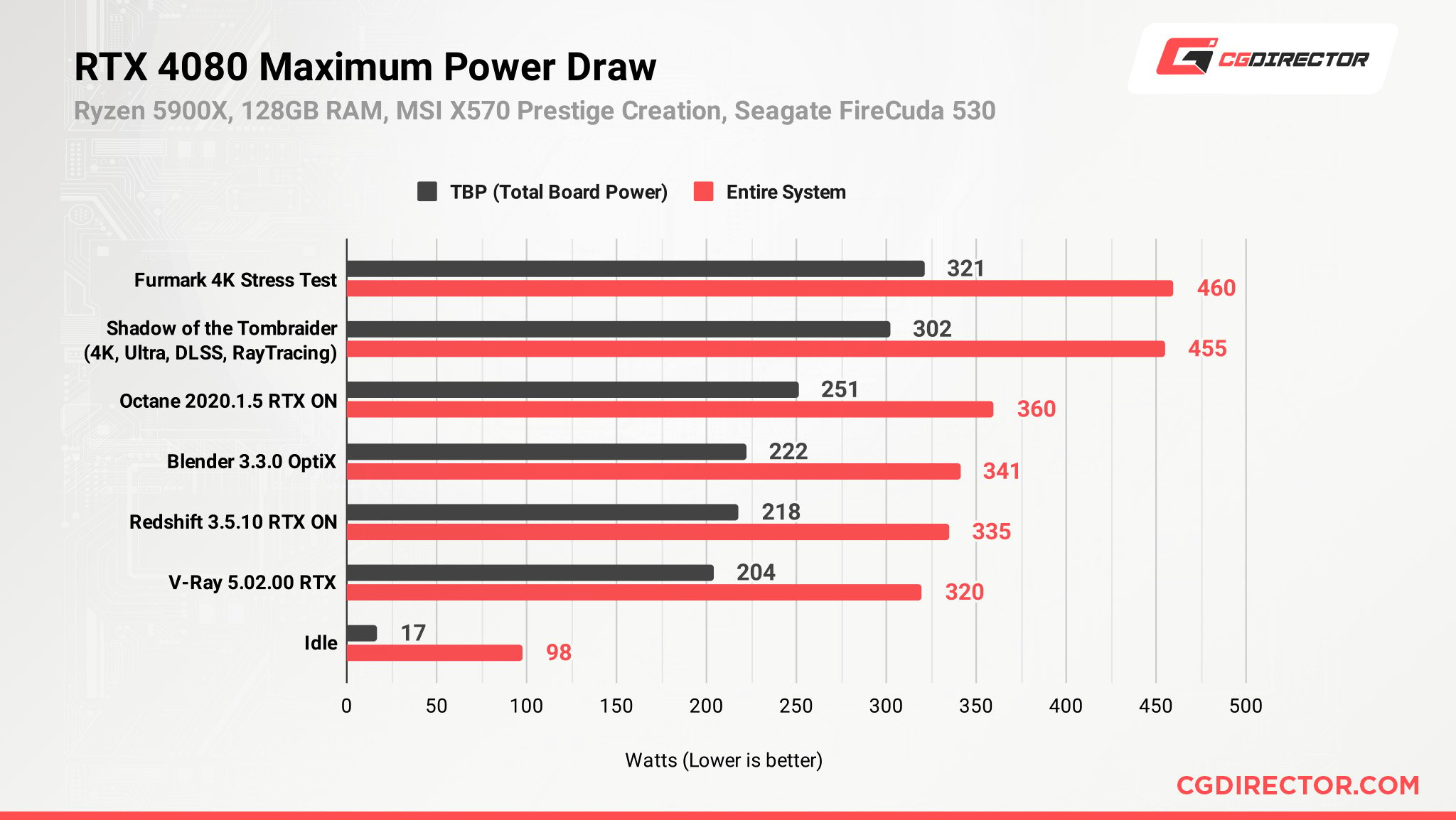

Investigating Power Draw Numbers for GPU Rendering Workloads: Much Ado About Nothing for Creators?

While independent reviews have shown abnormally high power draw for the RTX 40-series products, we have always maintained that rendering workloads won’t consume as much power.

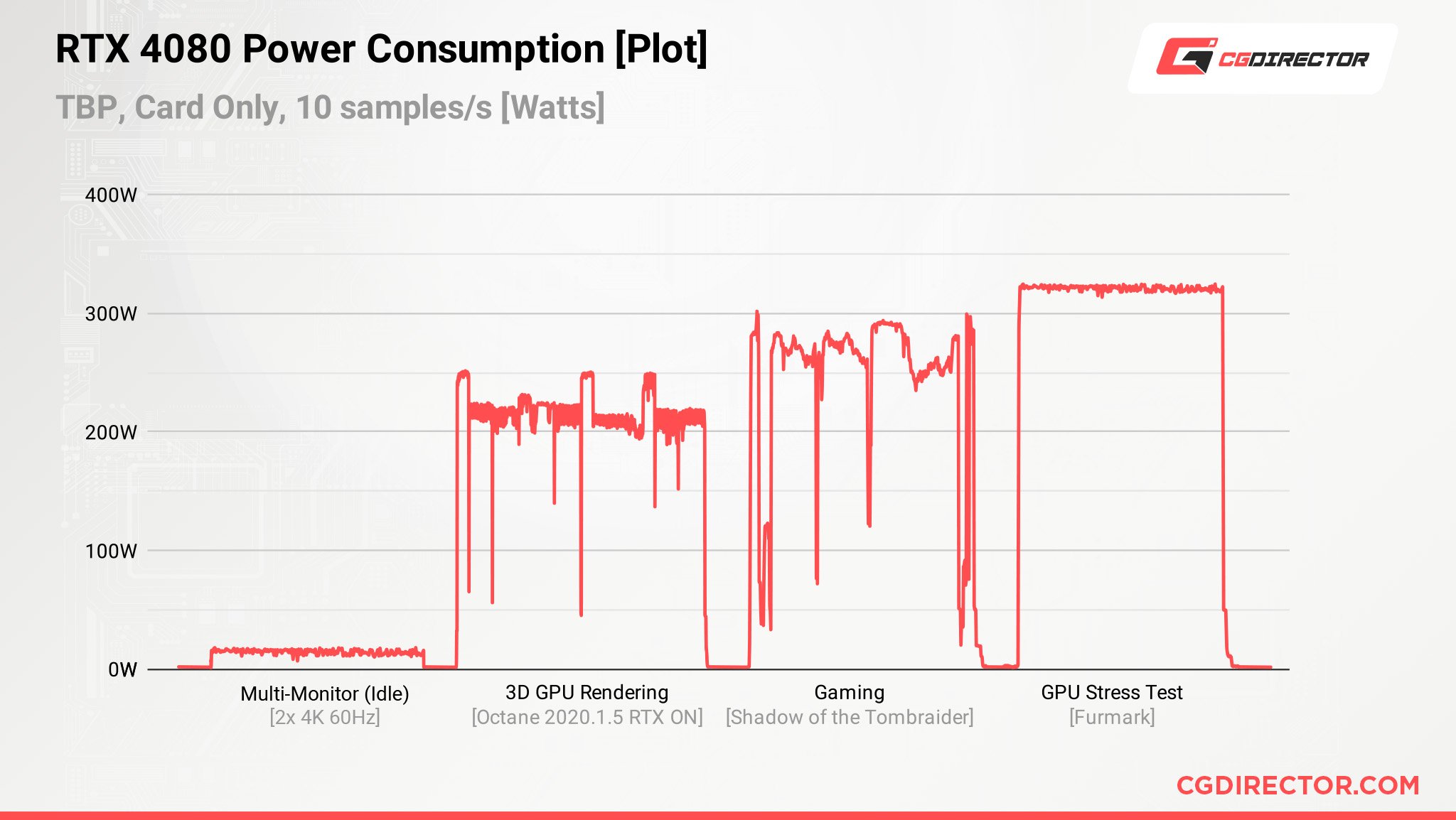

This time around, we decided to put a solid number to those claims, and here’s how the RTX 4080 performed in different types of workloads:

As you can see here, the RTX 4080 draws just a smidge over 250W at the very worst (in Octane 2020.1 with RTX ON). That’s a far cry from the power draw during gaming at 4K, which sits well over 300W!

Why? Well, it’s simple. GPU rendering is so highly parallelized that clocks don’t boost up to consume relatively massive amounts of power. Gaming, on the other hand, relies on processing in real time, and higher clocks help process those tasks faster.

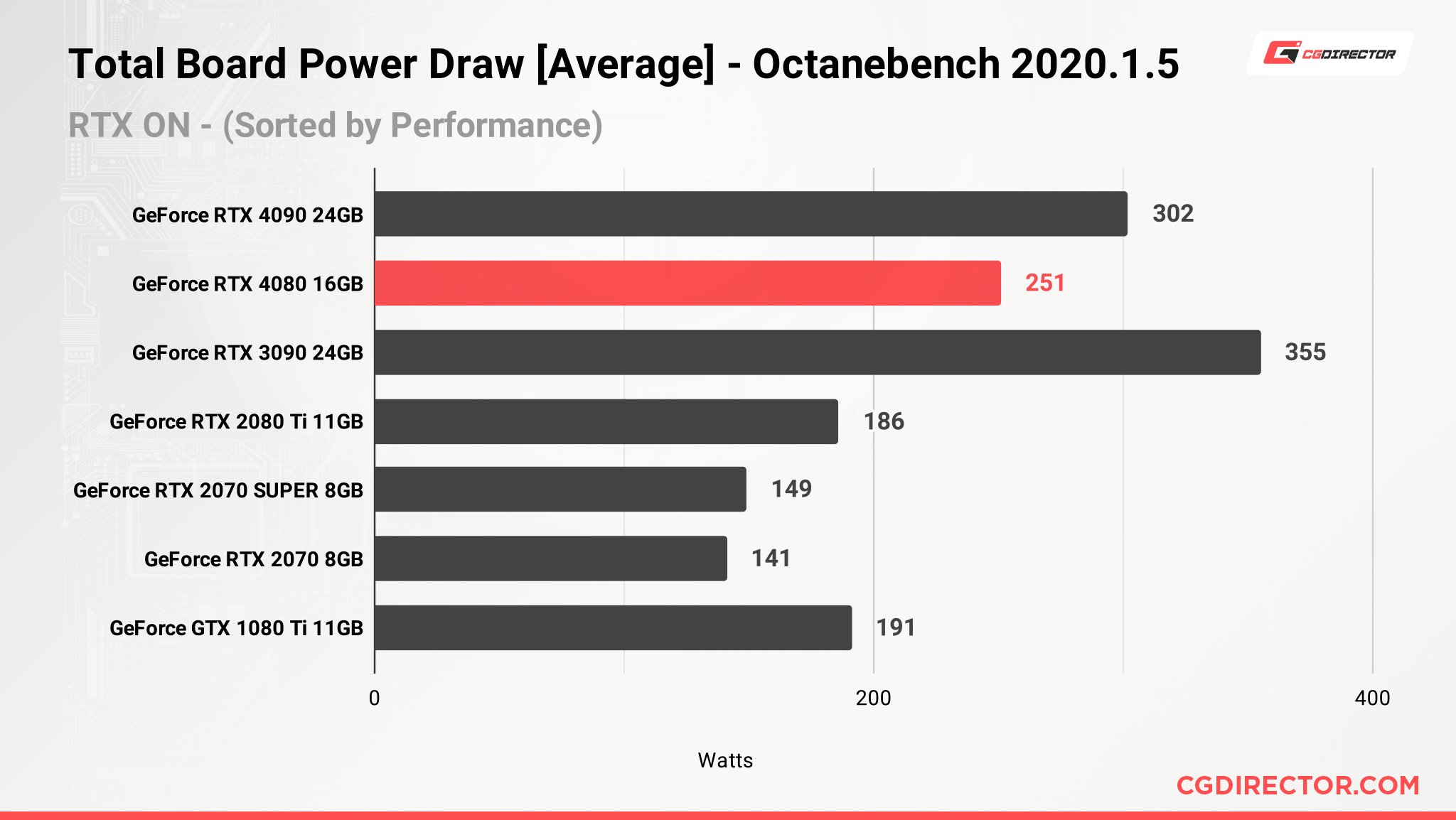

So, since we have the worst power draw result with Octane, let’s see how other Nvidia GPUs fare when it comes to power drawn in OctaneBench.

Well, one of the first numbers you’ll notice will be the RTX 4090. The supposedly power-hungry RTX 40-series part quietly zips through renders while drawing just 300W!

That’s pretty far from its 450W spec and way lower than its gaming power draw. That said, these ARE still the most power-hungry GPUs Nvidia has made in the past ~5 years (if you ignore the RTX 3090).

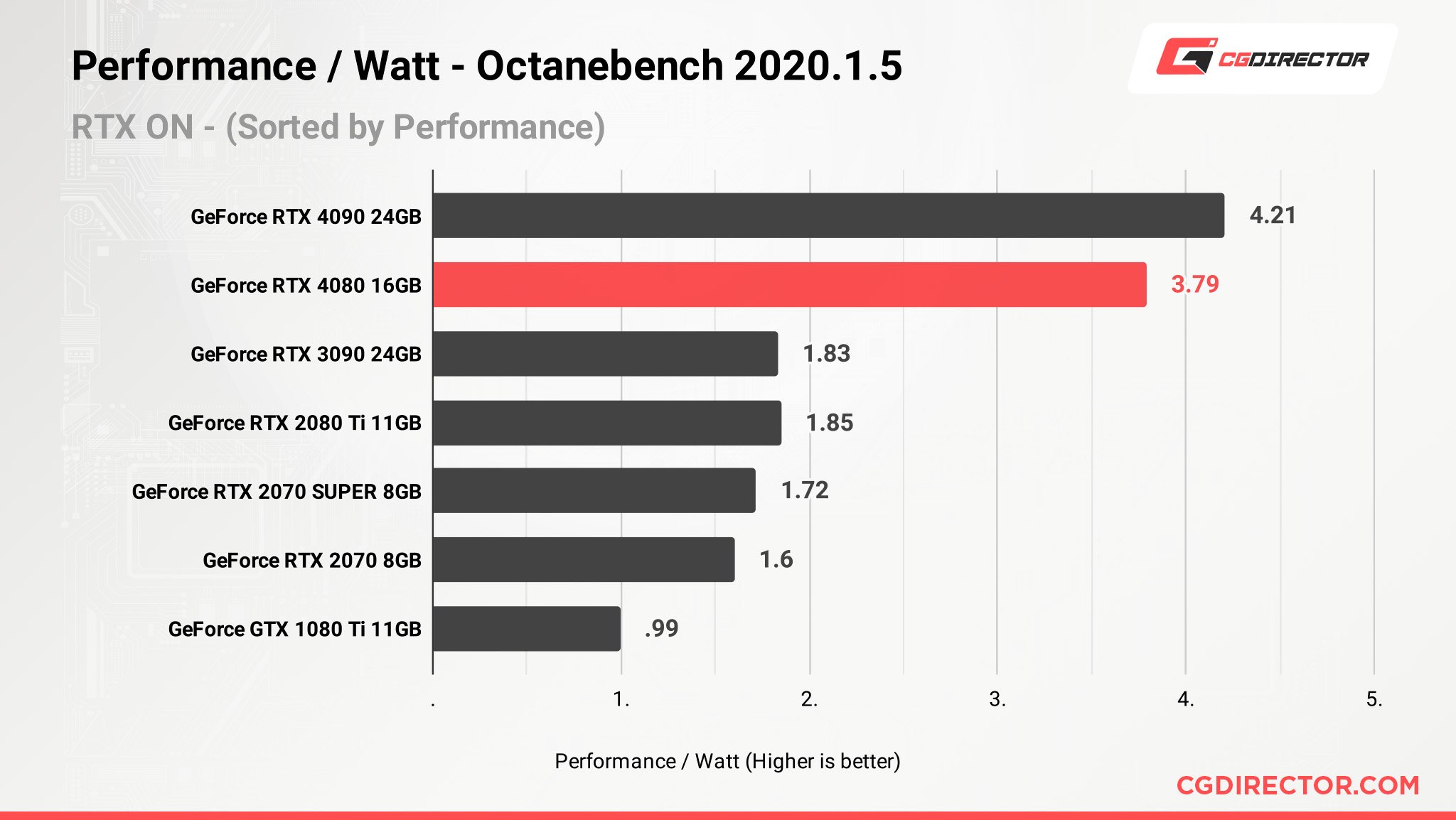

With these power numbers, it’s clear that both the RTX 4090 and the 4080 deliver a substantial increase in performance/W. That said, coolers are still built to handle gaming loads, so 2-slot blower designs probably won’t be a thing anytime soon.

I have to point out that the RTX 3090 looks particularly egregious in this metric.

If you can find an RTX 4080 for around the MSRP, it’s finally good enough value for professionals looking to upgrade from their RTX 2080 Ti setups.

Why is the Power Draw Much Lower for Rendering?

GPUs can be pushed well past their point of maximum efficiency to make sure they clock higher. Gaming workloads require higher clock speeds for better performance because many effects and real-time render techniques just can’t be parallelized effectively.

On the other hand, GPU rendering is a perfectly parallel workload that can be distributed evenly across the multiple cores of the GPU.

It doesn’t need the GPU to hit boost clock speeds to deliver the required performance and runs much more efficiently.
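For readers wondering why pushing clocks costs so much power, the standard first-order model of CMOS dynamic power is a useful reference. It’s a textbook approximation rather than something we measured, and it ignores static (leakage) power:

$$
P_{\text{dyn}} \approx \alpha \, C \, V^{2} f, \qquad V \propto f \;\Rightarrow\; P_{\text{dyn}} \propto f^{3}
$$

Here α is the activity factor (the fraction of the chip switching each cycle), C the switched capacitance, V the core voltage, and f the clock frequency. Near the top of a GPU’s voltage/frequency curve, higher clocks need roughly proportionally higher voltage, so dynamic power grows roughly with the cube of the clock: a 10% clock bump can cost on the order of 1.1³ ≈ 1.33, or about 33% more power. The α term also means that a workload keeping fewer units busy draws less power at the same clock.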

Idle Multi-Monitor Power Draw for GeForce RTX 4080 Founders Edition

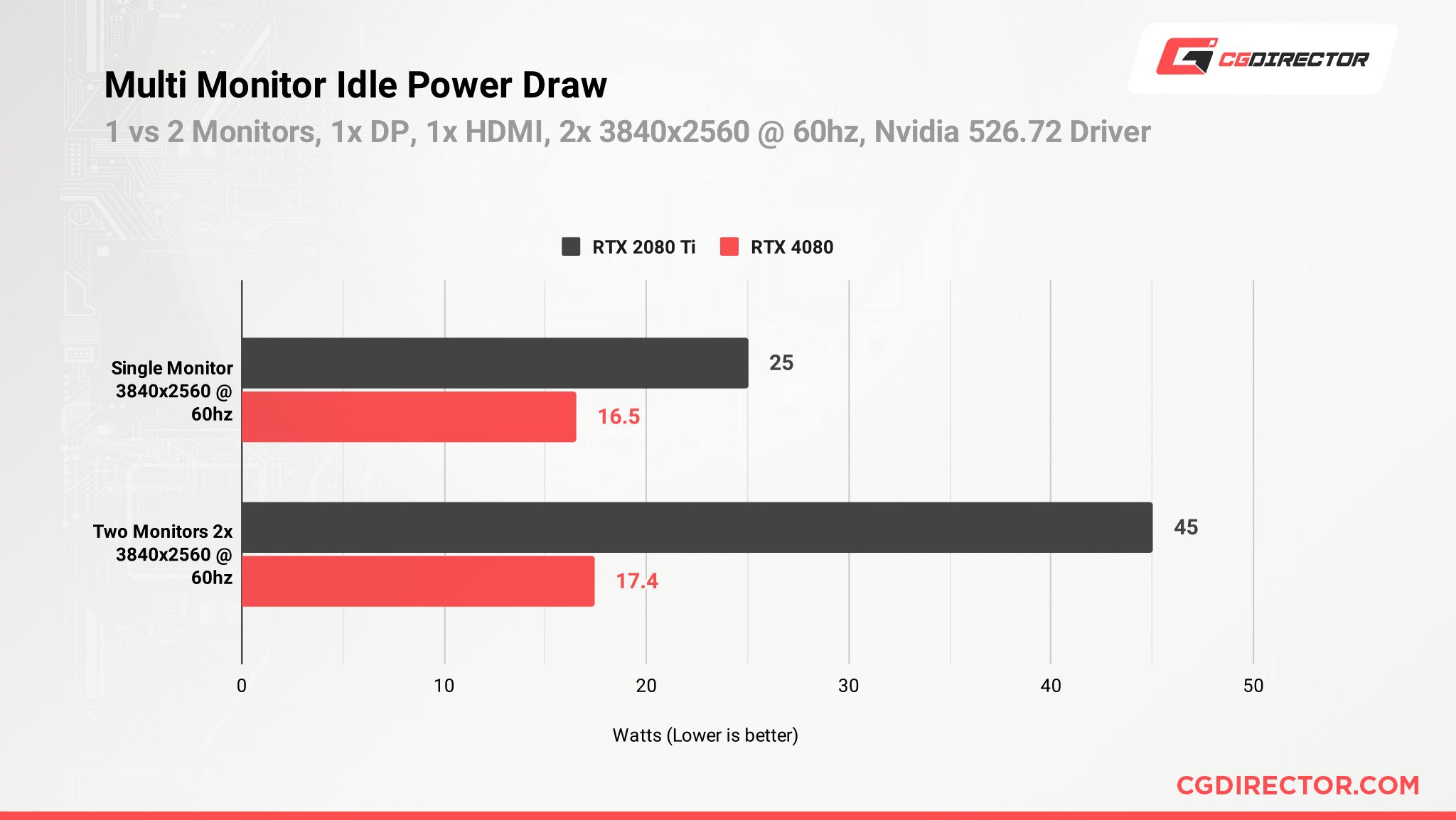

Previously, we found that some Nvidia graphics cards (especially many of the RTX 20-series cards) drew substantially more power when driving multiple high-res monitors.

We wondered whether this was still a thing, and we can safely report that it’s not.

Dual monitors now consume just 1W more than a single monitor. It’s certainly a welcome improvement for professionals who find it nearly impossible to get work done without multiple monitors.

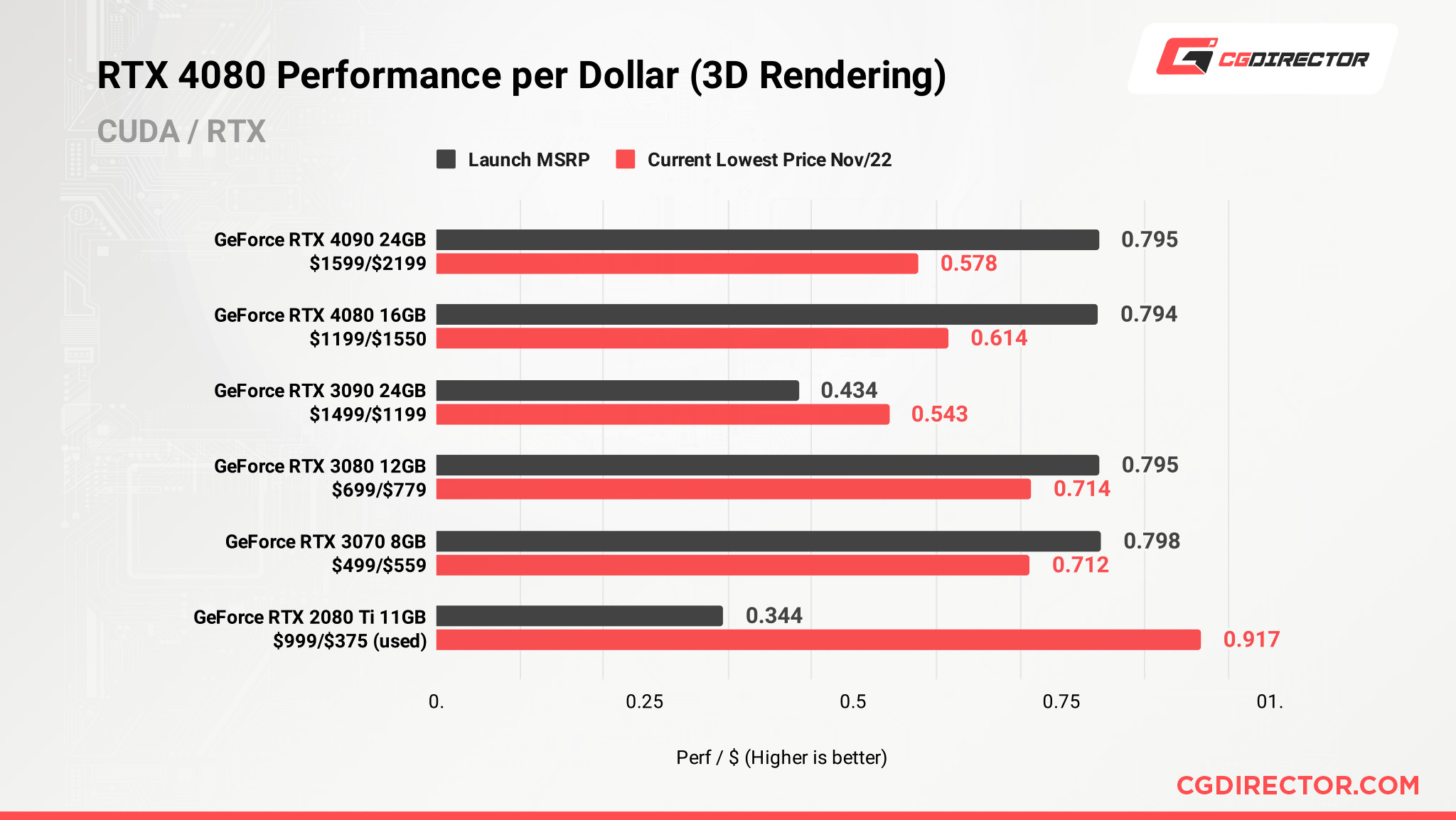

Performance Per Dollar: Better? Worse? Or the Same?

Nvidia has been almost clinical in how it has priced performance over the last couple of generations. Seeing these numbers really puts Nvidia’s pricing into perspective.

Here’s how the performance/$ looks for 3D rendering workloads (RTX ON / Octane Renderer):

With the exception of the RTX 3090, every Nvidia card in this chart lands at a perf/$ of ~0.79! If anything, this is further proof that the RTX 3090 at its launch MSRP was a terrible offering.
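Perf/$ is simply the benchmark score divided by the price, so it can be rearranged to find the highest street price at which a card still matches the ~0.79 baseline. A minimal sketch, assuming the chart’s perf/$ is the OctaneBench score divided by the price in USD; the score of 1000 is a hypothetical placeholder, so plug in the result from the chart. It also shows that a price cut improves perf/$ by the ratio of old to new price, whatever the score.

```python
# Approximate perf/$ shared by most Nvidia cards in the chart (OctaneBench score / USD).
REFERENCE_PERF_PER_DOLLAR = 0.79


def perf_per_dollar(score: float, price_usd: float) -> float:
    """Benchmark score per US dollar."""
    return score / price_usd


def break_even_price(score: float, reference: float = REFERENCE_PERF_PER_DOLLAR) -> float:
    """Highest price at which a card with `score` still matches the reference perf/$."""
    return score / reference


def value_gain_from_price_cut(old_price: float, new_price: float) -> float:
    """Perf/$ multiplier from a price cut; independent of the benchmark score."""
    return old_price / new_price


score = 1000.0  # hypothetical placeholder, not a measured result
print(f"Break-even street price: ${break_even_price(score):.0f}")
print(f"$200 cut from $1199: {value_gain_from_price_cut(1199, 999):.2f}x perf/$")
```

For example, a $200 cut from $1199 to $999 improves perf/$ by about 20%, no matter what the card scores.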

So, what does this mean for buyers? Well, if the RTX 4080 product lineup can beat those red bars, opting for the 40-series part makes perfect sense. On the other hand, if prices go in the other direction, you’ll probably find better value in the RTX 30-series graphics cards at their current prices.

Exploring PCIe Performance Scaling on the RTX 4080: Does PCIe 4.0 Really Not Matter, or is Everyone Missing the Point?

Before diving into this one, it’s important to understand what PCIe performance scaling is.

Each successive generation of PCIe offers a significant uplift in bandwidth. We’re now seeing motherboard and SSD manufacturers advertise blazing-fast PCIe 5.0 platforms and products that boast incredible speeds.

PCIe performance scaling explores whether there is any difference going from an older generation of PCIe to a newer one.

So, what do you think? Is there?

If you’ve followed the hardware scene for a while, you’ll probably have seen PCIe scaling benchmarks from popular reviewers that conclude with – there’s no difference. So, we decided to run a few tests as well.

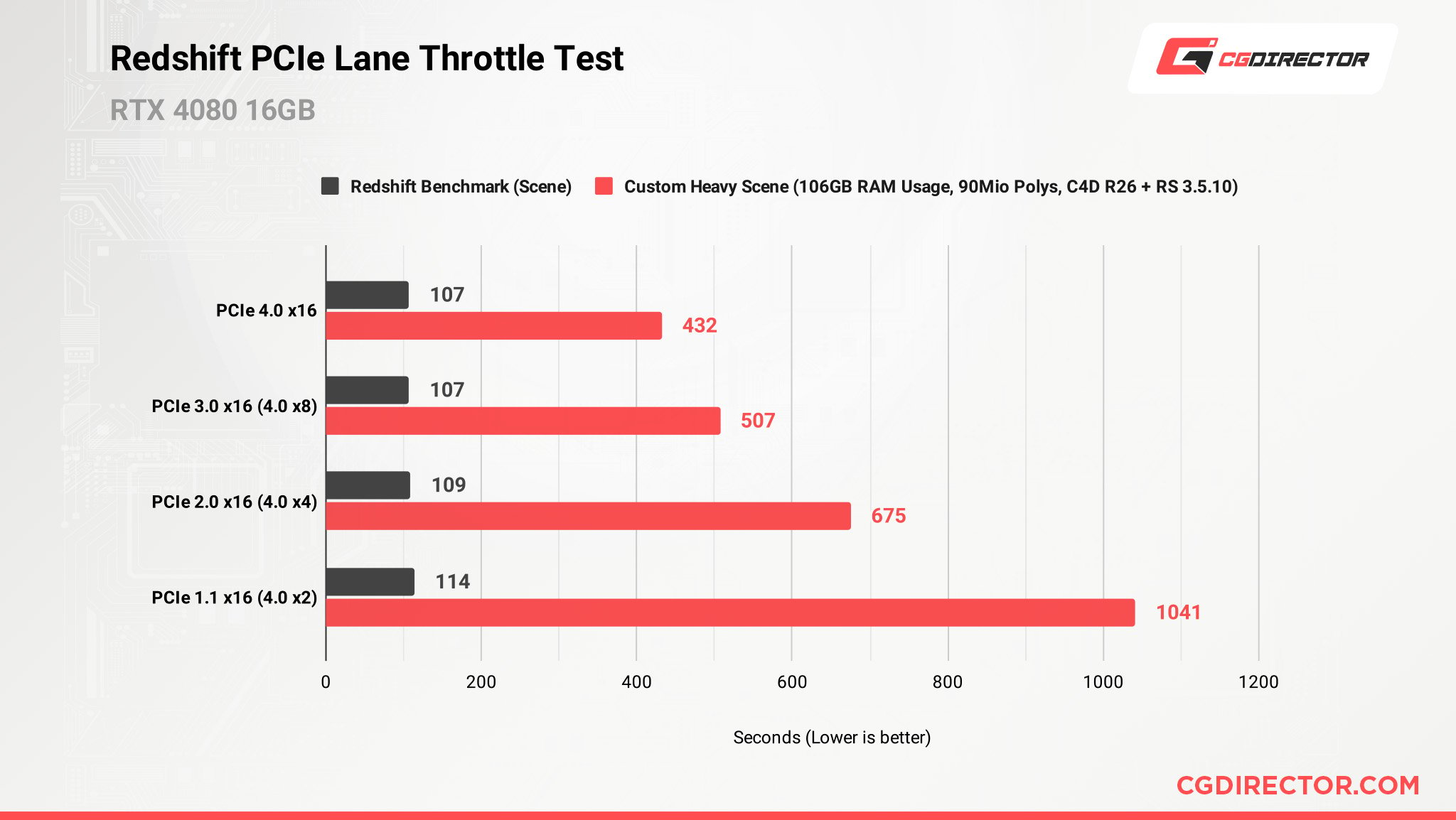

For the first run, we used a standard Redshift benchmark. However, for the second one, we used a custom scene that Alex was working on a few weeks ago. Here’s how that went:

As you can see from the render times here, older PCIe generations or fewer PCIe 4.0 lanes can affect performance quite a bit in complex scenes. Why? Well, bandwidth comes into play when the render engine can’t fit your scene into VRAM and has no option but to constantly shuffle data in and out of it.

If you work with relatively larger scenes and plan to use an RTX 40-series card, I’d recommend not dropping below PCIe 3.0 x8 (or PCIe 4.0 x4). Below that point, you’ll notice performance starting to suffer as your render engine struggles to send all the required data to your GPU.
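The PCIe 3.0 x8 / PCIe 4.0 x4 equivalence falls out of the per-lane math: each generation doubles the transfer rate, and PCIe 3.0 onward uses 128b/130b encoding. The Python sketch below computes approximate one-direction bandwidth (ignoring packet and protocol overhead, so real throughput is somewhat lower) and a best-case time to move a hypothetical 4 GB chunk of out-of-core scene data across the link once.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency for each PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def pcie_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Approximate one-direction link bandwidth in GB/s, ignoring protocol overhead."""
    rate_gt_s, encoding = PCIE_GENERATIONS[gen]
    return lanes * rate_gt_s * encoding / 8  # 8 bits per byte


def transfer_seconds(data_gb: float, gen: int, lanes: int) -> float:
    """Lower bound on the time to move `data_gb` of scene data across the link once."""
    return data_gb / pcie_bandwidth_gbps(gen, lanes)


# 4 GB is a hypothetical amount of scene data that doesn't fit in VRAM.
for gen, lanes in [(4, 16), (4, 8), (3, 8), (4, 4), (3, 4)]:
    print(f"PCIe {gen}.0 x{lanes:<2}: {pcie_bandwidth_gbps(gen, lanes):5.2f} GB/s, "
          f"4 GB in {transfer_seconds(4.0, gen, lanes):.2f} s")
```

Both PCIe 3.0 x8 and PCIe 4.0 x4 come out at roughly 7.9 GB/s. A render engine that has to stream data repeatedly pays this cost over and over during a render, which is why the gap shows up in heavy scenes and not in a lightweight benchmark scene.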

That said, if your scenes almost always fit within your VRAM, you’ll notice no difference in your workflow and can ignore bandwidth considerations entirely. The Redshift Benchmark scene is rather modest, easily fits into 8GB of VRAM, and therefore sees almost no slowdown at reduced PCIe bandwidth.

As with many things in life, the answer to the question: “does PCIe bandwidth matter?” is still a resounding “it depends!”

RTX 4080 Adobe Premiere Pro, After Effects, and DaVinci Resolve Benchmarks: Nothing to Write Home About

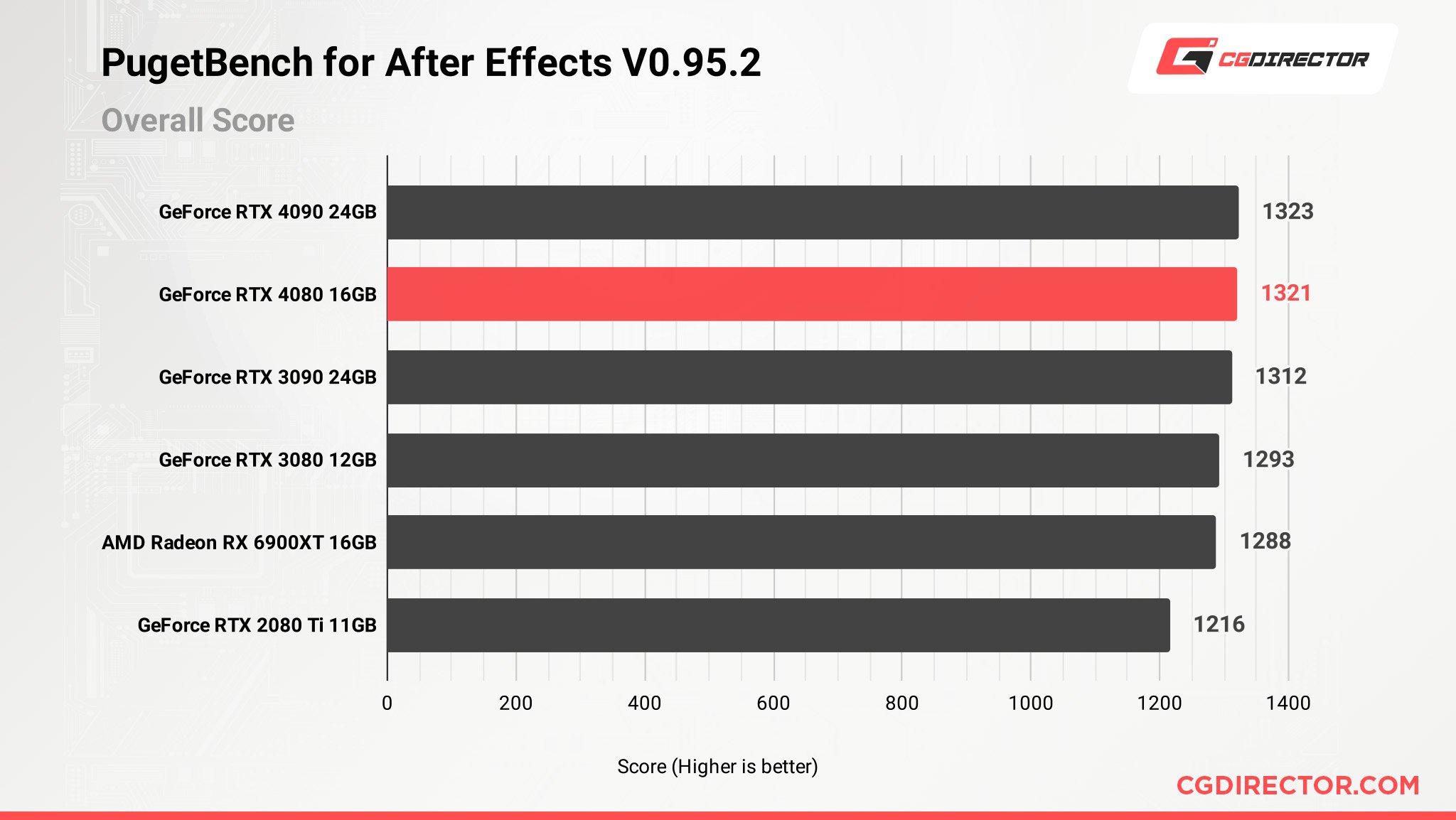

GeForce RTX 4080 Adobe After Effects Benchmark (PugetBench)

As you can see from the PugetBench scores, many older-gen parts offer similar performance to the RTX 4080. This isn’t a strike against the RTX 4080, though. Adobe isn’t exactly known for its optimization, so it’s no surprise that After Effects can’t fully leverage the potential of these RTX 40-series GPUs.

Nonetheless, if you’re primarily working with After Effects, there’s no compelling reason to look for an upgrade yet.

On a side note, AMD Radeon makes its first appearance in our charts with After Effects and delivers decent performance.

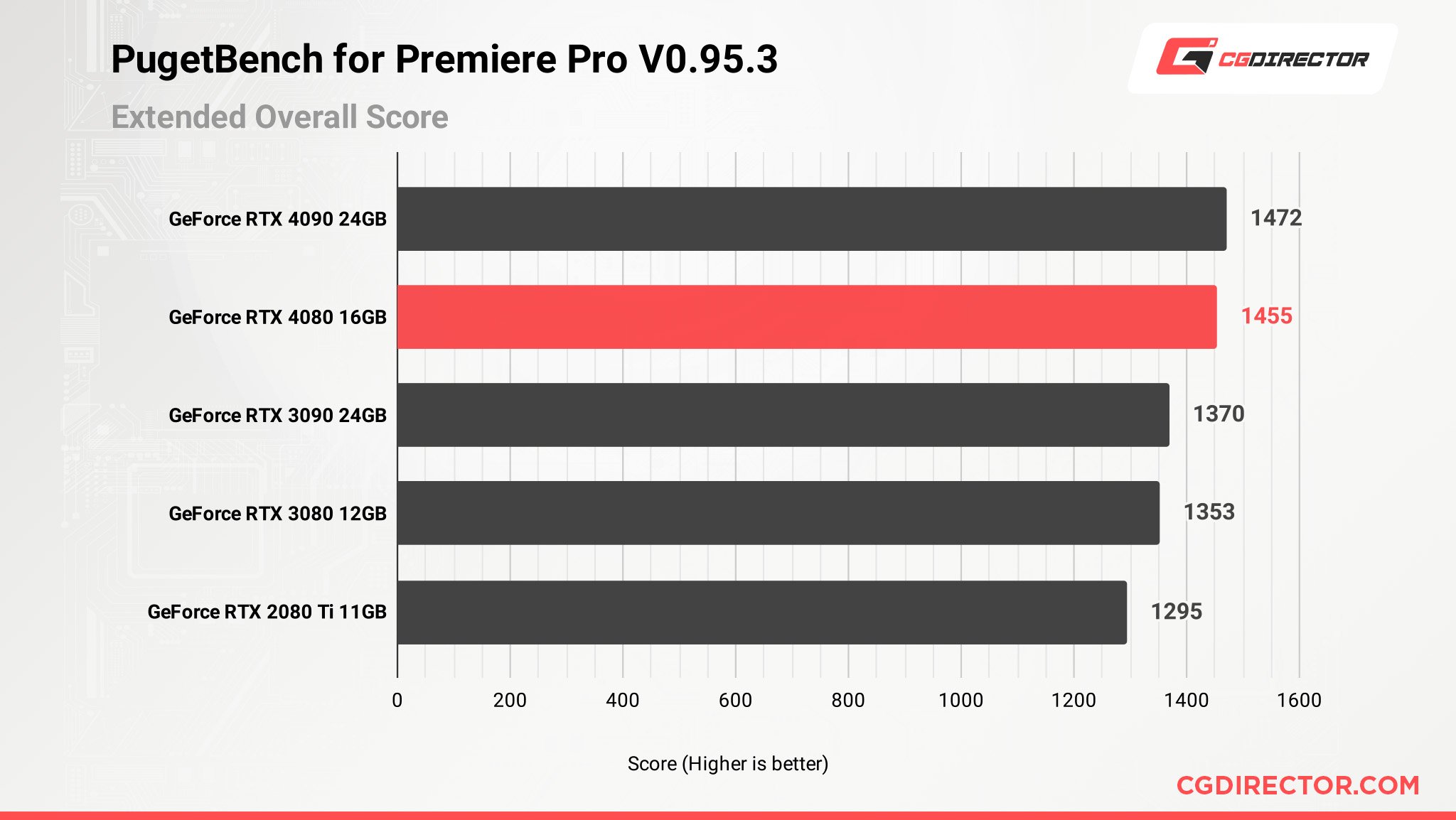

GeForce RTX 4080 Adobe Premiere Pro Benchmark (PugetBench)

While After Effects didn’t benefit from the additional graphics horsepower of the RTX 4080, Premiere Pro does offer a marginal uplift. You can expect close to 12% better performance if you’re considering a switch from something like an RTX 2080 Ti. I’d skip this generation if you’re already on a 30-series GPU.

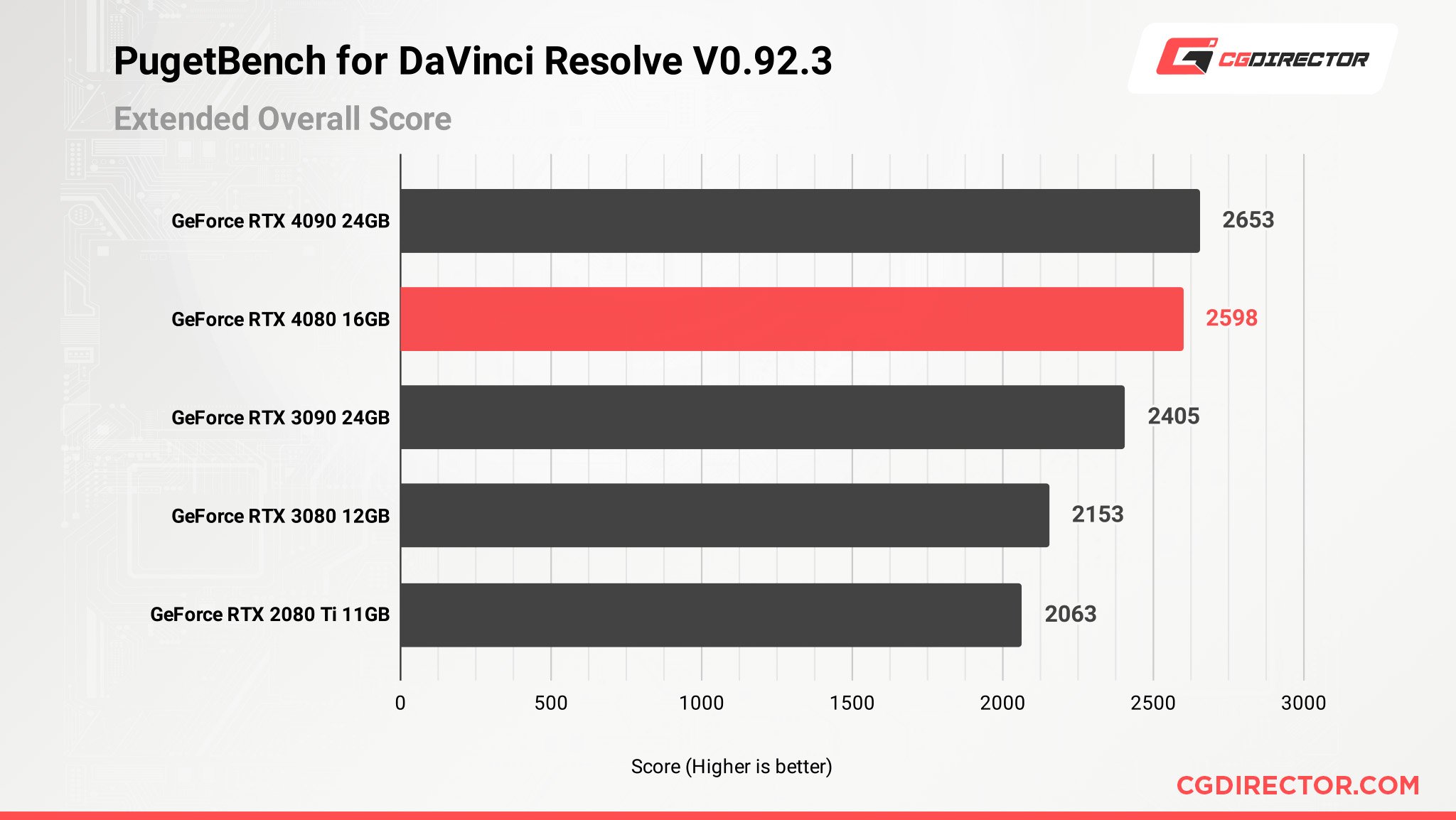

GeForce RTX 4080 DaVinci Resolve Benchmark (PugetBench)

Well, since the RTX 4080 clearly doesn’t help those Adobe apps too much, let’s see what Blackmagic can do.

Although there is an improvement here, I wouldn’t call it significant in any way. The RTX 4080 offers a 25% performance uplift over a 2-gen old RTX 2080 Ti, while it only musters a measly 8% improvement over an RTX 3090.

Again, the performance on offer isn’t too attractive unless you’re looking to shave away as much time as possible in your video production workflow.

That said, pros who rely on these apps might jump at the chance to speed up their workflows and get work done faster.

Is This the End of Quad GPU Setups?

Well, if you’re currently running render nodes with 3-4 GPUs, you’ll still be disappointed by the sizes of the RTX 40-series lineup. Even a 3-slot card like the RTX 4080 isn’t ideal because it puts a hard cap on how many fit in a full-tower case with an E-ATX motherboard.

So, yes. Quad-GPU setups without moving to PCIe risers and open benches are pretty much dead (for now). We would love some 2-slot blower options for professionals, but we haven’t seen too many of them for a couple of years now.

Fingers crossed that this release will be different!
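The slot math behind that hard cap can be sketched in a few lines of Python. The function below greedily places cards of a given width (in expansion slots) on a board’s x16 slot positions; the four-slot board layout and 8-slot case are hypothetical examples, and real boards also vary in lane wiring and in the spacing you’d want for airflow.

```python
def max_cards(slot_positions: list[int], card_width: int, case_slots: int) -> int:
    """Greedy count of cards that fit when each card covers `card_width` expansion
    slots starting at its PCIe slot. Positions are expansion-slot indices (0 = top).
    With equal card widths, placing at the earliest free slot is optimal."""
    placed = 0
    next_free = 0  # first expansion slot not yet covered by a card
    for pos in sorted(slot_positions):
        if pos >= next_free and pos + card_width <= case_slots:
            placed += 1
            next_free = pos + card_width
    return placed


# Hypothetical board: four x16 slots spaced two expansion slots apart, in an 8-slot case.
layout = [0, 2, 4, 6]
for width in (2, 3, 4):
    print(f"{width}-slot cards: {max_cards(layout, width, case_slots=8)}")
```

On that hypothetical layout, 2-slot cards fill all four PCIe slots, while 3-slot cards like the RTX 4080 Founders Edition top out at two.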

Why Not Just Use Workstation Blower Models?

We often get this question, and even companies selling to 3D professionals try making a case for them. There are two reasons why we don’t like going the Quadro (or whatever Nvidia calls them now) route for render nodes and workstations:

- Prices are way too high for professionals working as contractors to justify.

- Performance is slightly worse than that of their gaming counterparts.

Give CG professionals access to 2-slot blower models of the RTX 4080, and we’d be a happy bunch!

Assessing the GeForce RTX 4080 Founders Edition: Is it Finally Time to Upgrade?

If Nvidia’s promised MSRP stays put and you can find products close to the advertised price, I can see the value professionals will get from this GPU.

However, we’ve seen time and again that the announced MSRPs and actual pricing on shelves are two different things. So, we’ll be following up in a few months to update our charts to match.

As of now, I have nothing bad to say about the RTX 4080. Yes, it’s very cut down compared to the RTX 4090. But the performance is good, and the power draw stays reasonable.

Our temperatures never reached anywhere close to problem levels, even after hours of rendering, so the Founders Edition cooler is solid and will hold up well.

Overall, the RTX 4080 16GB Founders Edition graphics card is an excellent choice for content creators and CG artists who have been desperately looking for an upgrade over the past 2-3 years!

For 3D GPU Rendering Workloads, in particular, we’re happy to finally see a GPU that deserves a recommendation.

It’s just sad that the GeForce RTX 4080 would go from ‘good’ and ‘decent’ to absolute God tier with around a $200 price drop!

What’re your thoughts on the RTX 4080? Want us to run some other benchmarks? Let us know in the comments! 🙂


10 Comments

11 February, 2023

Would it be beneficial for me to switch from an RTX 3070 Ti to a 4080? Or would it be better to wait for another generation or for prices to improve? My 3070 Ti does struggle with some renders, though.

13 February, 2023

Hi Roland. That’s a tricky topic for one to tackle. On the one hand, the 4080 is noticeably superior, but buying one might not be all that sensible with the current state of the market. If you can snag one for an acceptable price then, by all means, go for it!

Additionally, you can’t always wait for the “next big thing,” and NVIDIA’s gen-on-gen improvement with its 4000 series GPUs has been quite impressive (to say the least). So if you need the horsepower and if your livelihood depends on it, it’s definitely a worthwhile purchase.

2 January, 2023

Should I keep my 3090 Ti until I can buy a 4090, or is it worth giving up the 24GB of VRAM to get a 4080?

3 January, 2023

It’ll depend on the type of scenes you’re rendering. If you don’t really use or need the 24GB of VRAM, the upgrade might be worth it. It would be most sensible, though, to just add a second GPU if your setup allows for it.

Alex

24 December, 2022

Is it worth switching from a 3090 Ti? I’m concerned about having less VRAM for my scenes.

25 December, 2022

Hey Mike, the RTX 3090 Ti already has 24GB of VRAM, and if you need more, there’s no further VRAM upgrade among consumer GPUs. Your only options are to pair multiple cards using NVLink to combine their VRAM or to consider workstation RTX GPUs.

Cheers!

Jerry

15 November, 2022

Thanks for the review. So would you say PCIe 5.0, and even PCIe 4.0 x16, isn’t really a necessity to run these new GPUs? I’m thinking of going the quad route with either the 4080 or 4090 on a mining rig. Running all of them at PCIe 4.0 x8 should be OK, right?

Thanks

15 November, 2022

Yes, you can easily run multi-GPU configs with the 4080 at PCIe 4.0 x8 for simple to medium-complexity scenes. With extremely heavy scenes, you might see some minor slowdowns from shuffling data in and out of VRAM/RAM.

Alex

2 January, 2023

How does running two 4080s help if you cannot NVLink them?

3 January, 2023

NVLink really only allows for VRAM sharing. Render engines can use multiple individual GPUs, each with only its own VRAM, just fine, though. The extra processing power adds up, but the VRAM amount PER GPU stays the same. This still scales almost linearly with every added GPU unless you have huge scenes that don’t fit into each GPU’s VRAM.

Cheers,

Alex